[Review] SG-One: Similarity Guidance Network for One-Shot Semantic Segmentation

1. Motivation

- Traditional segmentation models such as U-Net and FCN require large amounts of pixel-level labeled data, which is expensive to annotate. One-shot segmentation is applied to reduce this labeling cost.

- One-shot segmentation must recognize and segment objects of a new class from only one annotated example.

- Earlier one-shot segmentation methods use a pair of networks: one is trained to extract features from the support images and the other from the query images. This design has two weaknesses: 1) the parameters of the two parallel networks are redundant, which makes the model prone to overfitting and wastes computational resources; 2) combining the support and query features by mere multiplication is inadequate for guiding the query network to learn high-quality segmentation masks.

- To overcome these weaknesses, the SG-One model is proposed. Its main idea is to exploit the similarities between the support images and the query images.

- To extract these similarities, the model uses masked average pooling. As a result, the cosine similarity with the support representation is low for query features belonging to the background and high for those belonging to the object.

2. Method

2.1. Problem Definition

- There are three kinds of datasets: 1) a training set, 2) a support set, and 3) a testing set. The training set and the support set share no classes. The goal is therefore to train the network on the training set so that it can predict segmentation masks on the testing set given only a few reference examples from the support set.
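The class-disjoint setup can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's data pipeline: the helper names `split_classes` and `sample_episode`, the 20-class / 5-unseen split and the dictionary-of-lists dataset layout are all hypothetical.

```python
import random


def split_classes(all_classes, num_unseen, seed=0):
    """Hypothetical helper: split class ids into disjoint seen/unseen groups.

    Seen classes are used for training; unseen classes appear only in the
    support and testing sets, so the two groups never intersect.
    """
    rng = random.Random(seed)
    classes = sorted(all_classes)
    rng.shuffle(classes)
    unseen = set(classes[:num_unseen])
    seen = set(classes[num_unseen:])
    return seen, unseen


def sample_episode(images_by_class, cls, rng):
    """Hypothetical helper: draw one annotated support image and one query
    image of the same class, i.e. a single one-shot episode."""
    support, query = rng.sample(images_by_class[cls], 2)
    return support, query


# Usage with toy data: 20 class ids, 5 of them held out as unseen classes.
seen, unseen = split_classes(range(1, 21), num_unseen=5)
toy_images = {c: [f"img_{c}_{k}" for k in range(3)] for c in range(1, 21)}
rng = random.Random(1)
support, query = sample_episode(toy_images, min(unseen), rng)
```

At test time, episodes are drawn only from the unseen classes, so the network never sees those classes during training.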

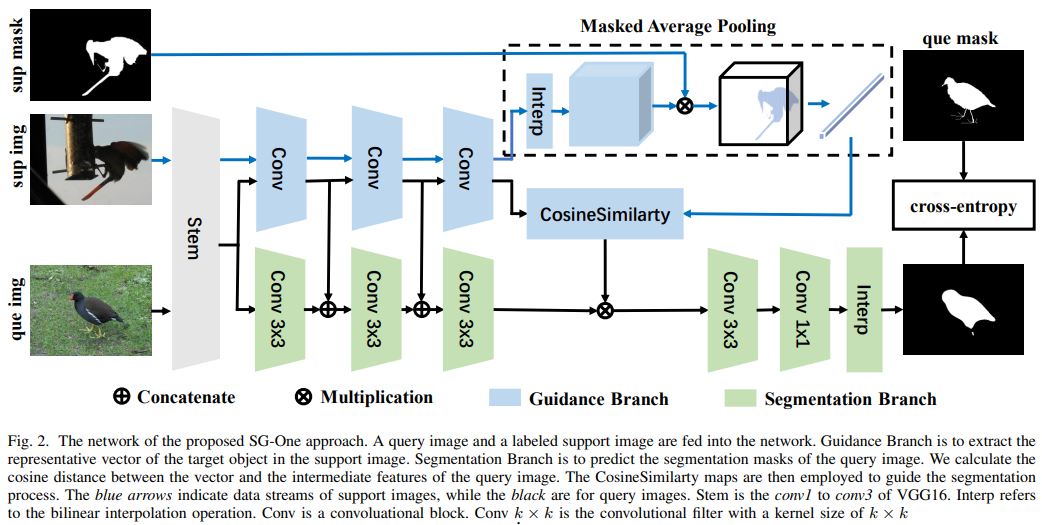

2.2. Proposed Model

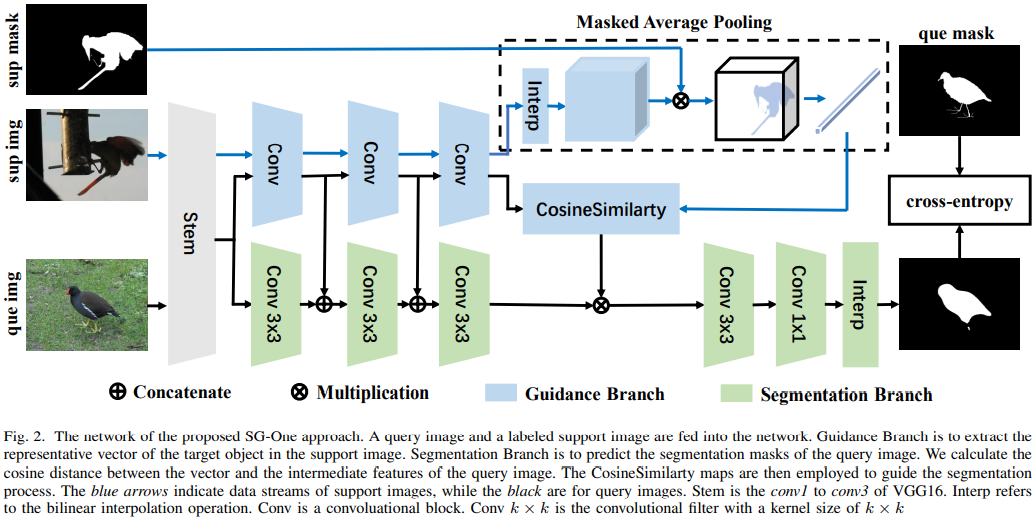

2.2.1. Masked Average Pooling

- This module extracts a representative, object-related feature vector from each support image.

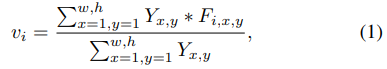

- The proposed model makes use of contextual regions. To do so, the model first extracts the feature map from the image and resizes it to the size of the image's mask with bilinear interpolation. It then computes the feature vector by averaging the features of the pixels within the object region, as in equation (1).

In this way, the model extracts the feature of the object region while discarding irrelevant information such as the background.
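Masked average pooling can be sketched in plain Python. This is a minimal sketch under several assumptions: feature maps are nested lists of shape C x h x w, the mask is a binary H x W grid (1 = object), and bilinear interpolation uses align-corners sampling. The paper's exact interpolation settings are not stated in this review, and the function names are hypothetical.

```python
def bilinear_resize(channel, out_h, out_w):
    """Resize one 2-D channel (list of rows) to out_h x out_w with bilinear
    interpolation, using align-corners sampling (an assumption)."""
    in_h, in_w = len(channel), len(channel[0])
    resized = []
    for i in range(out_h):
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            top = channel[y0][x0] * (1 - dx) + channel[y0][x1] * dx
            bottom = channel[y1][x0] * (1 - dx) + channel[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        resized.append(row)
    return resized


def masked_average_pooling(features, mask):
    """features: C x h x w nested lists from the support image.
    mask: H x W binary mask (1 = object pixel) at image resolution.
    Returns one C-dimensional vector: the mean feature over object pixels."""
    height, width = len(mask), len(mask[0])
    area = sum(sum(row) for row in mask)
    if area == 0:
        raise ValueError("mask contains no object pixels")
    vector = []
    for channel in features:
        upsampled = bilinear_resize(channel, height, width)  # feature map -> mask size
        total = sum(upsampled[y][x]
                    for y in range(height) for x in range(width) if mask[y][x])
        vector.append(total / area)
    return vector


# Usage: a single-channel 2x2 feature map pooled under two 3x3 masks.
features = [[[0.0, 0.0], [0.0, 1.0]]]
full_mask = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
corner_mask = [[0, 0, 0], [0, 0, 0], [0, 0, 1]]
print(masked_average_pooling(features, full_mask))    # [0.25]
print(masked_average_pooling(features, corner_mask))  # [1.0]
```

Changing the mask changes which pixels contribute to the average. Background pixels never enter the sum, which is how the representative vector discards them.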

2.2.2. Similarity Guidance

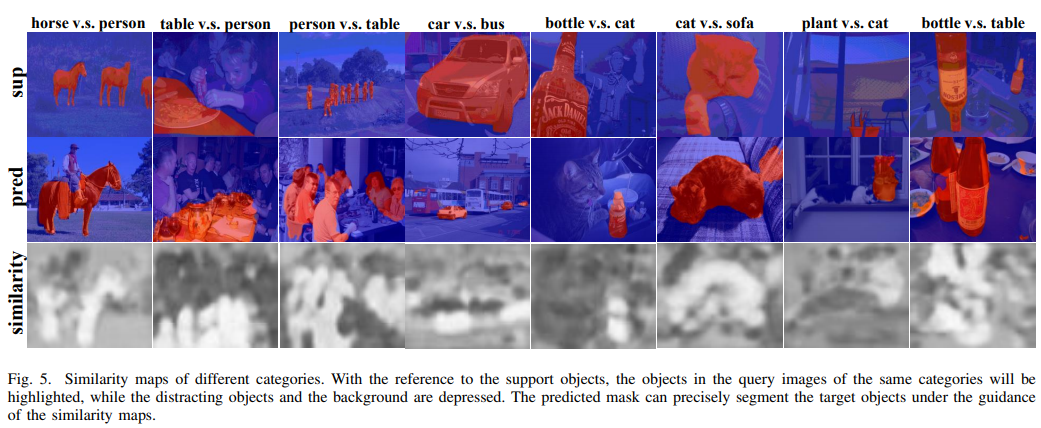

- This module combines the feature vectors of the support and query images. The masked average pooling layer extracts a feature vector from the object region of the support image. For the query image, the model extracts a feature vector at each pixel and computes its cosine similarity with the support vector, as in equation (2).
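The per-pixel cosine similarity can be sketched as follows. This is a minimal sketch, assuming nested-list feature maps of shape C x h x w. The `eps` floor in the denominator is a common guard against division by zero and is an assumption here; the function name is hypothetical.

```python
import math


def cosine_similarity_map(support_vector, query_features, eps=1e-8):
    """support_vector: C values from masked average pooling.
    query_features: C x h x w nested lists for the query image.
    Returns an h x w map of cosine similarities in [-1, 1]."""
    channels = len(query_features)
    height, width = len(query_features[0]), len(query_features[0][0])
    if len(support_vector) != channels:
        raise ValueError("channel mismatch between support vector and query features")
    s_norm = math.sqrt(sum(v * v for v in support_vector))
    sim_map = []
    for y in range(height):
        row = []
        for x in range(width):
            q = [query_features[c][y][x] for c in range(channels)]  # pixel feature
            dot = sum(a * b for a, b in zip(support_vector, q))
            q_norm = math.sqrt(sum(v * v for v in q))
            # eps guards against division by zero for all-zero vectors
            row.append(dot / max(s_norm * q_norm, eps))
        sim_map.append(row)
    return sim_map


# Usage: a support vector pointing along channel 0, and four query pixels.
support = [1.0, 0.0]
query = [[[1.0, 0.0, -1.0, 0.0]],   # channel 0 (1 x 4)
         [[0.0, 1.0, 0.0, 0.0]]]    # channel 1 (1 x 4)
print(cosine_similarity_map(support, query))  # [[1.0, 0.0, -1.0, 0.0]]
```

Pixels whose features point the same way as the support vector score close to 1. Unrelated or opposite features score near 0 or below.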

- As a result, the model obtains a similarity map that indicates the object region in the query image. The paper then multiplies this similarity map with the feature maps of the query image from the segmentation branch.
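The pixel-wise guidance step can be sketched as follows. This is a minimal sketch, assuming the segmentation features are a C x h x w nested list and the similarity map is h x w at the same resolution. Each pixel's similarity scales every channel at that pixel. The function name is hypothetical.

```python
def guide_features(seg_features, sim_map):
    """Multiply every channel of the segmentation-branch features (C x h x w)
    by the similarity map (h x w) at each pixel."""
    return [[[value * sim_map[y][x] for x, value in enumerate(row)]
             for y, row in enumerate(channel)]
            for channel in seg_features]


# Usage: two channels over a 1 x 2 map; the second pixel has zero similarity.
seg = [[[2.0, 4.0]], [[1.0, 1.0]]]
sim = [[0.5, 0.0]]
print(guide_features(seg, sim))  # [[[1.0, 0.0]], [[0.5, 0.0]]]
```

Pixels dissimilar to the support object are suppressed across all channels, while similar pixels keep most of their activation.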

2.3. Similarity Guidance Method

- The stem is a set of FCN-style convolutional layers that extract features from both the support and query images.

- The Similarity Guidance Branch receives the extracted features of both the query and support images and produces the similarity map. In this branch, conv blocks extract features from the inputs. The resulting feature vectors are used to compute the closeness between the support-image feature and the feature at each pixel of the query image.

- The Segmentation Branch performs pixel-wise classification. It uses conv blocks to obtain features for segmentation. In the last two layers, the inputs are concatenated with the similarity map, and the paper fuses the generated feature maps with the similarity maps by multiplication at each pixel. Finally, the output is generated by decoding the feature maps.
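The two-branch data flow can be sketched as a toy forward pass. This is a deliberately simplified sketch, not the paper's architecture, and it rests on several assumptions:

- each conv block is replaced by a hypothetical 1x1 convolution (`conv1x1`);
- the support mask is assumed to be already at feature resolution, so no resizing is done;
- the concatenation in the last two layers is omitted;
- the decoder is a hypothetical 1x1 head followed by a per-pixel argmax over (background, object).

All function names are illustrative.

```python
import math


def conv1x1(features, weights):
    """Hypothetical stand-in for a conv block: a 1x1 convolution (per-pixel
    channel mixing). weights[o][c] maps input channel c to output channel o."""
    height, width = len(features[0]), len(features[0][0])
    return [[[sum(wc * features[c][y][x] for c, wc in enumerate(w_o))
              for x in range(width)] for y in range(height)] for w_o in weights]


def masked_average(features, mask):
    """Masked average pooling, assuming the mask is already at feature resolution."""
    area = sum(sum(row) for row in mask)
    return [sum(ch[y][x] for y, row in enumerate(mask) for x, m in enumerate(row) if m) / area
            for ch in features]


def cosine_map(vec, features, eps=1e-8):
    """Cosine similarity between vec and the feature at every pixel."""
    v_norm = math.sqrt(sum(v * v for v in vec))
    height, width = len(features[0]), len(features[0][0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            q = [ch[y][x] for ch in features]
            q_norm = math.sqrt(sum(v * v for v in q))
            row.append(sum(a * b for a, b in zip(vec, q)) / max(v_norm * q_norm, eps))
        out.append(row)
    return out


def sg_one_forward(support_feat, support_mask, query_feat, guide_w, seg_w, head_w):
    """Toy forward pass; support_feat and query_feat are stem outputs (C x h x w)."""
    # Similarity guidance branch: conv block on both inputs, MAP on the support,
    # cosine similarity against every query pixel.
    g_support = conv1x1(support_feat, guide_w)
    g_query = conv1x1(query_feat, guide_w)
    sim = cosine_map(masked_average(g_support, support_mask), g_query)
    # Segmentation branch: conv block on the query, then pixel-wise multiplication.
    seg = conv1x1(query_feat, seg_w)
    fused = [[[v * sim[y][x] for x, v in enumerate(row)] for y, row in enumerate(ch)]
             for ch in seg]
    # Hypothetical decoder: 1x1 head to (background, object) logits, then argmax.
    logits = conv1x1(fused, head_w)
    height, width = len(sim), len(sim[0])
    mask = [[int(logits[1][y][x] > logits[0][y][x]) for x in range(width)]
            for y in range(height)]
    return mask, sim


# Usage: support object pixel has feature (0, 1); query pixels 0 and 2 resemble it.
identity = [[1.0, 0.0], [0.0, 1.0]]
support_feat = [[[1.0, 0.0]], [[0.0, 1.0]]]
support_mask = [[0, 1]]
query_feat = [[[0.0, 1.0, 0.0]], [[1.0, 0.0, 2.0]]]
head_w = [[0.0, 0.0], [1.0, 1.0]]
mask, sim = sg_one_forward(support_feat, support_mask, query_feat, identity, identity, head_w)
print(mask)  # [[1, 0, 1]]
```

The similarity map zeroes out the query pixel that does not resemble the support object, so the toy head labels it as background.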

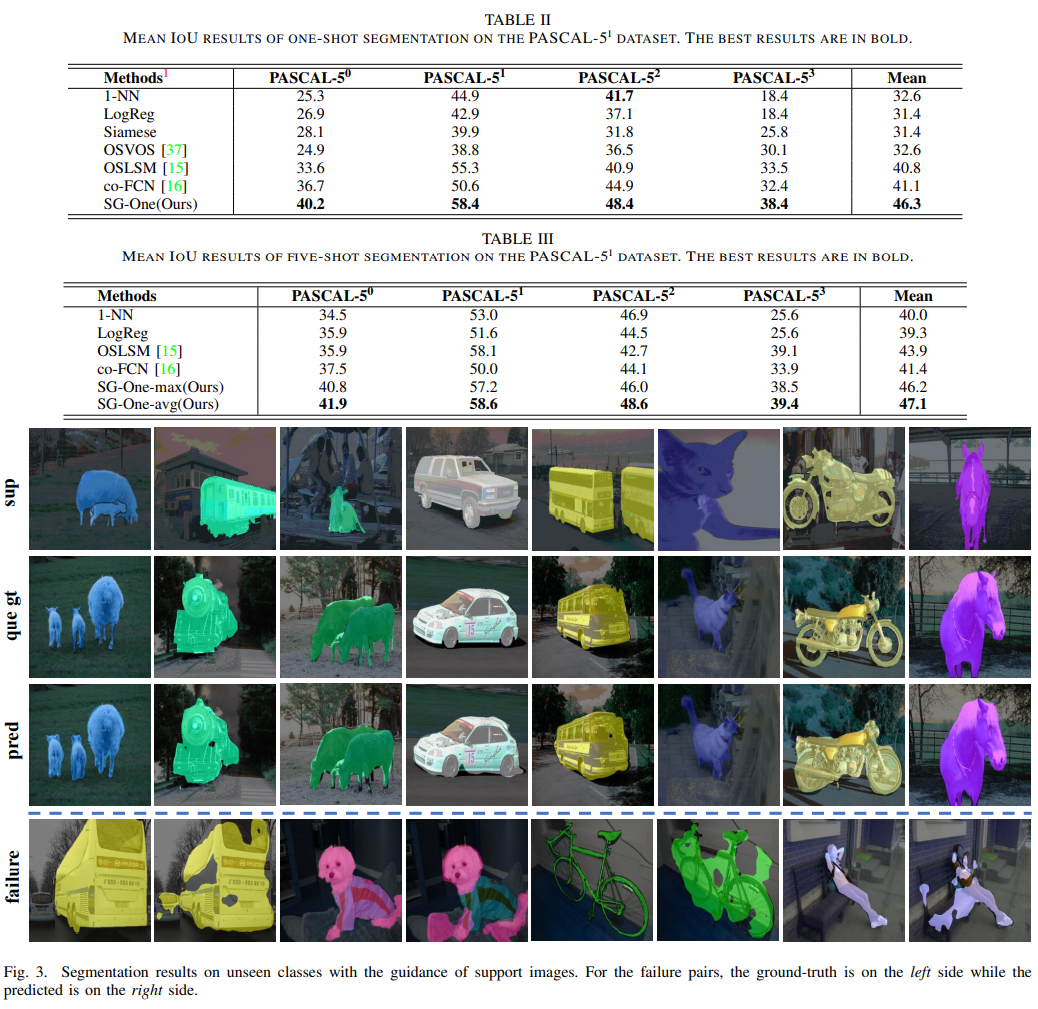

3. Results