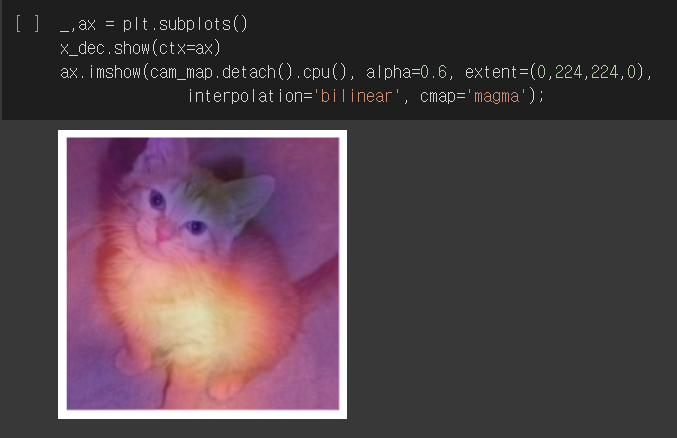

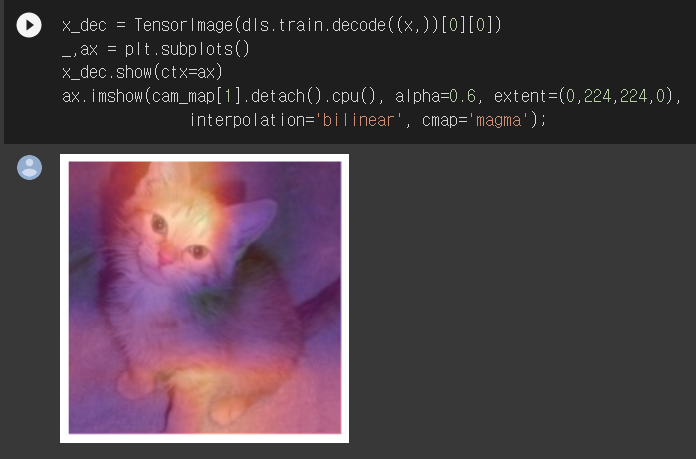

reference: CNN Interpretation with CAM

The class activation map (CAM) was introduced by Bolei Zhou et al. in "Learning Deep Features for Discriminative Localization". It combines the output of the last convolutional layer (just before the average pooling layer) with the predictions, by way of the final linear layer's weights for the class of interest, to produce a heatmap of the image regions that drove the model's decision. This makes it a useful tool for interpretation.
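To make the idea concrete, here is a minimal NumPy sketch of how such a heatmap could be computed. It assumes a network whose head is average pooling followed by a single linear layer without bias. The function name `class_activation_map` and the toy shapes are illustrative assumptions, not taken from the paper or from any library.

```python
import numpy as np

def class_activation_map(features, fc_weights, class_idx):
    """Weight each channel of the last conv feature map by the final linear
    layer's weight for `class_idx`, then sum over channels.

    features:   (C, H, W) activations of the last conv layer for one image
    fc_weights: (num_classes, C) weight matrix of the final linear layer
    """
    # Contract over the channel axis: (C,) with (C, H, W) -> (H, W)
    return np.tensordot(fc_weights[class_idx], features, axes=1)

# Toy stand-ins for real activations and weights (hypothetical shapes).
rng = np.random.default_rng(0)
features = rng.random((8, 7, 7))            # 8 channels on a 7x7 grid
fc_weights = rng.standard_normal((2, 8))    # 2-class linear head, no bias

cam = class_activation_map(features, fc_weights, class_idx=1)

# Average pooling followed by the linear layer gives the class scores.
logits = fc_weights @ features.mean(axis=(1, 2))
```

Under these assumptions, the spatial mean of `cam` equals `logits[1]`, which is why each cell of the map can be read as that location's contribution to the class score. In practice the map is usually upsampled to the input resolution and overlaid on the image.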

A variant, introduced in 2016 in the paper "Grad-CAM: Why Did You Say That? Visual Explanations from Deep Networks via Gradient-based Localization", uses the gradients of the final activation for the desired class. If you remember a little bit about the backward pass, the gradients of the output of the last layer with respect to the input of that layer are equal to the layer weights, since it is a linear layer: each output is a weighted sum of the inputs, so its derivative with respect to an input is simply the corresponding weight.
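The claim about the gradients of a linear layer can be checked numerically. The sketch below uses central finite differences rather than a framework's autograd, purely so that it stays self-contained. The variables `W`, `b`, `x` and the helper `linear` are hypothetical toy values, not part of any model discussed here.

```python
import numpy as np

rng = np.random.default_rng(0)
W = rng.standard_normal((3, 5))   # weights of a toy linear layer: 5 inputs, 3 classes
b = rng.standard_normal(3)        # bias (drops out of the gradient)
x = rng.standard_normal(5)        # input to the layer, e.g. pooled features

def linear(v):
    return W @ v + b

# Column j of the Jacobian holds d(output)/d(x_j), estimated by central differences.
eps = 1e-6
jac = np.stack(
    [(linear(x + eps * e) - linear(x - eps * e)) / (2 * eps) for e in np.eye(5)],
    axis=1,
)

# Gradient of one class score with respect to the layer input.
class_idx = 2
grad_class = jac[class_idx]
```

Here `jac` matches `W` up to floating-point error, and `grad_class` matches the row `W[class_idx]`. So for the last layer, the gradient of a class score carries the same information as that class's weights.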