Immutability and Lazy processing


Python variables

- mutable

- flexibility

- potential for issues with concurrency

- likely adds complexity
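This is a small plain-Python illustration, not Spark code, of why mutability brings flexibility but also risk. Two names can refer to the same list, so a change made through one name is visible through the other. If two concurrent tasks held that list, one could change data the other is still reading. The variable names are hypothetical.

```python
# Lists are mutable: assigning one name to another does NOT copy the list.
shared = [1, 2, 3]
alias = shared        # both names now point at the same list object
alias.append(4)       # modifies that single list in place
print(shared)         # [1, 2, 3, 4] : the change is visible through both names
```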


immutability

- a component of functional programming

- defined once

- unable to be directly modified

- recreated if reassigned

- able to be shared efficiently
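Here is a minimal sketch of the same ideas using a frozen dataclass from the standard library. The `Row` class and its fields are hypothetical. Direct modification is rejected. "Changing" a value means building a new object, while the original stays intact and can be shared safely. Spark DataFrames behave in a similar spirit: a transformation such as `.withColumn(...)` returns a new DataFrame rather than modifying the existing one.

```python
from dataclasses import dataclass, FrozenInstanceError, replace


@dataclass(frozen=True)
class Row:  # hypothetical record type used only for illustration
    name: str
    year: int


original = Row("Alice", 19)
try:
    original.year = 2019  # direct modification of a frozen instance is rejected
except FrozenInstanceError:
    print("cannot modify a frozen Row")

# "Reassigning" builds a new object; the original is left untouched
updated = replace(original, year=original.year + 2000)
print(original)  # Row(name='Alice', year=19)
print(updated)   # Row(name='Alice', year=2019)
```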


lazy processing?

Understanding Parquet

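- Spark and CSV files

  - slow to parse

  - files cannot be filtered without reading all of their contents

- The Parquet format

  - columnar data format

  - supported in Spark and other data processing frameworks

  - supports predicate pushdown (filters are applied at the data source, so data that cannot match can be skipped instead of loaded)

  - automatically stores schema information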

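```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

# Two equivalent ways to read a Parquet file
df = spark.read.format('parquet').load('filename.parquet')
df = spark.read.parquet('filename.parquet')

# Register the DataFrame as a temporary view so it can be queried with SQL
df.createOrReplaceTempView('name')
df2 = spark.sql('SQL QUERIES')  # placeholder: any SQL query against the 'name' view
```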
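To show why predicate pushdown and a columnar layout save work, here is a toy model in plain Python. Parquet files are organized into row groups whose column chunks carry min/max statistics, and a reader can use those statistics to skip row groups a filter rules out. The dictionary layout and the `read_where_year_at_least` helper below are hypothetical simplifications, not Parquet's real file format or any Spark/Parquet API.

```python
# Toy model only: loosely inspired by Parquet row groups and min/max statistics.
row_groups = [
    {"stats": {"year": (2001, 2005)},
     "columns": {"year": [2001, 2003, 2005], "name": ["a", "b", "c"]}},
    {"stats": {"year": (2010, 2015)},
     "columns": {"year": [2010, 2012, 2015], "name": ["d", "e", "f"]}},
]


def read_where_year_at_least(groups, min_year, wanted):
    """Return only the `wanted` columns for rows with year >= min_year."""
    result = {col: [] for col in wanted}
    groups_scanned = 0
    for group in groups:
        _, max_year = group["stats"]["year"]
        if max_year < min_year:
            continue  # pushdown: statistics rule the whole group out, so it is never read
        groups_scanned += 1
        years = group["columns"]["year"]  # columnar: only the needed columns are touched
        for i, year in enumerate(years):
            if year >= min_year:
                for col in wanted:
                    result[col].append(group["columns"][col][i])
    return result, groups_scanned


result, groups_scanned = read_where_year_at_least(row_groups, 2010, ["name"])
print(result)          # {'name': ['d', 'e', 'f']}
print(groups_scanned)  # 1
```

In real Spark, calling `.explain()` on a filtered Parquet read typically lists the pushed-down conditions under `PushedFilters` in the physical plan.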

Partitioning and lazy processing

- Partitioning

  - DataFrames are broken up into partitions

  - Partition size can vary

  - Each partition is handled independently

- Lazy processing

- Transformations are lazy

- .withcolumn(...)

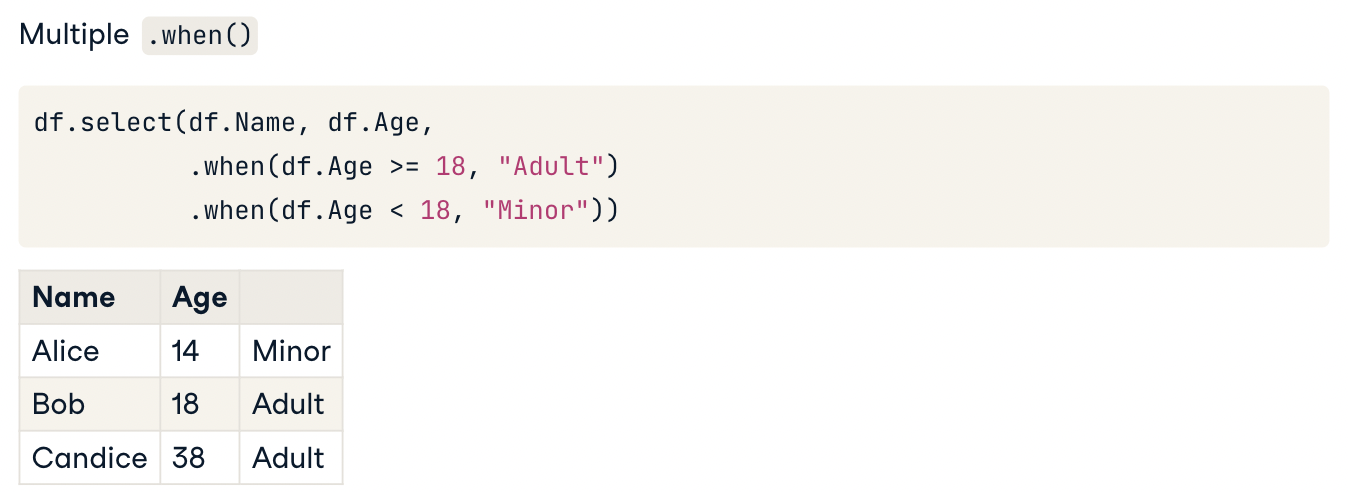

- .select(...)

- Nothing is actually done until an action is performed

- .count(...)

- .write(...)

- Transformations can be re-ordered for best performance

- Sometimes causes unexpected behavior

- Transformations are lazy

- spark is Lazy!
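The partitioning and lazy-processing points above can be sketched with a toy plain-Python model. `LazyFrame` and `split_into_partitions` are hypothetical names, not Spark APIs. Rows are split into partitions. Transformations (`map`, `filter`) only record steps and return a new object. Actions (`collect`, `count`) finally run the steps, one partition at a time and independently of the others.

```python
# Toy model (hypothetical, not Spark): partitions + recorded transformations + actions.

def split_into_partitions(rows, size):
    """Chunk rows into partitions of at most `size` rows each."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]


class LazyFrame:
    def __init__(self, partitions, steps=()):
        self.partitions = partitions  # list of lists, one inner list per partition
        self.steps = tuple(steps)     # recorded, not-yet-executed transformations

    # Transformations: return a NEW LazyFrame and do no work yet
    def map(self, fn):
        return LazyFrame(self.partitions, self.steps + (("map", fn),))

    def filter(self, predicate):
        return LazyFrame(self.partitions, self.steps + (("filter", predicate),))

    def _run_partition(self, rows):
        # Apply every recorded step to one partition, independently of the others
        for kind, fn in self.steps:
            if kind == "map":
                rows = [fn(r) for r in rows]
            else:
                rows = [r for r in rows if fn(r)]
        return rows

    # Actions: these trigger execution of the recorded steps
    def collect(self):
        return [r for part in self.partitions for r in self._run_partition(part)]

    def count(self):
        return sum(len(self._run_partition(part)) for part in self.partitions)


calls = []  # records every time the map function actually runs


def add_2000(year):
    calls.append(year)
    return year + 2000


partitions = split_into_partitions([1, 5, 9, 12, 15, 18], size=2)  # 3 partitions
lf = LazyFrame(partitions).map(add_2000).filter(lambda y: y >= 2010)
print(calls)         # [] : defining the transformations ran nothing
print(lf.collect())  # [2012, 2015, 2018]
print(len(calls))    # 6 : the action ran add_2000 once per row
```

Calling another action such as `lf.count()` reruns every recorded step. This loosely mirrors how Spark recomputes an uncached DataFrame for each action.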