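Overview

Part 1 summarized my study notes and the theory behind Cilium. Part 2 walks through the solutions to the five lab assignments.

Assignment

Goal: complete all five lab assignments below and submit a write-up or screenshots for each.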

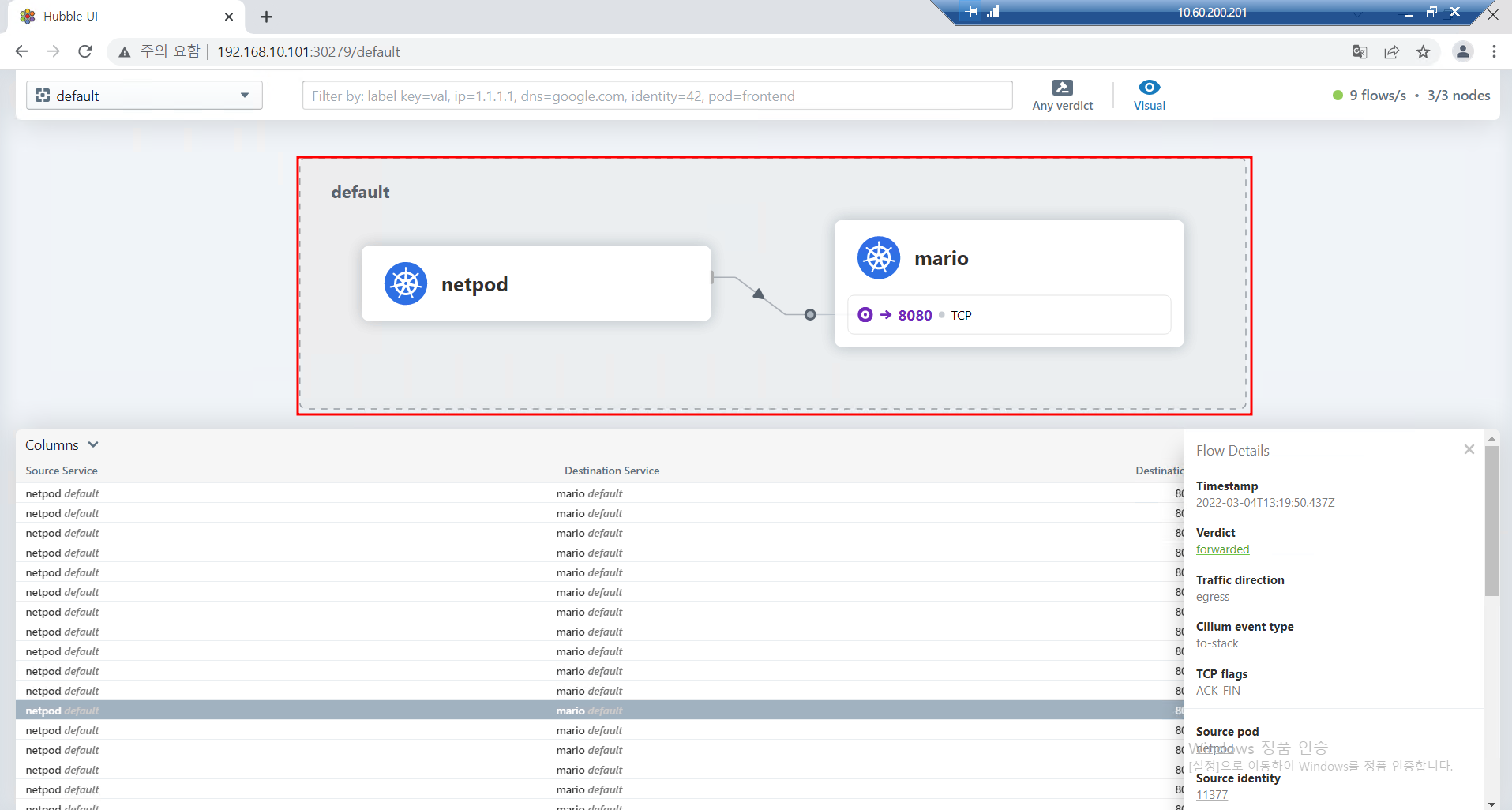

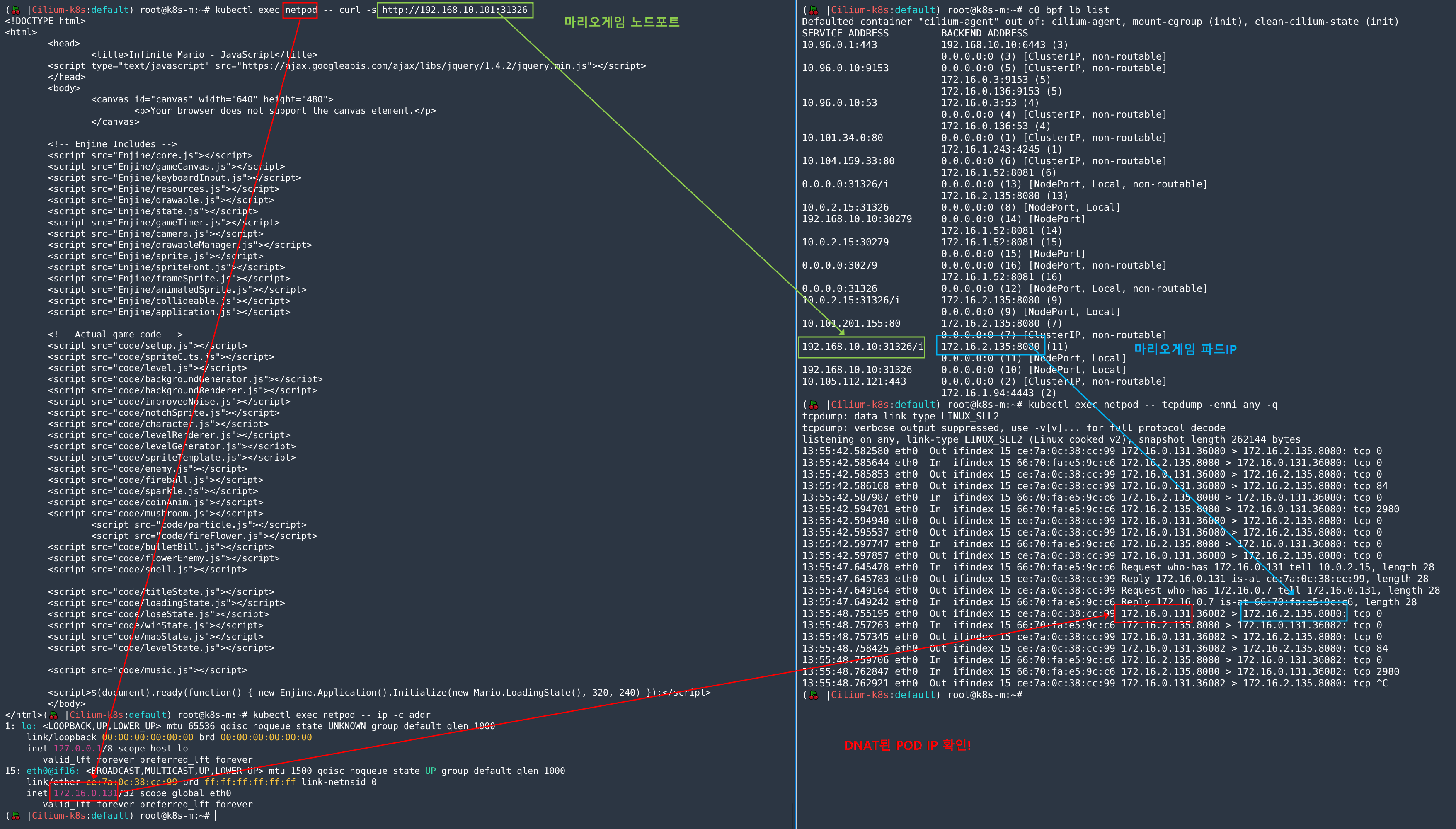

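- Pick one of the 'sample MSA' apps, deploy it, and expose it externally with a Service. Submit screenshots of a tcpdump capture showing that DNAT happens directly inside the pod for that Service traffic!

- Follow the Network Policy (L3, L4, L7) lab, configure all three kinds of policy, and submit screenshots (including Hubble UI/CLI)! ⇒ Excluded from the assignment because of a bug

- [Lab 6] Deploy an apache web pod, configure a Service (NodePort), verify DSR, and submit screenshots along with the relevant packet dump contents

- [Lab 9] Complete the Egress Gateway lab and submit screenshots

- [Lab 10] Complete the BGP for LoadBalancer VIP lab and submit screenshots

- Follow the Bandwidth Manager lab, limit a specific pod to a maximum bandwidth of 30M, and submit screenshots showing that it works!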

1. 풀이

'샘플 MSA' 중 1개를 선택하여 배포하고 외부로 노출(Service) 설정을 하자. 설정한 서비스 통신에서 tcpdump 로 파드 내에서 바로 DNAT 되는 부분에 대한 관련 스샷을 제출!

참고 : 4. 서비스 통신 확인

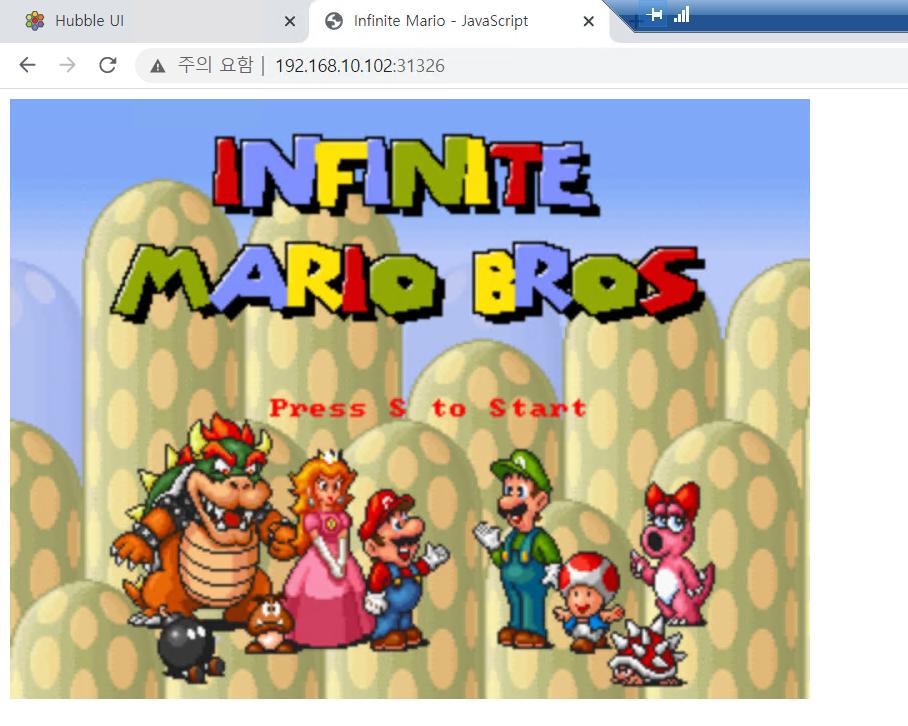

- Mario App 배포

cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mario
  labels:
    app: mario
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mario
  template:
    metadata:
      labels:
        app: mario
    spec:
      containers:
      - name: mario
        image: pengbai/docker-supermario
---
apiVersion: v1
kind: Service
metadata:
  name: mario
spec:
  selector:
    app: mario
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  type: NodePort
  externalTrafficPolicy: Local
EOF

- Verify NodePort access

- Create netpod

Create a netpod for running packet dumps and curl commands.

cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: netpod
  labels:
    app: netpod
spec:
  nodeName: k8s-m
  containers:
  - name: netshoot-pod
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

2. Solution

1) Lab 6

Deploy an apache web pod, configure a Service (NodePort), verify DSR, and submit screenshots along with the relevant packet dump contents

Reference: 6. Direct Server Return (DSR)

- Deploy the Apache app

cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: apache
  labels:
    app: apache
spec:
  replicas: 1
  selector:
    matchLabels:
      app: apache
  template:
    metadata:
      labels:
        app: apache
    spec:
      containers:
      - name: apache
        image: httpd
---
apiVersion: v1
kind: Service
metadata:
  name: apache
spec:
  selector:
    app: apache
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  type: NodePort
EOF

- Verify NodePort access

- Check the DSR configuration

# Check the DSR mode

cilium config view | grep dsr

bpf-lb-mode dsr

c0 status --verbose | grep 'KubeProxyReplacement Details:' -A7

Defaulted container "cilium-agent" out of: cilium-agent, mount-cgroup (init), clean-cilium-state (init)

KubeProxyReplacement Details:

Status: Strict

Socket LB Protocols: TCP, UDP

Devices: enp0s3 10.0.2.15, enp0s8 192.168.10.10 (Direct Routing)

Mode: DSR

Backend Selection: Random

Session Affinity: Enabled

Graceful Termination: Enabled

- DSR lab

# Check the apache NodePort

kubectl get svc apache -o jsonpath='{.spec.ports[0].nodePort}';echo

32477

------------------------------------------------------------

# Generate traffic from k8s-pc

SVCNPORT=$(kubectl get svc apache -o jsonpath='{.spec.ports[0].nodePort}')

while true; do curl -s 192.168.10.10:$SVCNPORT | egrep work;echo "-----";sleep 1;done

<html><body><h1>It works!</h1></body></html>

-----

<html><body><h1>It works!</h1></body></html>

-----

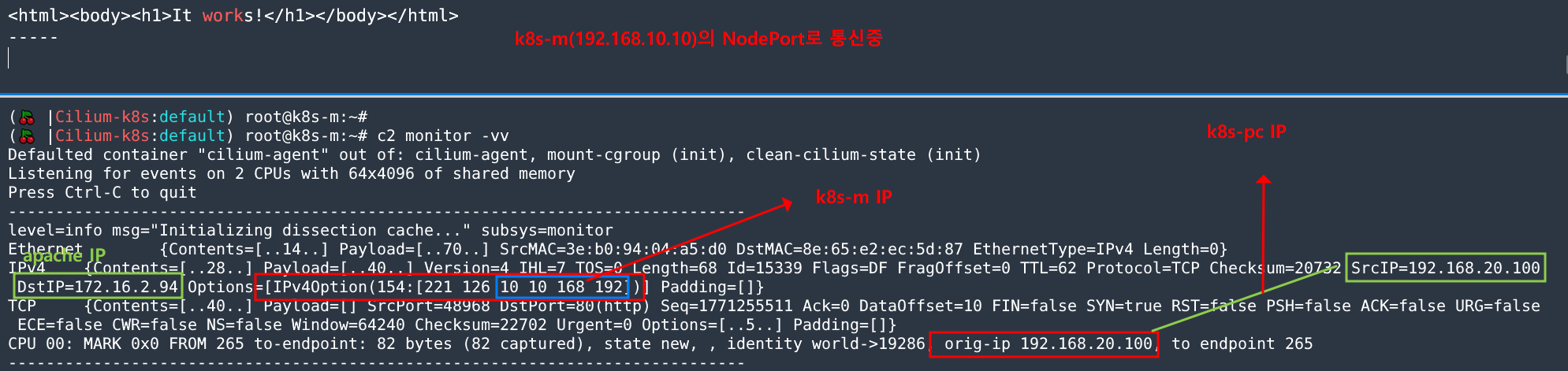

...

- cilium monitor

c2 monitor -vv

Defaulted container "cilium-agent" out of: cilium-agent, mount-cgroup (init), clean-cilium-state (init)

Listening for events on 2 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

------------------------------------------------------------------------------

level=info msg="Initializing dissection cache..." subsys=monitor

Ethernet {Contents=[..14..] Payload=[..70..] SrcMAC=3e:b0:94:04:a5:d0 DstMAC=8e:65:e2:ec:5d:87 EthernetType=IPv4 Length=0}

IPv4 {Contents=[..28..] Payload=[..40..] Version=4 IHL=7 TOS=0 Length=68 Id=15339 Flags=DF FragOffset=0 TTL=62 Protocol=TCP Checksum=20732 SrcIP=192.168.20.100 DstIP=172.16.2.94 Options=[IPv4Option(154:[221 126 10 10 168 192])] Padding=[]}

TCP {Contents=[..40..] Payload=[] SrcPort=48968 DstPort=80(http) Seq=1771255511 Ack=0 DataOffset=10 FIN=false SYN=true RST=false PSH=false ACK=false URG=false ECE=false CWR=false NS=false Window=64240 Checksum=22702 Urgent=0 Options=[..5..] Padding=[]}

CPU 00: MARK 0x0 FROM 265 to-endpoint: 82 bytes (82 captured), state new, , identity world->19286, orig-ip 192.168.20.100, to endpoint 265

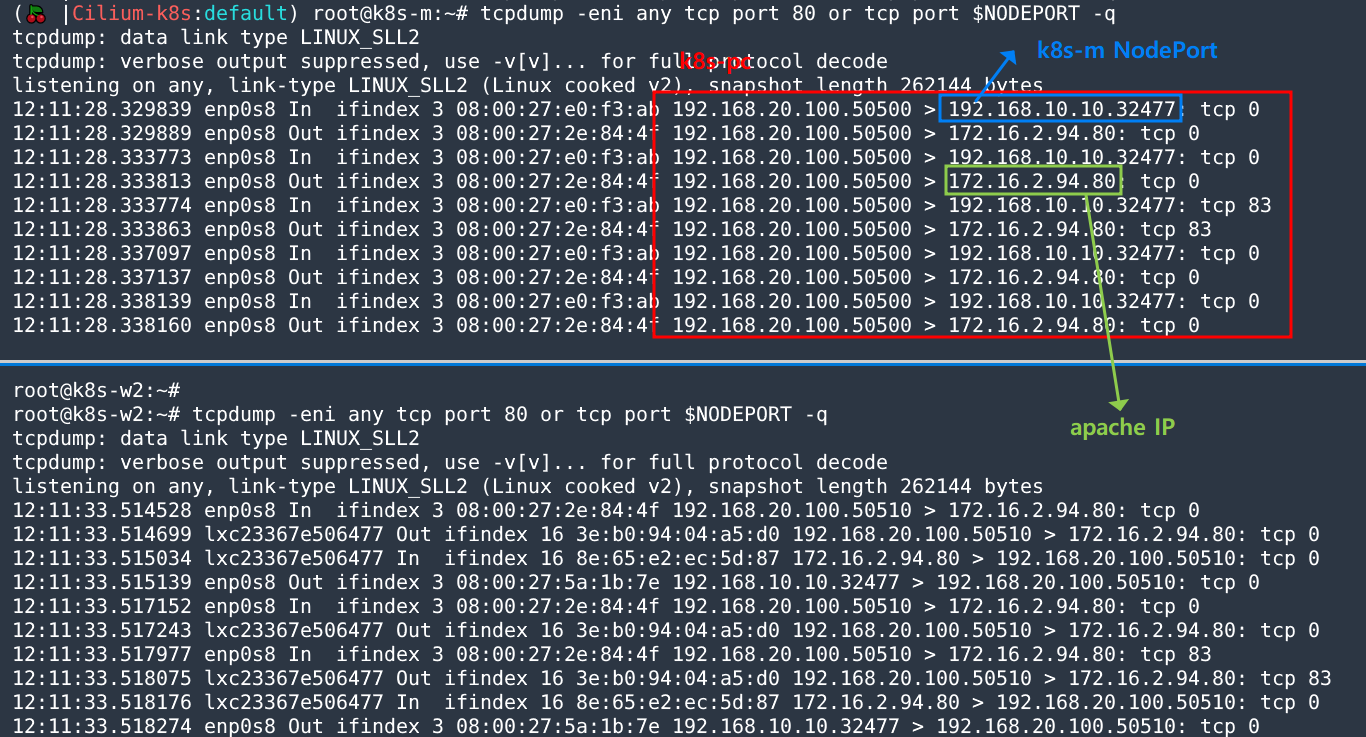
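The `IPv4Option(154:[221 126 10 10 168 192])` field in the monitor output above is where the DSR information travels with the packet. The following is a minimal sketch for reading it, assuming (from this capture only, not from the Cilium source) that the first two bytes hold the service port and the last four hold the node IP, each stored low byte first. `decode_dsr_option` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Decode the six data bytes of IPv4 option 154 as printed by cilium monitor.
# Assumption: bytes 1-2 are the port and bytes 3-6 the IPv4 address,
# both stored low byte first, as they appear in this capture.
decode_dsr_option() {
  local port_lo=$1 port_hi=$2 o4=$3 o3=$4 o2=$5 o1=$6
  local port=$(( (port_hi << 8) | port_lo ))
  echo "${o1}.${o2}.${o3}.${o4}:${port}"
}

# Values copied from the monitor output: [221 126 10 10 168 192]
decode_dsr_option 221 126 10 10 168 192
```

With these inputs the function prints `192.168.10.10:32477`, which lines up with the node IP and the NodePort used in the curl loop.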

- Packet dump on the master node

(🍒 |Cilium-k8s:default) root@k8s-m:~# NODEPORT=$(kubectl get svc apache -o jsonpath='{.spec.ports[0].nodePort}')

(🍒 |Cilium-k8s:default) root@k8s-m:~# echo $NODEPORT

32477

(🍒 |Cilium-k8s:default) root@k8s-m:~# tcpdump -eni any tcp port 80 or tcp port $NODEPORT -q

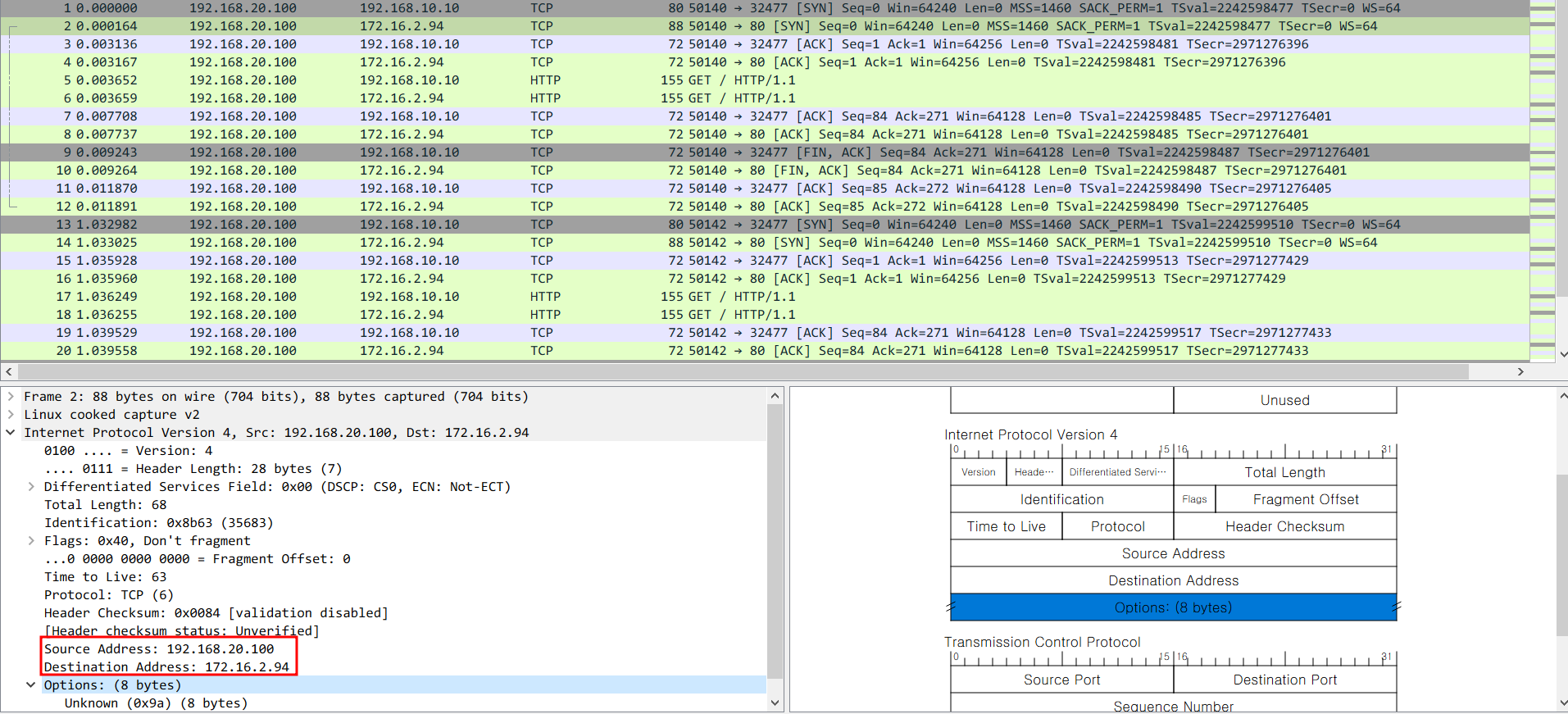

- Wireshark

- Packet dump on the worker node (w2, where the pod is running)

root@k8s-w2:~# NODEPORT=$(kubectl get svc apache -o jsonpath='{.spec.ports[0].nodePort}')

root@k8s-w2:~# tcpdump -eni any tcp port 80 or tcp port $NODEPORT -q -v -X

tcpdump: data link type LINUX_SLL2

tcpdump: listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes

12:18:02.782818 enp0s8 In ifindex 3 08:00:27:2e:84:4f (tos 0x0, ttl 63, id 34517, offset 0, flags [DF], proto TCP (6), length 68, options (unknown 154))

192.168.20.100.51308 > 172.16.2.94.80: tcp 0

0x0000: 4700 0044 86d5 4000 3f06 0512 c0a8 1464 G..D..@.?......d

0x0010: ac10 025e 9a08 dd7e 0a0a a8c0 c86c 0050 ...^...~.....l.P

0x0020: dcaf e1db 0000 0000 a002 faf0 c6ca 0000 ................

0x0030: 0204 05b4 0402 080a 85b3 f5ce 0000 0000 ................

0x0040: 0103 0306 ....

# Extract the information from the hex bytes of the option header

root@k8s-w2:~# Hex='0a 0a a8 c0'

root@k8s-w2:~# for perIP in $Hex; do echo $((0x${perIP})); done | xargs echo | sed 's/ /./g'

10.10.168.192 => Read it in reverse! It is the IP of the node the client first reached (192.168.10.10)

2) Lab 9

Reference: 9. Cilium Egress Gateway (beta)

- Check the web servers on k8s-pc and k8s-rtr

# Test access to the web server

curl localhost

<h1>Web Server : k8s-pc</h1>

# Follow the web access log in real time

tail -f /var/log/apache2/access.log

192.168.10.10 - - [01/Dec/2021:23:35:24 +0000] "GET / HTTP/1.1" 200 264 "-" "curl/7.74.0"

- Create the pods and test web access

# Create the pods

kubectl create -f https://raw.githubusercontent.com/cilium/cilium/1.11.2/examples/kubernetes-dns/dns-sw-app.yaml

# Check the pods

kubectl get pod -o wide

# Access nginx from the pod >> which client IP does the k8s-pc web server log?

kubectl exec mediabot -- curl -s 192.168.20.100

kubectl exec mediabot -- curl -s 192.168.10.254

kubectl exec mediabot -- curl -s wttr.in/seoul # (optional) test access to external web servers

kubectl exec mediabot -- curl -s wttr.in/seoul?format=3

kubectl exec mediabot -- curl -s wttr.in/seoul?format=4

kubectl exec mediabot -- curl -s ipinfo.io

kubectl exec mediabot -- curl -s ipinfo.io/city

kubectl exec mediabot -- curl -s ipinfo.io/loc

kubectl exec mediabot -- curl -s ipinfo.io/org

----------------------------------------------------------

# Logs on k8s-rtr and k8s-pc

root@k8s-rtr:~# tail -f /var/log/apache2/access.log

172.16.2.35 - - [05/Mar/2022:12:55:51 +0000] "GET / HTTP/1.1" 200 257 "-" "curl/7.52.1"

root@k8s-pc:~# tail -f /var/log/apache2/access.log

127.0.0.1 - - [05/Mar/2022:12:52:00 +0000] "GET / HTTP/1.1" 200 256 "-" "curl/7.74.0"

Configure Egress IPs

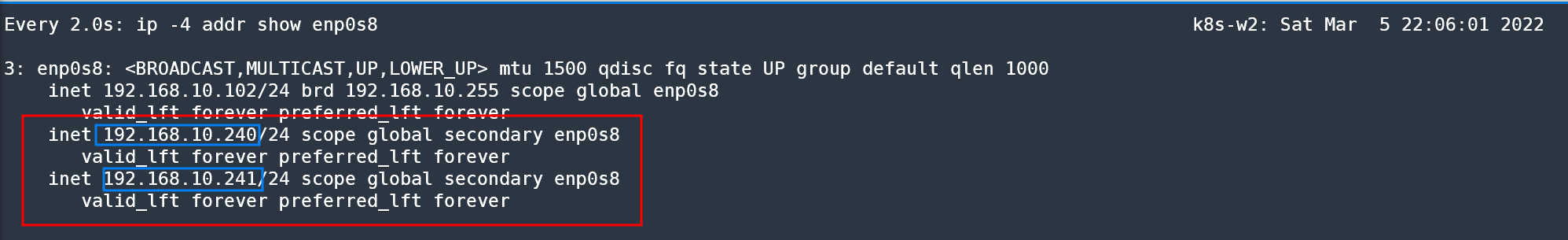

- Start watching the interface (on the worker node where the Egress GW is created)

# k8s-w1 , k8s-w2

watch -d 'ip -4 addr show enp0s8'

- Deploy the Egress GW and set the EGRESS_IPS range, e.g. 192.168.10.240/24 192.168.10.241/24

cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: "egress-ip-assign"
  labels:
    name: "egress-ip-assign"
spec:
  replicas: 1
  selector:
    matchLabels:
      name: "egress-ip-assign"
  template:
    metadata:
      labels:
        name: "egress-ip-assign"
    spec:
      affinity:
        # the following pod affinity ensures that the "egress-ip-assign" pod
        # runs on the same node as the mediabot pod
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: class
                operator: In
                values:
                - mediabot
              - key: org
                operator: In
                values:
                - empire
            topologyKey: "kubernetes.io/hostname"
      hostNetwork: true
      containers:
      - name: egress-ip
        image: docker.io/library/busybox:1.31.1
        command: ["/bin/sh","-c"]
        securityContext:
          privileged: true
        env:
        - name: EGRESS_IPS
          value: "192.168.10.240/24 192.168.10.241/24"
        args:
        - "for i in \$EGRESS_IPS; do ip address add \$i dev enp0s8; done; sleep 10000000"
        lifecycle:
          preStop:
            exec:
              command:
              - "/bin/sh"
              - "-c"
              - "for i in \$EGRESS_IPS; do ip address del \$i dev enp0s8; done"
EOF

# After applying, check on k8s-w2

ip -c -4 addr show enp0s8

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

inet 192.168.10.102/24 brd 192.168.10.255 scope global enp0s8

valid_lft forever preferred_lft forever

inet 192.168.10.240/24 scope global secondary enp0s8

valid_lft forever preferred_lft forever

inet 192.168.10.241/24 scope global secondary enp0s8

valid_lft forever preferred_lft forever

- Confirmed that the egress-only secondary IPs were created!

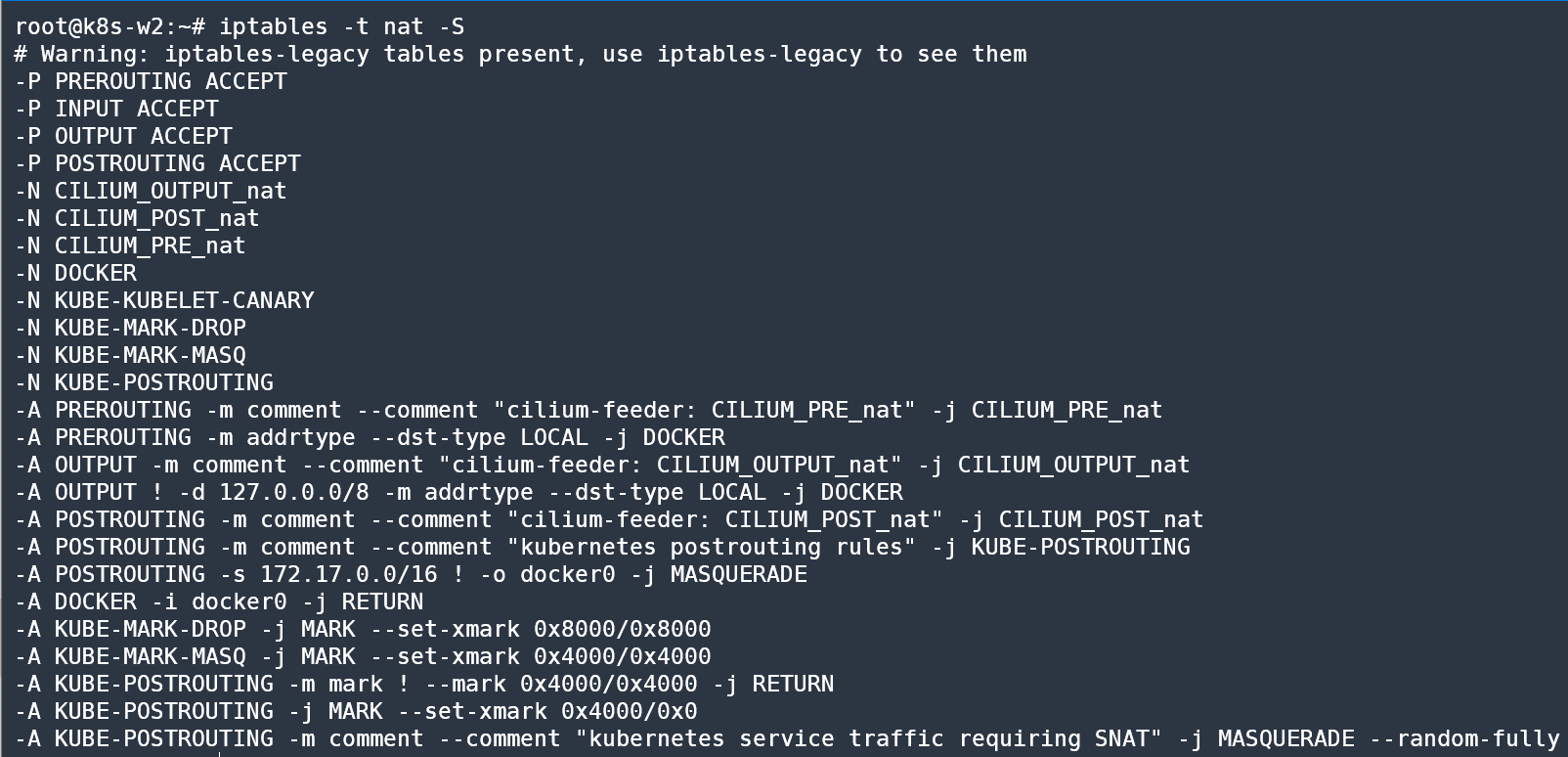

- Confirmed that no chain/rule was added!
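To see what the container's startup and preStop loops do without touching a real interface, here is a dry-run sketch that prints the `ip address` commands instead of executing them. `plan_egress_ips` is a hypothetical helper introduced only for illustration; the interface name and addresses are the ones used in the Deployment above.

```shell
#!/usr/bin/env bash
# Dry run of the loops in the egress-ip-assign container:
# print each command rather than running it.
plan_egress_ips() {
  local action=$1 dev=$2 cidr
  shift 2
  for cidr in "$@"; do
    echo "ip address ${action} ${cidr} dev ${dev}"
  done
}

EGRESS_IPS="192.168.10.240/24 192.168.10.241/24"
# Word splitting of $EGRESS_IPS is intentional, mirroring the original loop.
plan_egress_ips add enp0s8 $EGRESS_IPS   # what the container runs at startup
plan_egress_ips del enp0s8 $EGRESS_IPS   # what the preStop hook runs
```

Each address in `EGRESS_IPS` produces one `add` line and one `del` line, which matches the two secondary IPs seen on enp0s8.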

Create Egress NAT Policy

- When talking to the destination 192.168.20.100/32, use 192.168.10.240 as the egressSourceIP!

Note that setting destinationCIDRs: to 0.0.0.0/0 matches every network.
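The destination match described above can be sketched in plain shell arithmetic. This only illustrates CIDR matching and is not how Cilium implements it; `ip_to_int` and `in_cidr` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed when IP $1 falls inside CIDR $2 (for example 192.168.20.100/32).
in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

for dst in 192.168.20.100 192.168.10.254; do
  if in_cidr "$dst" 192.168.20.100/32; then
    echo "$dst matches: source becomes 192.168.10.240"
  else
    echo "$dst does not match: pod IP is kept"
  fi
done
```

With a `/0` prefix the mask is zero, so `in_cidr <any address> 0.0.0.0/0` always succeeds, which is why 0.0.0.0/0 covers every destination.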

cat <<EOF | kubectl apply -f -

apiVersion: cilium.io/v2alpha1

kind: CiliumEgressNATPolicy

metadata:

name: egress-sample

spec:

egress:

- podSelector:

matchLabels:

**org: empire

class: mediabot**

io.kubernetes.pod.namespace: default

destinationCIDRs:

- 192.168.20.100/32

egressSourceIP: "192.168.10.240"

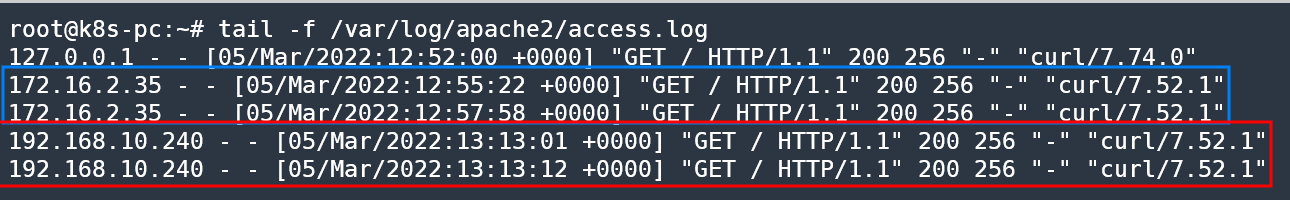

EOF통신 확인 및 추가 테스트

# Access nginx from the pod: the IP specified by the policy shows up as the source

kubectl exec mediabot -- curl -s 192.168.20.100

## Check the log

root@k8s-pc:~# tail -f /var/log/apache2/access.log

127.0.0.1 - - [05/Mar/2022:12:52:00 +0000] "GET / HTTP/1.1" 200 256 "-" "curl/7.74.0"

172.16.2.35 - - [05/Mar/2022:12:55:22 +0000] "GET / HTTP/1.1" 200 256 "-" "curl/7.52.1"

172.16.2.35 - - [05/Mar/2022:12:57:58 +0000] "GET / HTTP/1.1" 200 256 "-" "curl/7.52.1"

192.168.10.240 - - [05/Mar/2022:13:13:01 +0000] "GET / HTTP/1.1" 200 256 "-" "curl/7.52.1"

# Access nginx from the pod: this destination is not covered by the policy, so the pod IP shows up

kubectl exec mediabot -- curl -s 192.168.10.254

<h1>Web Server : k8s-rtr</h1>

## Check the log

root@k8s-rtr:~# tail -f /var/log/apache2/access.log

172.16.2.35 - - [05/Mar/2022:12:55:51 +0000] "GET / HTTP/1.1" 200 257 "-" "curl/7.52.1"

172.16.2.35 - - [05/Mar/2022:12:58:01 +0000] "GET / HTTP/1.1" 200 257 "-" "curl/7.52.1"

# Access external sites from the pod

kubectl exec mediabot -- curl -s wttr.in/busan

(omitted)

kubectl exec mediabot -- curl -s wttr.in/busan?format=4

busan: ☀️ 🌡️+7°C 🌬️↘13km/h

- Confirmed that k8s-pc logs the request with the egress IP as the source!

- Test with a newly added pod (to be updated later)

(snip)

- Delete the lab resources

kubectl delete ciliumegressnatpolicies egress-sample

kubectl delete deploy egress-ip-assign

kubectl delete pod --all

3) Lab 10

Reference: 10. BGP for LoadBalancer VIP

- Cilium BGP (beta) configuration - link

cat <<EOF | kubectl create -f -
apiVersion: v1
kind: ConfigMap
metadata:
  name: bgp-config
  namespace: kube-system
data:
  config.yaml: |
    peers:
      - peer-address: 192.168.10.254
        peer-asn: 64513
        my-asn: 64512
    address-pools:
      - name: default
        protocol: bgp
        avoid-buggy-ips: true
        addresses:
          - 172.20.1.0/24
EOF

- Other settings

# Check the config

kubectl get cm -n kube-system bgp-config

3. Solution

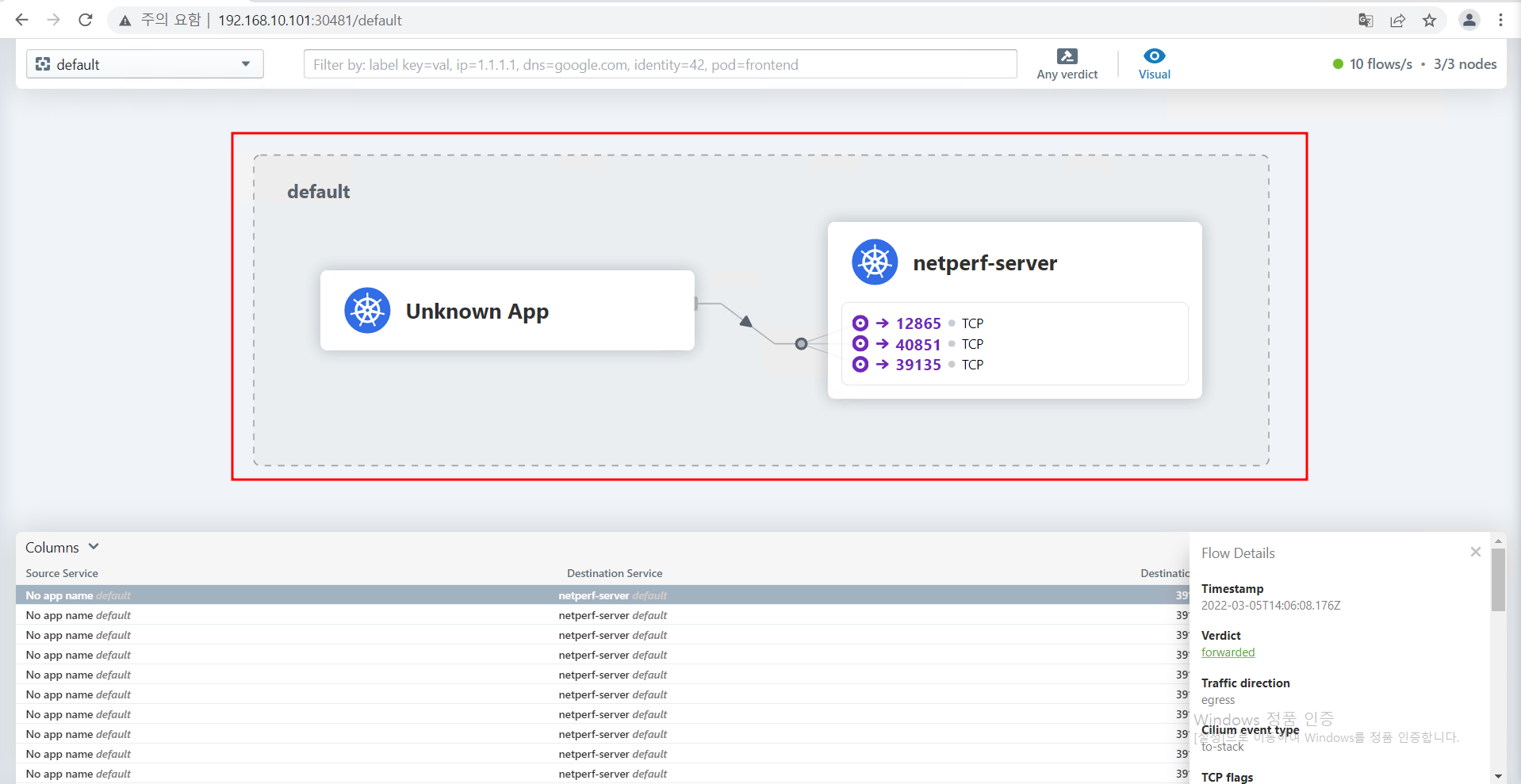

Reference: 8. Bandwidth Manager (beta)

Setup and verification

# Check that it is applied: see which interfaces the egress bandwidth limitation operates on

cilium config view | grep bandwidth

enable-bandwidth-manager true

c0 status | grep BandwidthManager

BandwidthManager: EDT with BPF [enp0s3, enp0s8]

# Check the tc qdisc on the interfaces: quite a few options are added compared with before the setting

tc qdisc

(omitted)

tc qdisc show dev enp0s8

qdisc fq 8018: root refcnt 2 limit 10000p flow_limit 100p buckets 1024 orphan_mask 1023 quantum 3028b initial_quantum 15140b low_rate_threshold 550Kbit refill_delay 40ms timer_slack 10us

qdisc clsact ffff: parent ffff:fff1

Operation and verification

# Create server and client pods to generate test traffic

cat <<EOF | kubectl create -f -
---
apiVersion: v1
kind: Pod
metadata:
  annotations:
    # Limits egress bandwidth to 10Mbit/s.
    kubernetes.io/egress-bandwidth: "10M"
  labels:
    # This pod will act as server.
    app.kubernetes.io/name: netperf-server
  name: netperf-server
spec:
  containers:
  - name: netperf
    image: cilium/netperf
    ports:
    - containerPort: 12865
---
apiVersion: v1
kind: Pod
metadata:
  # This Pod will act as client.
  name: netperf-client
spec:
  affinity:
    # Prevents the client from being scheduled to the
    # same node as the server.
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app.kubernetes.io/name
            operator: In
            values:
            - netperf-server
        topologyKey: kubernetes.io/hostname
  containers:
  - name: netperf
    args:
    - sleep
    - infinity
    image: cilium/netperf
EOF

# Check the egress BW limit

kubectl describe pod netperf-server | grep Annotations:

Annotations: kubernetes.io/egress-bandwidth: 10M

# Check the limit from the cilium pod on the node that hosts the bandwidth-limited pod

c2 bpf bandwidth list

Defaulted container "cilium-agent" out of: cilium-agent, mount-cgroup (init), clean-cilium-state (init)

IDENTITY EGRESS BANDWIDTH (BitsPerSec)

4094 10M

c2 endpoint list

Defaulted container "cilium-agent" out of: cilium-agent, mount-cgroup (init), clean-cilium-state (init)

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

16 Disabled Disabled 4 reserved:health 172.16.2.58 ready

1141 Disabled Disabled 1 reserved:host ready

4094 Disabled Disabled 1709 k8s:app.kubernetes.io/name=netperf-server 172.16.2.176 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

# Generate traffic >> check in Hubble UI

# egress traffic of the netperf-server Pod has been limited to 10Mbit per second.

NETPERF_SERVER_IP=$(kubectl get pod netperf-server -o jsonpath='{.status.podIP}')

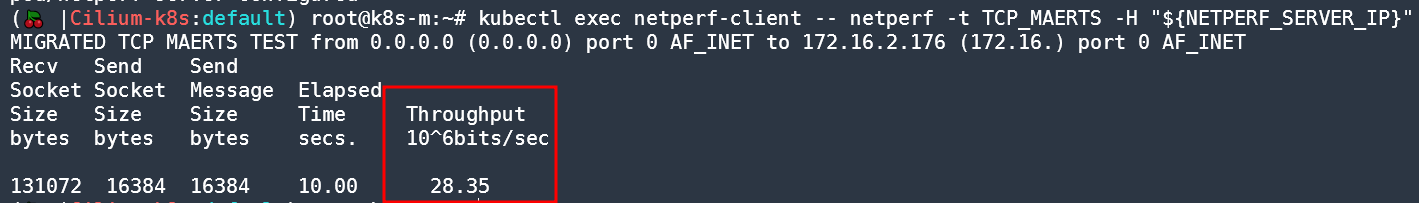

kubectl exec netperf-client -- netperf -t TCP_MAERTS -H "${NETPERF_SERVER_IP}"

MIGRATED TCP MAERTS TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 172.16.2.176 (172.16.) port 0 AF_INET

Recv Send Send

Socket Socket Message Elapsed

Size Size Size Time Throughput

bytes bytes bytes secs. 10^6bits/sec

131072 16384 16384 10.00 9.54

- Now let's change the limit to 30M!

kubectl get pod netperf-server -o json | sed -e 's|10M|30M|g' | kubectl apply -f -

Warning: resource pods/netperf-server is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

pod/netperf-server configured

(🍒 |Cilium-k8s:default) root@k8s-m:~# kubectl exec netperf-client -- netperf -t TCP_MAERTS -H "${NETPERF_SERVER_IP}"

MIGRATED TCP MAERTS TEST from 0.0.0.0 (0.0.0.0) port 0 AF_INET to 172.16.2.176 (172.16.) port 0 AF_INET

Recv Send Send

Socket Socket Message Elapsed

Size Size Size Time Throughput

bytes bytes bytes secs. 10^6bits/sec

131072 16384 16384 10.00 28.35 # Confirmed: throughput is capped at about 28 Mbit/s, just under the 30M limit!
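To put the two netperf results side by side, the sketch below expresses each measured throughput as a percentage of the configured limit. `pct_of_limit` is a hypothetical helper; both arguments are in Mbit/s, matching netperf's 10^6 bits/sec column and the values of the `kubernetes.io/egress-bandwidth` annotation used above.

```shell
#!/usr/bin/env bash
# Print measured throughput as a percentage of the configured limit.
# Both arguments are in Mbit/s (netperf reports 10^6 bits/sec).
pct_of_limit() {
  awk -v got="$1" -v limit="$2" 'BEGIN { printf "%.1f\n", got / limit * 100 }'
}

pct_of_limit 9.54 10    # run with the 10M annotation
pct_of_limit 28.35 30   # run with the 30M annotation
```

This prints `95.4` and `94.5`, so in both runs the server's egress traffic sits just below the cap.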