Summary

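First, with this assignment the EKS study has come to an end. I'm happy to have completed the study and collected all the cute Go characters. A lot happened along the way (I accidentally exposed an AWS token and even got an email about it...). I had no hands-on EKS experience before, but I learned a great deal of theory and picked it up by following along. Going forward, I plan to review the material, work through the labs and theory I had postponed, and post about them in more detail.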

I learned a lot thanks to Gasida and the CloudNet team, who prepared the study all this time. Thank you so much!!

If you're interested in the study, you can find out more from CloudNet!

This week's topic is Automation. We do hands-on labs with ACK and Flux, and very briefly go through ArgoCD.

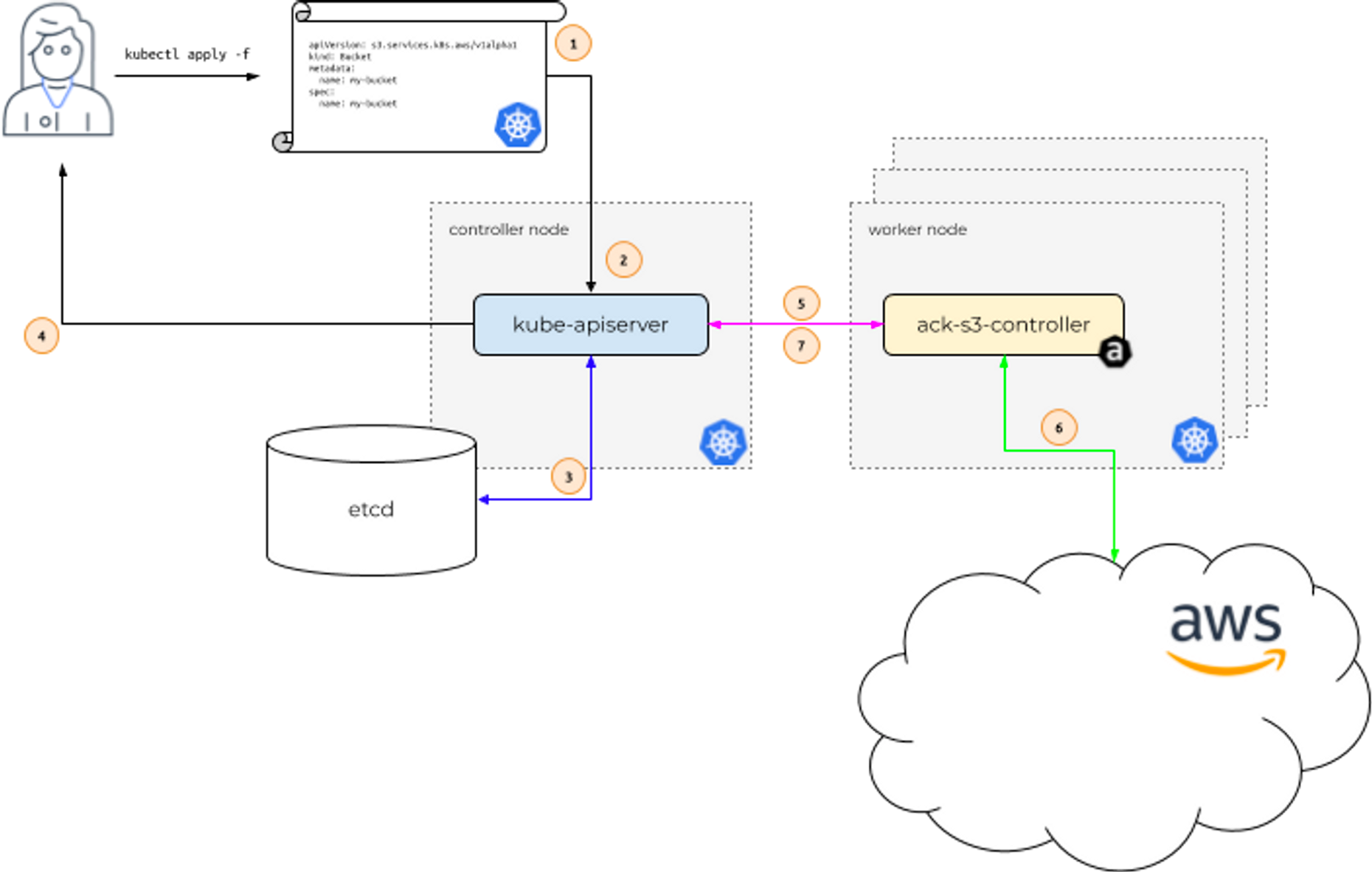

AWS Controllers for Kubernetes (ACK)

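An open-source tool built by AWS; it seems like a good fit for developers who aren't very familiar with the cloud. However, not many service controllers appear to have been officially released yet.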

See the official documentation for details!

ACK: lets you define and use AWS service resources directly from Kubernetes.

- S3 hands-on lab

First, download the ACK S3 controller chart.

$export SERVICE=s3

$export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

$helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

Pulled: public.ecr.aws/aws-controllers-k8s/s3-chart:1.0.4

Digest: sha256:9cd8574c78c7f226a2520a423a447afd02366a3ec87b5d1ba910992da3e264b8
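The RELEASE_VERSION line above takes the latest release JSON from GitHub, keeps the line containing "tag_name", extracts the fourth double-quote-delimited field, and drops the leading "v". Here is a minimal sketch of just that parsing step, run against a hand-written sample payload instead of the live API. The helper name `extract_release_version` and the sample JSON are my own illustration, not part of the study material.

```shell
# Hypothetical helper: the same grep/cut pipeline as above, reading JSON on stdin.
extract_release_version() {
  grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-
}

# Trimmed, illustrative payload shaped like a pretty-printed "latest release" response.
sample_response='{
  "url": "https://api.github.com/repos/aws-controllers-k8s/s3-controller/releases/1",
  "tag_name": "v1.0.4",
  "name": "v1.0.4"
}'

# The tag line splits on double quotes into: indent, tag_name, ": ", v1.0.4
printf '%s\n' "$sample_response" | extract_release_version   # prints: 1.0.4
```

The pipeline relies on the response being pretty-printed with one key per line, so it is a quick convenience for a lab rather than robust JSON parsing.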

$tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz

s3-chart/Chart.yaml

s3-chart/values.yaml

s3-chart/values.schema.json

s3-chart/templates/NOTES.txt

s3-chart/templates/_helpers.tpl

s3-chart/templates/cluster-role-binding.yaml

s3-chart/templates/cluster-role-controller.yaml

s3-chart/templates/deployment.yaml

s3-chart/templates/metrics-service.yaml

s3-chart/templates/role-reader.yaml

s3-chart/templates/role-writer.yaml

s3-chart/templates/service-account.yaml

s3-chart/crds/s3.services.k8s.aws_buckets.yaml

s3-chart/crds/services.k8s.aws_adoptedresources.yaml

s3-chart/crds/services.k8s.aws_fieldexports.yaml

$tree ~/$SERVICE-chart

/root/s3-chart

├── Chart.yaml

├── crds

│ ├── s3.services.k8s.aws_buckets.yaml

│ ├── services.k8s.aws_adoptedresources.yaml

│ └── services.k8s.aws_fieldexports.yaml

├── templates

│ ├── cluster-role-binding.yaml

│ ├── cluster-role-controller.yaml

│ ├── deployment.yaml

│ ├── _helpers.tpl

│ ├── metrics-service.yaml

│ ├── NOTES.txt

│ ├── role-reader.yaml

│ ├── role-writer.yaml

│ └── service-account.yaml

├── values.schema.json

└── values.yaml

2 directories, 15 files

Deploy the downloaded Helm chart!

$export ACK_SYSTEM_NAMESPACE=ack-system

$export AWS_REGION=ap-northeast-2

$helm install --create-namespace -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

NAME: ack-s3-controller

LAST DEPLOYED: Tue Jun 6 15:41:29 2023

NAMESPACE: ack-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

s3-chart has been installed.

This chart deploys "public.ecr.aws/aws-controllers-k8s/s3-controller:1.0.4".

Check its status by running:

kubectl --namespace ack-system get pods -l "app.kubernetes.io/instance=ack-s3-controller"

You are now able to create Amazon Simple Storage Service (S3) resources!

The controller is running in "cluster" mode.

The controller is configured to manage AWS resources in region: "ap-northeast-2"

Visit https://aws-controllers-k8s.github.io/community/reference/ for an API

reference of all the resources that can be created using this controller.

For more information on the AWS Controllers for Kubernetes (ACK) project, visit:

https://aws-controllers-k8s.github.io/community/

Now, verify the deployment.

$helm list --namespace $ACK_SYSTEM_NAMESPACE

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

ack-s3-controller ack-system 1 2023-06-06 15:41:29.434398105 +0900 KST deployed s3-chart-1.0.4 1.0.4

$kubectl -n ack-system get pods

NAME READY STATUS RESTARTS AGE

ack-s3-controller-s3-chart-7c55c6657d-2dl4x 0/1 ContainerCreating 0 8s

$kubectl get crd | grep $SERVICE

buckets.s3.services.k8s.aws 2023-06-06T06:41:27Z

$kubectl get all -n ack-system

NAME READY STATUS RESTARTS AGE

pod/ack-s3-controller-s3-chart-7c55c6657d-2dl4x 1/1 Running 0 15s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ack-s3-controller-s3-chart 1/1 1 1 15s

NAME DESIRED CURRENT READY AGE

replicaset.apps/ack-s3-controller-s3-chart-7c55c6657d 1 1 1 15s

$kubectl describe sa -n ack-system ack-s3-controller

Name: ack-s3-controller

Namespace: ack-system

Labels: app.kubernetes.io/instance=ack-s3-controller

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=s3-chart

app.kubernetes.io/version=1.0.4

helm.sh/chart=s3-chart-1.0.4

k8s-app=s3-chart

Annotations: meta.helm.sh/release-name: ack-s3-controller

meta.helm.sh/release-namespace: ack-system

Image pull secrets: <none>

Mountable secrets: <none>

Tokens: <none>

Events: <none>

$echo $ACK_SYSTEM_NAMESPACE

ack-system

$echo $RELEASE_VERSION

1.0.4

IRSA setup - S3 Full Access

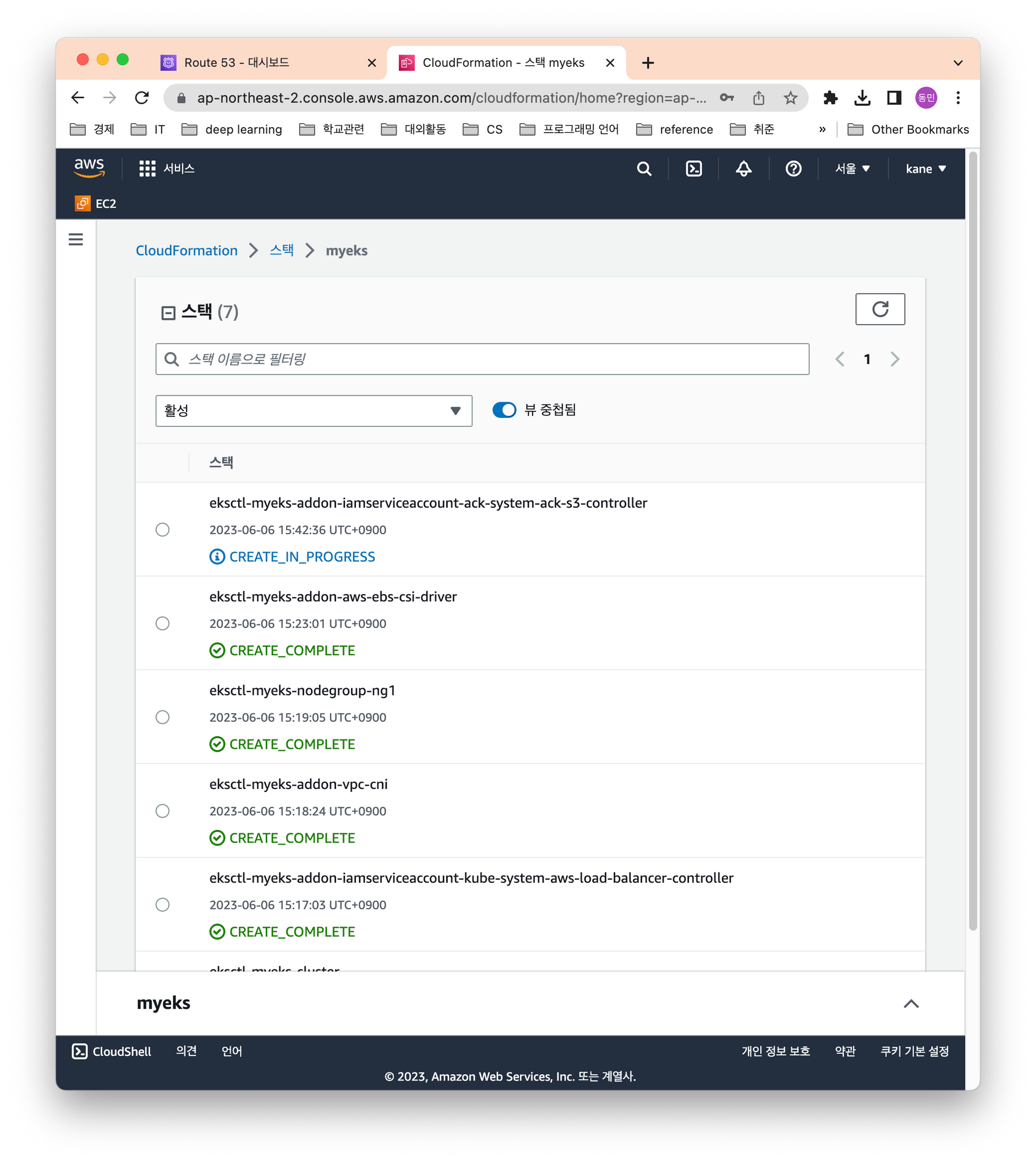

Without permissions, the pod cannot access AWS S3. Use eksctl to attach the policy to the ack-s3-controller service account.

$eksctl create iamserviceaccount \

> --name ack-$SERVICE-controller \

> --namespace ack-system \

> --cluster $CLUSTER_NAME \

> --attach-policy-arn $(aws iam list-policies --query 'Policies[?PolicyName==`AmazonS3FullAccess`].Arn' --output text) \

> --override-existing-serviceaccounts --approve

2023-06-06 15:42:36 [ℹ] 1 existing iamserviceaccount(s) (kube-system/aws-load-balancer-controller) will be excluded

2023-06-06 15:42:36 [ℹ] 1 iamserviceaccount (ack-system/ack-s3-controller) was included (based on the include/exclude rules)

2023-06-06 15:42:36 [!] metadata of serviceaccounts that exist in Kubernetes will be updated, as --override-existing-serviceaccounts was set

2023-06-06 15:42:36 [ℹ] 1 task: {

2 sequential sub-tasks: {

create IAM role for serviceaccount "ack-system/ack-s3-controller",

create serviceaccount "ack-system/ack-s3-controller",

} }

2023-06-06 15:42:36 [ℹ] building iamserviceaccount stack "eksctl-myeks-addon-iamserviceaccount-ack-system-ack-s3-controller"

2023-06-06 15:42:36 [ℹ] deploying stack "eksctl-myeks-addon-iamserviceaccount-ack-system-ack-s3-controller"

2023-06-06 15:42:36 [ℹ] waiting for CloudFormation stack "eksctl-myeks-addon-iamserviceaccount-ack-system-ack-s3-controller"

2023-06-06 15:43:06 [ℹ] waiting for CloudFormation stack "eksctl-myeks-addon-iamserviceaccount-ack-system-ack-s3-controller"

2023-06-06 15:43:59 [ℹ] waiting for CloudFormation stack "eksctl-myeks-addon-iamserviceaccount-ack-system-ack-s3-controller"

2023-06-06 15:43:59 [ℹ] serviceaccount "ack-system/ack-s3-controller" already exists

2023-06-06 15:43:59 [ℹ] updated serviceaccount "ack-system/ack-s3-controller"

You can also confirm in the web console that the IAM service account was created.

Check the details in the terminal.

# Verify creation

$eksctl get iamserviceaccount --cluster $CLUSTER_NAME

NAMESPACE NAME ROLE ARN

ack-system ack-s3-controller arn:aws:iam::011116120544:role/eksctl-myeks-addon-iamserviceaccount-ack-sys-Role1-CGT73XW0JNSS

kube-system aws-load-balancer-controller arn:aws:iam::011116120544:role/eksctl-myeks-addon-iamserviceaccount-kube-sy-Role1-1OBUCIYN8PY2F

$k get sa -n ack-system

NAME SECRETS AGE

ack-s3-controller 0 3m39s

default 0 3m39s

$kubectl get sa -n ack-system

NAME SECRETS AGE

ack-s3-controller 0 4m12s

default 0 4m12s

$kubectl describe sa ack-$SERVICE-controller -n ack-system

Name: ack-s3-controller

Namespace: ack-system

Labels: app.kubernetes.io/instance=ack-s3-controller

app.kubernetes.io/managed-by=eksctl

app.kubernetes.io/name=s3-chart

app.kubernetes.io/version=1.0.4

helm.sh/chart=s3-chart-1.0.4

k8s-app=s3-chart

Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::011116120544:role/eksctl-myeks-addon-iamserviceaccount-ack-sys-Role1-CGT73XW0JNSS

meta.helm.sh/release-name: ack-s3-controller

meta.helm.sh/release-namespace: ack-system

Image pull secrets: <none>

Mountable secrets: <none>

Tokens: <none>

Events: <none>

$kubectl -n ack-system rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart

deployment.apps/ack-s3-controller-s3-chart restarted

$kubectl describe pod -n ack-system -l k8s-app=$SERVICE-chart

Name: ack-s3-controller-s3-chart-5d5bd5d57c-sfbb5

Namespace: ack-system

Priority: 0

Service Account: ack-s3-controller

Node: ip-192-168-2-99.ap-northeast-2.compute.internal/192.168.2.99

Start Time: Tue, 06 Jun 2023 15:46:23 +0900

Labels: app.kubernetes.io/instance=ack-s3-controller

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=s3-chart

k8s-app=s3-chart

pod-template-hash=5d5bd5d57c

Annotations: kubectl.kubernetes.io/restartedAt: 2023-06-06T15:46:23+09:00

kubernetes.io/psp: eks.privileged

seccomp.security.alpha.kubernetes.io/pod: runtime/default

Status: Running

SeccompProfile: RuntimeDefault

IP: 192.168.2.89

IPs:

IP: 192.168.2.89

Controlled By: ReplicaSet/ack-s3-controller-s3-chart-5d5bd5d57c

Containers:

controller:

Container ID: containerd://1af33fc46028ce439b7b7dc809267b3bbf84f22ba2e7681f6929ddc0b68063b8

Image: public.ecr.aws/aws-controllers-k8s/s3-controller:1.0.4

Image ID: public.ecr.aws/aws-controllers-k8s/s3-controller@sha256:c103185184be38ec4d113d99c06889d4facd4025cd5238f141ebbcc0bad8b155

Port: 8080/TCP

Host Port: 0/TCP

Command:

./bin/controller

Args:

--aws-region

$(AWS_REGION)

--aws-endpoint-url

$(AWS_ENDPOINT_URL)

--enable-development-logging

$(ACK_ENABLE_DEVELOPMENT_LOGGING)

--log-level

$(ACK_LOG_LEVEL)

--resource-tags

$(ACK_RESOURCE_TAGS)

--watch-namespace

$(ACK_WATCH_NAMESPACE)

--deletion-policy

$(DELETION_POLICY)

State: Running

Started: Tue, 06 Jun 2023 15:46:28 +0900

Ready: True

Restart Count: 0

Limits:

cpu: 100m

memory: 128Mi

Requests:

cpu: 50m

memory: 64Mi

Environment:

ACK_SYSTEM_NAMESPACE: ack-system (v1:metadata.namespace)

AWS_REGION: ap-northeast-2

AWS_ENDPOINT_URL:

ACK_WATCH_NAMESPACE:

DELETION_POLICY: delete

ACK_ENABLE_DEVELOPMENT_LOGGING: false

ACK_LOG_LEVEL: info

ACK_RESOURCE_TAGS: services.k8s.aws/controller-version=%CONTROLLER_SERVICE%-%CONTROLLER_VERSION%,services.k8s.aws/namespace=%K8S_NAMESPACE%

AWS_STS_REGIONAL_ENDPOINTS: regional

AWS_ROLE_ARN: arn:aws:iam::011116120544:role/eksctl-myeks-addon-iamserviceaccount-ack-sys-Role1-CGT73XW0JNSS

AWS_WEB_IDENTITY_TOKEN_FILE: /var/run/secrets/eks.amazonaws.com/serviceaccount/token

Mounts:

/var/run/secrets/eks.amazonaws.com/serviceaccount from aws-iam-token (ro)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-kpfz4 (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

aws-iam-token:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 86400

kube-api-access-kpfz4:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: Burstable

Node-Selectors: kubernetes.io/os=linux

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 8s default-scheduler Successfully assigned ack-system/ack-s3-controller-s3-chart-5d5bd5d57c-sfbb5 to ip-192-168-2-99.ap-northeast-2.compute.internal

Normal Pulled 5s kubelet Container image "public.ecr.aws/aws-controllers-k8s/s3-controller:1.0.4" already present on machine

Normal Created 5s kubelet Created container controller

Normal Started 3s kubelet Started container controller
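The rollout restart above matters because the IRSA variables (AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE) are injected when a pod is created, so a pod that started before the service account was annotated will not have them. Below is a small sketch of a check for both variables. The helper name `has_irsa_env` is hypothetical, and the sample input is an excerpt copied from the describe output above.

```shell
# Hypothetical helper: succeeds only if both IRSA variables appear in the text on stdin.
has_irsa_env() {
  local text
  text=$(cat)
  printf '%s\n' "$text" | grep -q 'AWS_ROLE_ARN:' &&
    printf '%s\n' "$text" | grep -q 'AWS_WEB_IDENTITY_TOKEN_FILE:'
}

# Excerpt from the describe output above, used as sample input.
sample_env='AWS_ROLE_ARN: arn:aws:iam::011116120544:role/eksctl-myeks-addon-iamserviceaccount-ack-sys-Role1-CGT73XW0JNSS
AWS_WEB_IDENTITY_TOKEN_FILE: /var/run/secrets/eks.amazonaws.com/serviceaccount/token'

if printf '%s\n' "$sample_env" | has_irsa_env; then
  echo "IRSA environment injected"
else
  echo "IRSA environment missing; restart the pod after annotating the service account"
fi
```

Against a live cluster, the same helper could presumably be fed from `kubectl describe pod -n ack-system -l k8s-app=$SERVICE-chart`.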

Now, run the S3 tests.

- Create a bucket

$export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)

$export BUCKET_NAME=my-ack-s3-bucket-$AWS_ACCOUNT_ID

$read -r -d '' BUCKET_MANIFEST <<EOF

> apiVersion: s3.services.k8s.aws/v1alpha1

> kind: Bucket

> metadata:

>   name: $BUCKET_NAME

> spec:

>   name: $BUCKET_NAME

> EOF

$echo "${BUCKET_MANIFEST}" > bucket.yaml

$cat bucket.yaml | yh

apiVersion: s3.services.k8s.aws/v1alpha1

kind: Bucket

metadata:

  name: my-ack-s3-bucket-011116120544

spec:

  name: my-ack-s3-bucket-011116120544
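The manifest above is built in two steps: `read -r -d ''` fills a variable from the heredoc, then `echo` writes it to a file. As a sketch, the same file can be written in one step with `cat` and a heredoc. One practical difference is that `read -r -d ''` returns a non-zero status when it reaches the end of the heredoc, which matters in scripts that run with `set -e`. The account ID below is a placeholder I made up, and the file goes to a scratch directory so nothing in the current directory is touched.

```shell
# Placeholder account ID for illustration; the lab derives it from `aws sts get-caller-identity`.
AWS_ACCOUNT_ID=123456789012
BUCKET_NAME="my-ack-s3-bucket-${AWS_ACCOUNT_ID}"

# Scratch location for the generated manifest.
MANIFEST="$(mktemp -d)/bucket.yaml"

# The unquoted EOF delimiter lets $BUCKET_NAME expand inside the heredoc.
cat <<EOF > "$MANIFEST"
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: $BUCKET_NAME
spec:
  name: $BUCKET_NAME
EOF

cat "$MANIFEST"
```

The resulting file would then be passed to `kubectl create -f` in the same way as bucket.yaml.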

$aws s3 ls

$kubectl create -f bucket.yaml

bucket.s3.services.k8s.aws/my-ack-s3-bucket-011116120544 created

# Verify creation

$aws s3 ls

2023-06-06 15:48:02 my-ack-s3-bucket-011116120544

$kubectl get buckets

NAME AGE

my-ack-s3-bucket-011116120544 10s

$kubectl describe bucket/$BUCKET_NAME | head -6

Name: my-ack-s3-bucket-011116120544

Namespace: default

Labels: <none>

Annotations: <none>

API Version: s3.services.k8s.aws/v1alpha1

Kind: Bucket

$aws s3 ls | grep $BUCKET_NAME

2023-06-06 15:48:02 my-ack-s3-bucket-011116120544

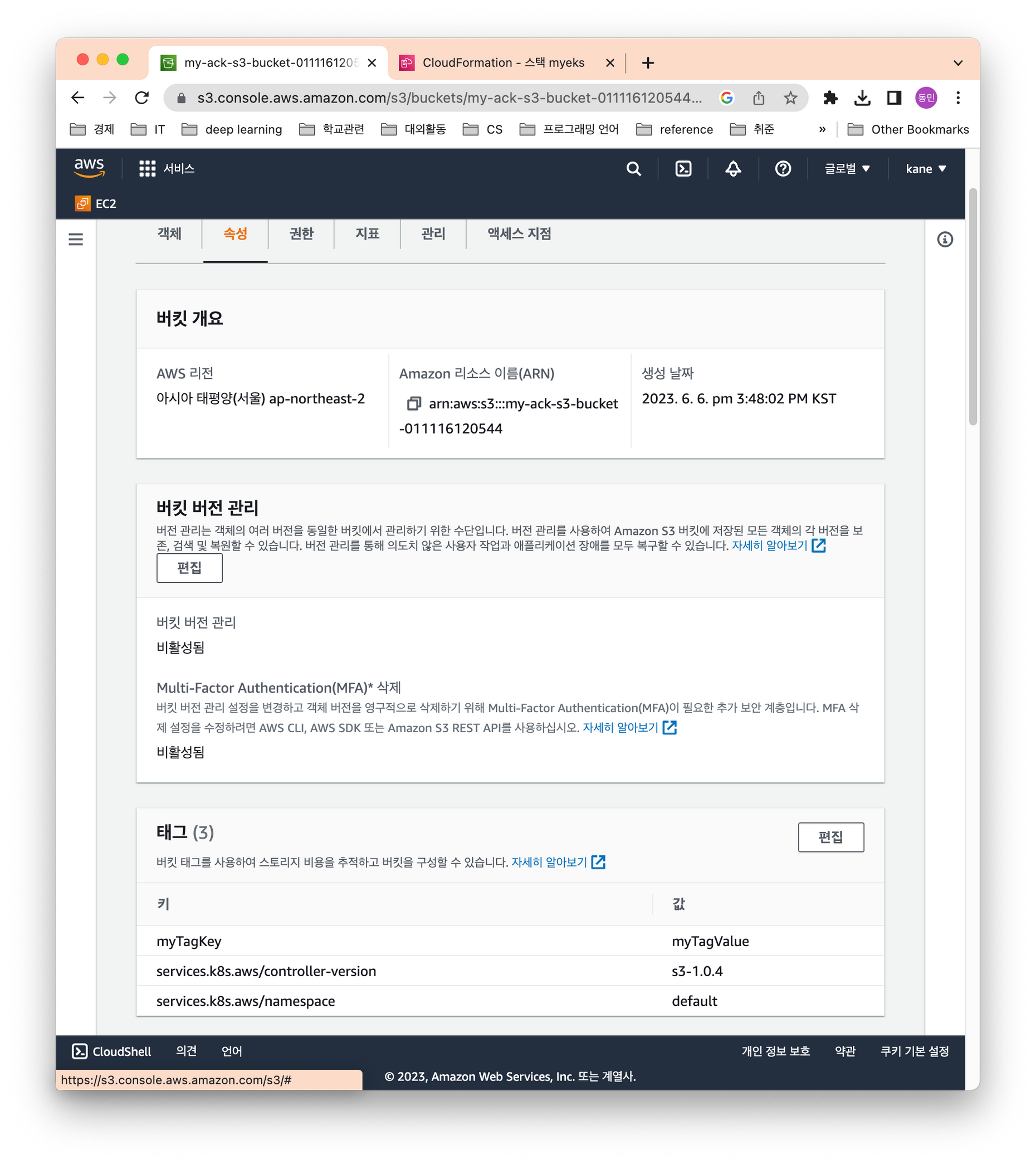

- Update the S3 bucket: add tag information

$read -r -d '' BUCKET_MANIFEST <<EOF

> apiVersion: s3.services.k8s.aws/v1alpha1

> kind: Bucket

> metadata:

>   name: $BUCKET_NAME

> spec:

>   name: $BUCKET_NAME

>   tagging:

>     tagSet:

>     - key: myTagKey

>       value: myTagValue

> EOF

$echo "${BUCKET_MANIFEST}" > bucket.yaml

# Apply the S3 bucket update: the warning below only says the missing annotation will be patched automatically, so it can be ignored!

$kubectl apply -f bucket.yaml

Warning: resource buckets/my-ack-s3-bucket-011116120544 is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

bucket.s3.services.k8s.aws/my-ack-s3-bucket-011116120544 configured

Check the result of the S3 bucket update.

$kubectl describe bucket/$BUCKET_NAME | grep Spec: -A5

Spec:

Name: my-ack-s3-bucket-011116120544

Tagging:

Tag Set:

Key: myTagKey

Value: myTagValue

The changed value can also be confirmed in the console.

Now finish the lab and delete the resources.

$kubectl delete -f bucket.yaml

bucket.s3.services.k8s.aws "my-ack-s3-bucket-011116120544" deleted

$kubectl get bucket/$BUCKET_NAME

Error from server (NotFound): buckets.s3.services.k8s.aws "my-ack-s3-bucket-011116120544" not found

$export SERVICE=s3

$helm uninstall -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller

release "ack-s3-controller" uninstalled

$kubectl delete -f ~/$SERVICE-chart/crds

customresourcedefinition.apiextensions.k8s.io "buckets.s3.services.k8s.aws" deleted

customresourcedefinition.apiextensions.k8s.io "adoptedresources.services.k8s.aws" deleted

customresourcedefinition.apiextensions.k8s.io "fieldexports.services.k8s.aws" deleted

$eksctl delete iamserviceaccount --cluster myeks --name ack-$SERVICE-controller --namespace ack-system

2023-06-06 15:50:31 [ℹ] 1 iamserviceaccount (ack-system/ack-s3-controller) was included (based on the include/exclude rules)

2023-06-06 15:50:31 [ℹ] 1 task: {

2 sequential sub-tasks: {

delete IAM role for serviceaccount "ack-system/ack-s3-controller" [async],

delete serviceaccount "ack-system/ack-s3-controller",

} }

2023-06-06 15:50:31 [ℹ] will delete stack "eksctl-myeks-addon-iamserviceaccount-ack-system-ack-s3-controller"

2023-06-06 15:50:31 [ℹ] serviceaccount "ack-system/ack-s3-controller" was already deleted

Flux

A GitOps tool similar to ArgoCD. It is specialized for kustomize, and it reportedly can also run Terraform code.

However, it depends on Helm or kustomize.

See Akbun's blog for details.

Install Flux.

$curl -s https://fluxcd.io/install.sh | sudo bash

[INFO] Downloading metadata https://api.github.com/repos/fluxcd/flux2/releases/latest

[INFO] Using 2.0.0-rc.5 as release

[INFO] Downloading hash https://github.com/fluxcd/flux2/releases/download/v2.0.0-rc.5/flux_2.0.0-rc.5_checksums.txt

[INFO] Downloading binary https://github.com/fluxcd/flux2/releases/download/v2.0.0-rc.5/flux_2.0.0-rc.5_linux_amd64.tar.gz

[INFO] Verifying binary download

which: no shasum in (/sbin:/bin:/usr/sbin:/usr/bin)

[INFO] Installing flux to /usr/local/bin/flux

$. <(flux completion bash)

# Verify the installation

$flux --version

flux version 2.0.0-rc.5

Now connect it to GitHub. This requires issuing a GitHub token first.

# Register the GitHub token

$export GITHUB_TOKEN=ghp_mawAkDy...

$export GITHUB_USER=han-03..

# Connect Flux

$flux bootstrap github \

> --owner=$GITHUB_USER \

> --repository=fleet-infra \

> --branch=main \

> --path=./clusters/my-cluster \

> --personal

► connecting to github.com

✔ repository "https://github.com/han/fleet-infra" created

► cloning branch "main" from Git repository "https://github.com/han/fleet-infra.git"

✔ cloned repository

► generating component manifests

# Warning: 'patchesJson6902' is deprecated. Please use 'patches' instead. Run 'kustomize edit fix' to update your Kustomization automatically.

✔ generated component manifests

✔ committed sync manifests to "main" ("061daf49d8f729dba1dd4bc38023e891df92d225")

► pushing component manifests to "https://github.com/han/fleet-infra.git"

► installing components in "flux-system" namespace

✔ installed components

✔ reconciled components

► determining if source secret "flux-system/flux-system" exists

► generating source secret

✔ public key: ecdsa-sha2-nistp384 AAAAE2VjZHNhLXNoYTItbmlzdHAzOD...

✔ configured deploy key "flux-system-main-flux-system-./clusters/my-cluster" for "https://github.com/han/fleet-infra"

► applying source secret "flux-system/flux-system"

✔ reconciled source secret

► generating sync manifests

✔ generated sync manifests

✔ committed sync manifests to "main" ("cb4137dd69c7891da32982d5991deb9ebd901278")

► pushing sync manifests to "https://github.com/han/fleet-infra.git"

► applying sync manifests

✔ reconciled sync configuration

◎ waiting for Kustomization "flux-system/flux-system" to be reconciled

✔ Kustomization reconciled successfully

► confirming components are healthy

✔ helm-controller: deployment ready

✔ kustomize-controller: deployment ready

✔ notification-controller: deployment ready

✔ source-controller: deployment ready

✔ all components are healthy

# Verify the installation

$kubectl get pods -n flux-system

NAME READY STATUS RESTARTS AGE

helm-controller-fbdd59577-dgm6p 1/1 Running 0 24s

kustomize-controller-6b67b54cf8-xvjz4 1/1 Running 0 24s

notification-controller-78f4869c94-8dc52 1/1 Running 0 24s

source-controller-75db64d9f7-jhxmk 1/1 Running 0 24s

$kubectl get-all -n flux-system

NAME NAMESPACE AGE

configmap/kube-root-ca.crt flux-system 29s

endpoints/notification-controller flux-system 26s

endpoints/source-controller flux-system 26s

endpoints/webhook-receiver flux-system 26s

...

$kubectl get crd | grep fluxc

alerts.notification.toolkit.fluxcd.io 2023-06-06T06:58:28Z

buckets.source.toolkit.fluxcd.io 2023-06-06T06:58:28Z

gitrepositories.source.toolkit.fluxcd.io 2023-06-06T06:58:28Z

helmcharts.source.toolkit.fluxcd.io 2023-06-06T06:58:28Z

helmreleases.helm.toolkit.fluxcd.io 2023-06-06T06:58:28Z

helmrepositories.source.toolkit.fluxcd.io 2023-06-06T06:58:28Z

kustomizations.kustomize.toolkit.fluxcd.io 2023-06-06T06:58:28Z

ocirepositories.source.toolkit.fluxcd.io 2023-06-06T06:58:29Z

providers.notification.toolkit.fluxcd.io 2023-06-06T06:58:29Z

receivers.notification.toolkit.fluxcd.io 2023-06-06T06:58:29Z

# Check the repository

$kubectl get gitrepository -n flux-system

NAME URL AGE READY STATUS

flux-system ssh://git@github.com/han/fleet-infra 25s True stored artifact for revision 'main@sha1:cb4137dd69c7891da32982d5991deb9ebd901278'

Install the GitOps tool (Weave GitOps).

$curl --silent --location "https://github.com/weaveworks/weave-gitops/releases/download/v0.24.0/gitops-$(uname)-$(uname -m).tar.gz" | tar xz -C /tmp

$sudo mv /tmp/gitops /usr/local/bin

$gitops version

To improve our product, we would like to collect analytics data. You can read more about what data we collect here: https://docs.gitops.weave.works/docs/feedback-and-telemetry/

Would you like to turn on analytics to help us improve our product: Y

Current Version: 0.24.0

GitCommit: cc1d0e680c55e0aaf5bfa0592a0a454fb2064bc1

BuildTime: 2023-05-24T16:29:14Z

Branch: releases/v0.24.0

$PASSWORD="password"

$gitops create dashboard ww-gitops --password=$PASSWORD

✚ Generating GitOps Dashboard manifests ...

► Creating GitOps Dashboard objects ...

✚ Generating GitOps Dashboard manifests ...

✔ Generated GitOps Dashboard manifests

► Checking for a cluster in the kube config ...

► Checking if Flux is already installed ...

► Getting Flux version ...

✔ Flux &{v2.0.0-rc.5 flux-system} is already installed

► Applying GitOps Dashboard manifests

► Installing the GitOps Dashboard ...

✔ GitOps Dashboard has been installed

► Request reconciliation of dashboard (timeout 3m0s) ...

◎ Waiting for GitOps Dashboard reconciliation

✔ GitOps Dashboard ww-gitops is ready

✔ Installed GitOps Dashboard

$flux -n flux-system get helmrelease

NAME REVISION SUSPENDED READY MESSAGE

ww-gitops 4.0.22 False True Release reconciliation succeeded

$kubectl -n flux-system get pod,svc

NAME READY STATUS RESTARTS AGE

pod/helm-controller-fbdd59577-dgm6p 1/1 Running 0 2m15s

pod/kustomize-controller-6b67b54cf8-xvjz4 1/1 Running 0 2m15s

pod/notification-controller-78f4869c94-8dc52 1/1 Running 0 2m15s

pod/source-controller-75db64d9f7-jhxmk 1/1 Running 0 2m15s

pod/ww-gitops-weave-gitops-66dc44989f-wmmd9 1/1 Running 0 46s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/notification-controller ClusterIP 10.100.0.14 <none> 80/TCP 2m15s

service/source-controller ClusterIP 10.100.20.46 <none> 80/TCP 2m15s

service/webhook-receiver ClusterIP 10.100.76.241 <none> 80/TCP 2m15s

service/ww-gitops-weave-gitops ClusterIP 10.100.101.129 <none> 9001/TCP 46s

# Ingress setup for the ExternalDNS connection

$CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

$echo $CERT_ARN

arn:aws:acm:ap-northeast-2:011116120544:certificate/836b6dfa-0955-4401-a721-ecd8689b6025

$cat <<EOT > gitops-ingress.yaml

> apiVersion: networking.k8s.io/v1

> kind: Ingress

> metadata:

>   name: gitops-ingress

>   annotations:

>     alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

>     alb.ingress.kubernetes.io/group.name: study

>     alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

>     alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

>     alb.ingress.kubernetes.io/scheme: internet-facing

>     alb.ingress.kubernetes.io/ssl-redirect: "443"

>     alb.ingress.kubernetes.io/success-codes: 200-399

>     alb.ingress.kubernetes.io/target-type: ip

> spec:

>   ingressClassName: alb

>   rules:

>   - host: gitops.$MyDomain

>     http:

>       paths:

>       - backend:

>           service:

>             name: ww-gitops-weave-gitops

>             port:

>               number: 9001

>         path: /

>         pathType: Prefix

> EOT

$kubectl apply -f gitops-ingress.yaml -n flux-system

ingress.networking.k8s.io/gitops-ingress created

$kubectl get ingress -n flux-system

NAME CLASS HOSTS ADDRESS PORTS AGE

gitops-ingress alb gitops.dongmin.link myeks-ingress-alb-1372943946.ap-northeast-2.elb.amazonaws.com 80 3s

# Check the GitOps access URL, then open it in a browser to verify

$echo -e "GitOps Web https://gitops.$MyDomain"

GitOps Web https://gitops.dongmin.link

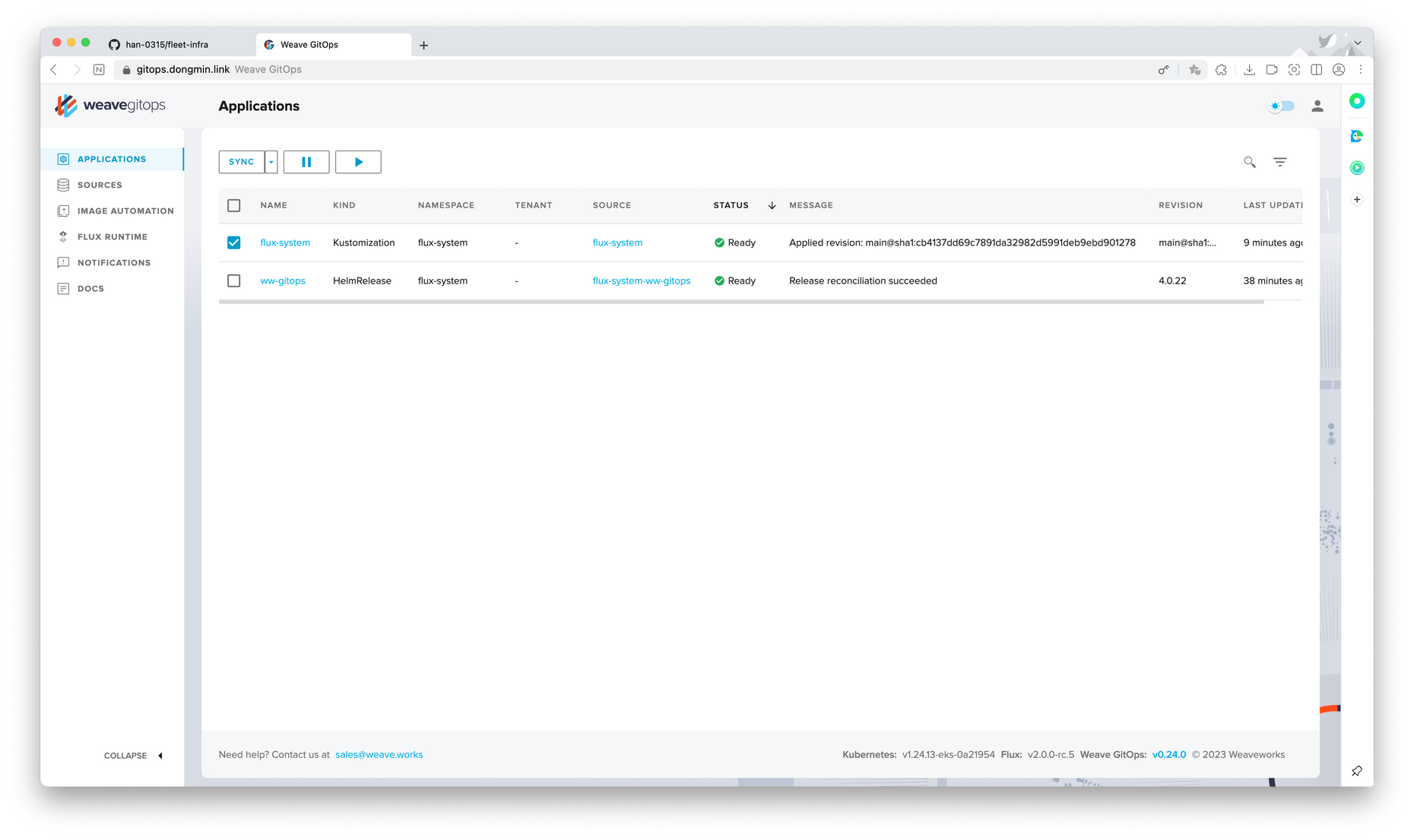

The web UI

How to create a source

Source creation: the types are git, helm, oci, and bucket

flux create source {source type}

Run the test

# Create a git source from the repo prepared by Akbun (Sungwook Choi)

$GITURL="https://github.com/sungwook-practice/fluxcd-test.git"

$flux create source git nginx-example1 --url=$GITURL --branch=main --interval=30s

✚ generating GitRepository source

► applying GitRepository source

✔ GitRepository source created

◎ waiting for GitRepository source reconciliation

✔ GitRepository source reconciliation completed

✔ fetched revision: main@sha1:4478b54cb7a8eaf1ee2665e2b3dd5bcfd55e9da9

$flux get sources git

NAME REVISION SUSPENDED READY MESSAGE

flux-system main@sha1:cb4137dd False True stored artifact for revision 'main@sha1:cb4137dd'

nginx-example1 main@sha1:4478b54c False True stored artifact for revision 'main@sha1:4478b54c'

# Confirms that the source is connected to Akbun's repo

$kubectl -n flux-system get gitrepositories

NAME URL AGE READY STATUS

flux-system ssh://git@github.com/han../fleet-infra 40m True stored artifact for revision 'main@sha1:cb4137dd69c7891da32982d5991deb9ebd901278'

nginx-example1 https://github.com/sungwook-practice/fluxcd-test.git 37s True stored artifact for revision 'main@sha1:4478b54cb7a8eaf1ee2665e2b3dd5bcfd55e9da9'
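The `flux create source git` call above creates a GitRepository object in the cluster. For a GitOps workflow, the same source could instead be kept as a manifest in the fleet-infra repository. The sketch below writes what I would expect that manifest to look like. The `source.toolkit.fluxcd.io/v1` apiVersion is my assumption for this Flux release, so it is worth comparing against what `flux create source git ... --export` prints. The scratch directory and file name are only for illustration.

```shell
# Scratch directory so nothing in the current directory is touched.
workdir=$(mktemp -d)

# Declarative counterpart of the CLI call above; the apiVersion is an assumption.
cat <<'EOF' > "$workdir/nginx-example1-source.yaml"
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: nginx-example1
  namespace: flux-system
spec:
  interval: 30s
  url: https://github.com/sungwook-practice/fluxcd-test.git
  ref:
    branch: main
EOF

cat "$workdir/nginx-example1-source.yaml"
```

The name, URL, branch, and interval are taken directly from the command above; only the file layout is new.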

# Deploy

$flux create kustomization nginx-example1 --target-namespace=default --interval=1m --source=nginx-example1 --path="./nginx" --health-check-timeout=2m

✚ generating Kustomization

► applying Kustomization

✔ Kustomization created

◎ waiting for Kustomization reconciliation

✔ Kustomization nginx-example1 is ready

✔ applied revision main@sha1:4478b54cb7a8eaf1ee2665e2b3dd5bcfd55e9da9

# Verify the deployment

$flux get kustomizations

NAME REVISION SUSPENDED READY MESSAGE

flux-system main@sha1:cb4137dd False True Applied revision: main@sha1:cb4137dd

nginx-example1 main@sha1:4478b54c False True Applied revision: main@sha1:4478b54c

Now delete the Kustomization. Whether the deployed resources are deleted along with it depends on the prune option set when the application was first created.

$flux delete kustomization nginx-example1

Are you sure you want to delete this kustomization: y

► deleting kustomization nginx-example1 in flux-system namespace

✔ kustomization deleted

# Only the Flux Kustomization is deleted; the resources on EKS are not

$flux get kustomizations

NAME REVISION SUSPENDED READY MESSAGE

flux-system main@sha1:cb4137dd False True Applied revision: main@sha1:cb4137dd

$kubectl get pod,svc nginx-example1

NAME READY STATUS RESTARTS AGE

pod/nginx-example1 1/1 Running 0 98s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/nginx-example1 ClusterIP 10.100.61.6 <none> 80/TCP 98s

# Change the option

# Recreate the Flux application with the --prune option set to true

$flux create kustomization nginx-example1 \

> --target-namespace=default \

> --prune=true \

> --interval=1m \

> --source=nginx-example1 \

> --path="./nginx" \

> --health-check-timeout=2m

✚ generating Kustomization

► applying Kustomization

✔ Kustomization created

◎ waiting for Kustomization reconciliation

✔ Kustomization nginx-example1 is ready

✔ applied revision main@sha1:4478b54cb7a8eaf1ee2665e2b3dd5bcfd55e9da9

$flux get kustomizations

NAME REVISION SUSPENDED READY MESSAGE

flux-system main@sha1:cb4137dd False True Applied revision: main@sha1:cb4137dd

nginx-example1 main@sha1:4478b54c False True Applied revision: main@sha1:4478b54c

# This time the resources are deleted as well

$flux delete kustomization nginx-example1

Are you sure you want to delete this kustomization: y

► deleting kustomization nginx-example1 in flux-system namespace

✔ kustomization deleted

$flux get kustomizations

NAME REVISION SUSPENDED READY MESSAGE

flux-system main@sha1:cb4137dd False True Applied revision: main@sha1:cb4137dd

$kubectl get pod,svc nginx-example1

Error from server (NotFound): pods "nginx-example1" not found

Error from server (NotFound): services "nginx-example1" not found
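The only difference between the two runs above is `--prune=true`, which corresponds to the `spec.prune` field of the Kustomization object. Below is a sketch of how the second run might look as a manifest. The `kustomize.toolkit.fluxcd.io/v1` apiVersion is my assumption for this Flux release, and I left out the health-check timeout because I am not sure which field the flag maps to. `flux create kustomization ... --export` should print the real manifest for comparison.

```shell
# Scratch directory so nothing in the current directory is touched.
workdir=$(mktemp -d)

# Declarative sketch of the second (pruning) Kustomization; the apiVersion is an assumption.
cat <<'EOF' > "$workdir/nginx-example1-kustomization.yaml"
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: nginx-example1
  namespace: flux-system
spec:
  interval: 1m
  path: ./nginx
  prune: true
  sourceRef:
    kind: GitRepository
    name: nginx-example1
  targetNamespace: default
EOF

cat "$workdir/nginx-example1-kustomization.yaml"
```

With `prune: true`, deleting the Kustomization also removes the pod and service it applied, which matches the NotFound errors above.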

Wrap up the test and delete the resources.

$flux delete source git nginx-example1

Are you sure you want to delete this source git: y

✔ source git deleted

$flux get sources git

NAME REVISION SUSPENDED READY MESSAGE

flux-system main@sha1:cb4137dd False True stored artifact for revision 'main@sha1:cb4137dd'

$kubectl -n flux-system get gitrepositories

NAME URL AGE READY STATUS

flux-system ssh://git@github.com/han/fleet-infra 44m True stored artifact for revision 'main@sha1:cb4137dd69c7891da32982d5991deb9ebd901278'

ArgoCD

An open-source tool for continuous delivery in Kubernetes GitOps environments. It is one of the CNCF projects.

See GitHub for details.

Here we simply deploy ArgoCD and only go as far as logging in.

# Install ArgoCD

$kubectl create namespace argocd

$kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/ha/install.yaml

$curl -sSL -o argocd-linux-amd64 https://github.com/argoproj/argo-cd/releases/latest/download/argocd-linux-amd64

$sudo install -m 555 argocd-linux-amd64 /usr/local/bin/argocd

$rm argocd-linux-amd64

$kubectl patch svc argocd-server -n argocd -p '{"spec": {"type": "LoadBalancer"}}'

$kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d; echo

4IVTqkP2MhiTdIZo

$kubectl get svc argocd-server -n argocd

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

argocd-server LoadBalancer 10.100.98.251 a677d8882cdab494ebfee894df436abc-367513095.ap-northeast-2.elb.amazonaws.com 80:31331/TCP,443:30926/TCP 29m
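The initial admin password above is read from a Kubernetes Secret, whose `.data` values are stored base64-encoded. That is why the jsonpath output is piped through `base64 -d`. Here is a minimal sketch of the decode step on its own, using a made-up sample value rather than a real credential.

```shell
# Sample value only: base64 of the literal string "password", not a real credential.
encoded='cGFzc3dvcmQ='

# Same decode step as in the kubectl pipeline above, applied to the sample value.
decoded=$(printf '%s' "$encoded" | base64 -d)
echo "$decoded"   # prints: password
```

The decoded value is what goes in as the password for the `admin` user, either in the web UI at the load balancer address or, I believe, with `argocd login <server> --username admin --password <password>`.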