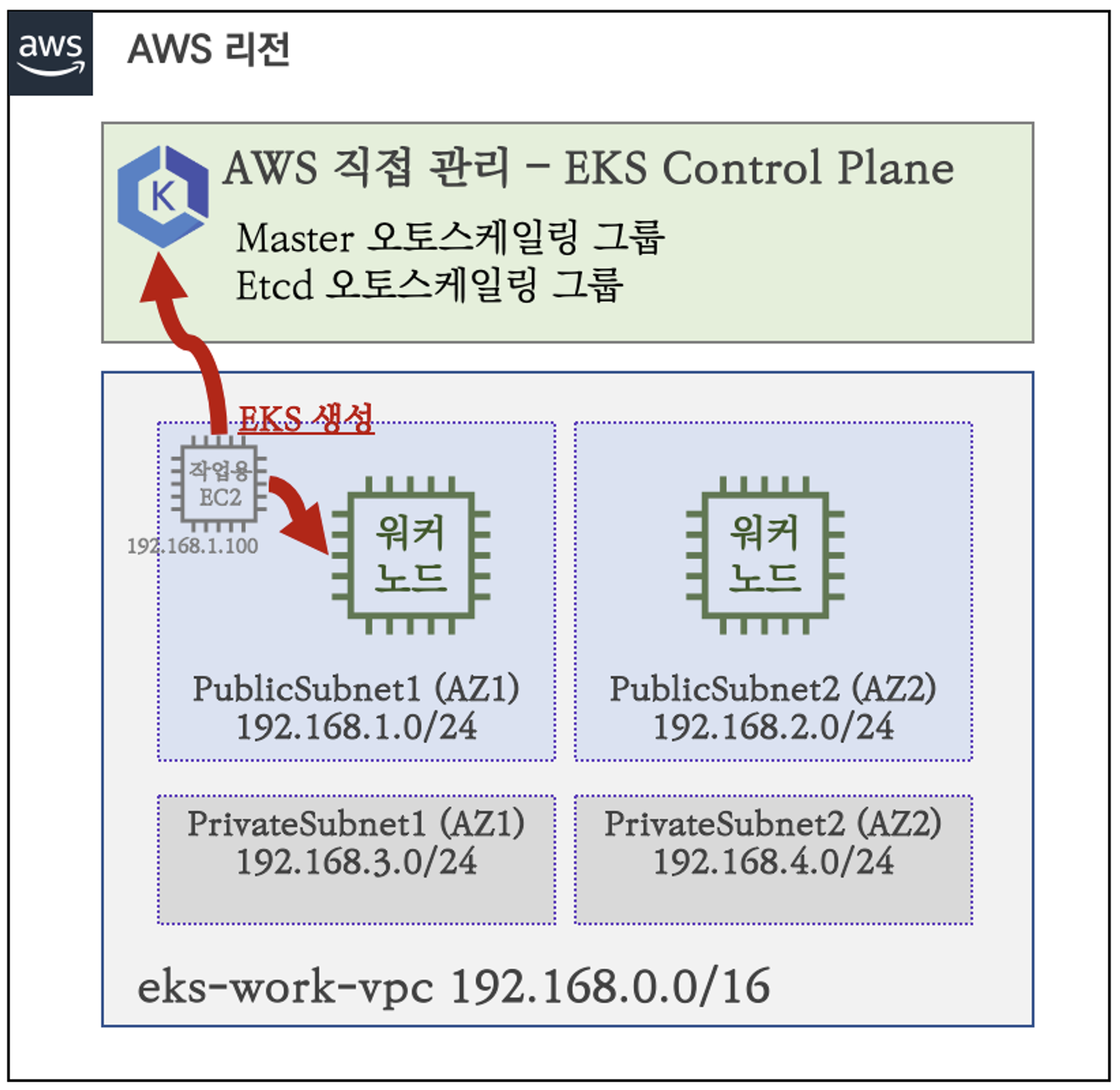

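1. EKS Deployment

- Deploy using the architecture shown in the diagram.

- Deploy a CloudFormation template that sets up the environment for provisioning the EKS cluster.

- The template covers the VPC, Cluster_name, Subnet, and so on.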

# Download the YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/myeks-1week.yaml

# Deploy

# aws cloudformation deploy --template-file ~/Downloads/myeks-1week.yaml --stack-name myeks --parameter-overrides KeyName=<My SSH Keyname> SgIngressSshCidr=<My Home Public IP Address>/32 --region <region>

Example) aws cloudformation deploy --template-file ~/Downloads/myeks-1week.yaml \

--stack-name myeks --parameter-overrides KeyName=kp-gasida SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 --region ap-northeast-2

# Print the EC2 IP after the CloudFormation stack deployment completes

aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[*].OutputValue' --output text

Example) 3.35.137.31

# SSH into the EC2 instance : root / qwe123

Example) ssh root@3.35.137.31

ssh root@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

root@X.Y.Z.A's password: qwe123
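The example deployment passes `$(curl -s ipinfo.io/ip)/32` straight into `SgIngressSshCidr`. If that lookup returns nothing or an error page, the stack would receive a malformed CIDR. The sketch below is one way to guard against that; `to_host_cidr` is a hypothetical helper, not part of the original template or the AWS CLI.

```shell
# Hypothetical helper: validate an IPv4 address and print it as a /32 CIDR.
to_host_cidr() {
  ip=$1
  # Reject empty input, non-digit characters, and misplaced dots.
  case $ip in
    ''|*[!0-9.]*|.*|*.|*..*) return 1 ;;
  esac
  # Split the address on dots into positional parameters.
  old_ifs=$IFS; IFS=.
  set -- $ip
  IFS=$old_ifs
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || return 1
  done
  printf '%s/32\n' "$ip"
}

# Example with the sample IP shown in this post.
to_host_cidr 3.35.137.31

# Possible use with the deploy command (left commented out here):
# MYCIDR=$(to_host_cidr "$(curl -s ipinfo.io/ip)") || { echo "public IP lookup failed" >&2; exit 1; }
# aws cloudformation deploy ... --parameter-overrides KeyName=kp-gasida SgIngressSshCidr=$MYCIDR ...
```

Failing early keeps a bad value from reaching CloudFormation, where the error would surface only after the deployment has started.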

- Connect over SSH to the instance created by the CloudFormation stack.

- Configure credentials and set the variables.

# Check before any credentials are configured

aws ec2 describe-instances

# IAM user credentials : for convenience in this lab, enter the credentials of an IAM user with administrator permissions

aws configure

AWS Access Key ID [None]: AKIA5...

AWS Secret Access Key [None]: CVNa2...

Default region name [None]: ap-northeast-2

Default output format [None]: json

# Verify that the credentials are applied : check the node IPs

aws ec2 describe-instances

# Check the VPC where EKS will be deployed

aws ec2 describe-vpcs --filters "Name=tag:Name,Values=$CLUSTER_NAME-VPC" | jq

aws ec2 describe-vpcs --filters "Name=tag:Name,Values=$CLUSTER_NAME-VPC" | jq '.Vpcs[]'

aws ec2 describe-vpcs --filters "Name=tag:Name,Values=$CLUSTER_NAME-VPC" | jq '.Vpcs[].VpcId'

aws ec2 describe-vpcs --filters "Name=tag:Name,Values=$CLUSTER_NAME-VPC" | jq -r '.Vpcs[].VpcId'

export VPCID=$(aws ec2 describe-vpcs --filters "Name=tag:Name,Values=$CLUSTER_NAME-VPC" | jq -r '.Vpcs[].VpcId')

echo "export VPCID=$VPCID" >> /etc/profile

echo $VPCID

# Check the subnets that belong to the VPC where EKS will be deployed

aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --output json | jq

aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --output yaml

## Check the public subnet IDs

aws ec2 describe-subnets --filters Name=tag:Name,Values="$CLUSTER_NAME-PublicSubnet1" | jq

aws ec2 describe-subnets --filters Name=tag:Name,Values="$CLUSTER_NAME-PublicSubnet1" --query "Subnets[0].[SubnetId]" --output text

export PubSubnet1=$(aws ec2 describe-subnets --filters Name=tag:Name,Values="$CLUSTER_NAME-PublicSubnet1" --query "Subnets[0].[SubnetId]" --output text)

export PubSubnet2=$(aws ec2 describe-subnets --filters Name=tag:Name,Values="$CLUSTER_NAME-PublicSubnet2" --query "Subnets[0].[SubnetId]" --output text)

echo "export PubSubnet1=$PubSubnet1" >> /etc/profile

echo "export PubSubnet2=$PubSubnet2" >> /etc/profile

echo $PubSubnet1

echo $PubSubnet2

- Confirm that the variables were set correctly.

# Check the variables

echo $AWS_DEFAULT_REGION

echo $CLUSTER_NAME

echo $VPCID

echo $PubSubnet1,$PubSubnet2

- Deploy the EKS cluster and the managed node group
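The `eksctl create cluster` command that follows depends on every variable echoed above, and an empty one would only surface as a confusing error partway through. As a minimal sketch, the check can be automated before running it; `require_vars` is a hypothetical helper introduced here for illustration.

```shell
# Hypothetical helper: succeed only if every named variable is set and non-empty.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing or empty: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

if require_vars AWS_DEFAULT_REGION CLUSTER_NAME VPCID PubSubnet1 PubSubnet2; then
  echo "all variables are set"
else
  echo "set the missing variables before running eksctl"
fi
```

The helper reports every missing name in one pass rather than stopping at the first, which saves a round trip when several exports were skipped.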

eksctl create cluster --name $CLUSTER_NAME --region=$AWS_DEFAULT_REGION --nodegroup-name=$CLUSTER_NAME-nodegroup --node-type=t3.medium \

--node-volume-size=30 --vpc-public-subnets "$PubSubnet1,$PubSubnet2" --version 1.28 --ssh-access --external-dns-access --verbose 4

2. A Closer Look at EKS Nodes

Connecting to the nodes over SSH

- Check the node IPs and store the private IPs in variables.

# Commands

aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

kubectl get node --label-columns=topology.kubernetes.io/zone

kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a

kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c

N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath={.items[0].status.addresses[0].address})

N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath={.items[0].status.addresses[0].address})

echo $N1, $N2

echo "export N1=$N1" >> /etc/profile

echo "export N2=$N2" >> /etc/profile

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

-----------------------------------------------------------------------------

| DescribeInstances |

+-----------------------------+----------------+----------------+-----------+

| InstanceName | PrivateIPAdd | PublicIPAdd | Status |

+-----------------------------+----------------+----------------+-----------+

| myeks-myeks-nodegroup-Node | 192.168.2.221 | 13.124.23.199 | running |

| myeks-host | 192.168.1.100 | 43.201.86.192 | running |

| myeks-myeks-nodegroup-Node | 192.168.1.198 | 52.78.117.242 | running |

+-----------------------------+----------------+----------------+-----------+

(leeeuijoo@myeks:N/A) [root@myeks-host ~]#

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl get node --label-columns=topology.kubernetes.io/zone

NAME STATUS ROLES AGE VERSION ZONE

ip-192-168-1-198.ap-northeast-2.compute.internal Ready <none> 11m v1.28.5-eks-5e0fdde ap-northeast-2a

ip-192-168-2-221.ap-northeast-2.compute.internal Ready <none> 11m v1.28.5-eks-5e0fdde ap-northeast-2c

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a

NAME STATUS ROLES AGE VERSION ZONE

ip-192-168-1-198.ap-northeast-2.compute.internal Ready <none> 11m v1.28.5-eks-5e0fdde ap-northeast-2a

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c

NAME STATUS ROLES AGE VERSION ZONE

ip-192-168-2-221.ap-northeast-2.compute.internal Ready <none> 11m v1.28.5-eks-5e0fdde ap-northeast-2c

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath={.items[0].status.addresses[0].address})

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath={.items[0].status.addresses[0].address})

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# echo $N1, $N2

192.168.1.198, 192.168.2.221

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# echo "export N1=$N1" >> /etc/profile

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# echo "export N2=$N2" >> /etc/profile

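(leeeuijoo@myeks:N/A) [root@myeks-host ~]#

- Ping test from eksctl-host to the node IPs or the CoreDNS pod IPs

- The ping does not get through.

- This is because the bastion host has not yet been allowed in the node security group.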

ping -c 1 $N1

ping -c 1 $N2

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# ping -c 1 $N1

PING 192.168.1.198 (192.168.1.198) 56(84) bytes of data.

--- 192.168.1.198 ping statistics ---

1 packets transmitted, 0 received, 100% packet loss, time 0ms

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# ping -c 1 $N2

PING 192.168.2.221 (192.168.2.221) 56(84) bytes of data.

- Look up the node security group ID and add a rule to it so that eksctl-host can reach the nodes (and pods).

- The rule adds the private IP of the current bastion host.

# Check the node security group

aws ec2 describe-security-groups --filters Name=group-name,Values=*nodegroup* --query "SecurityGroups[*].[GroupId]" --output text

NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=*nodegroup* --query "SecurityGroups[*].[GroupId]" --output text)

echo $NGSGID

echo "export NGSGID=$NGSGID" >> /etc/profile

# Add the security group rule

aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.100/32
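The `*nodegroup*` filter happens to match a single security group in this lab, but on an account with several node groups `$NGSGID` could hold more than one ID, and `--group-id` accepts only one. The following is a dry-run sketch under that assumption: `print_ingress_cmds` is a hypothetical helper that only prints one command per group so the result can be reviewed before anything is executed.

```shell
# Hypothetical helper: print one authorize command per security group ID.
# Usage: print_ingress_cmds <cidr> <sg-id> [<sg-id> ...]
print_ingress_cmds() {
  cidr=$1
  shift
  for sg in "$@"; do
    printf "aws ec2 authorize-security-group-ingress --group-id %s --protocol '-1' --cidr %s\n" "$sg" "$cidr"
  done
}

# Example with the bastion IP and the node group security group ID seen in this post.
print_ingress_cmds 192.168.1.100/32 sg-0a6273614729e7dad

# With the variable from above, left unquoted on purpose so each ID becomes its own argument:
# print_ingress_cmds 192.168.1.100/32 $NGSGID
```

Once the printed commands look right, they can be pasted into the shell or piped to `sh`.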

- Ping check

- ICMP now works as expected.

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# ping -c 2 $N1

PING 192.168.1.198 (192.168.1.198) 56(84) bytes of data.

64 bytes from 192.168.1.198: icmp_seq=1 ttl=255 time=3.18 ms

64 bytes from 192.168.1.198: icmp_seq=2 ttl=255 time=0.248 ms

--- 192.168.1.198 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.248/1.717/3.186/1.469 ms

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# ping -c 2 $N2

PING 192.168.2.221 (192.168.2.221) 56(84) bytes of data.

64 bytes from 192.168.2.221: icmp_seq=1 ttl=255 time=1.66 ms

64 bytes from 192.168.2.221: icmp_seq=2 ttl=255 time=1.02 ms

--- 192.168.2.221 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 1.025/1.346/1.668/0.323 ms

- Connect to the worker nodes

ssh -i ~/.ssh/id_rsa ec2-user@$N1 hostname

ssh -i ~/.ssh/id_rsa ec2-user@$N2 hostname

ssh ec2-user@$N1

ssh ec2-user@$N2

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# ssh ec2-user@$N1

Last login: Tue Feb 27 23:46:45 2024 from 52.94.122.139

, #_

~\_ ####_ Amazon Linux 2

~~ \_#####\

~~ \###| AL2 End of Life is 2025-06-30.

~~ \#/ ___

~~ V~' '->

~~~ / A newer version of Amazon Linux is available!

~~._. _/

_/ _/ Amazon Linux 2023, GA and supported until 2028-03-15.

_/m/' https://aws.amazon.com/linux/amazon-linux-2023/

10 package(s) needed for security, out of 10 available

Run "sudo yum update" to apply all updates.

[ec2-user@ip-192-168-1-198 ~]$ exit

logout

Connection to 192.168.1.198 closed.

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# ssh ec2-user@$N2

Last login: Tue Feb 27 23:46:45 2024 from 52.94.122.139

, #_

~\_ ####_ Amazon Linux 2

~~ \_#####\

~~ \###| AL2 End of Life is 2025-06-30.

~~ \#/ ___

~~ V~' '->

~~~ / A newer version of Amazon Linux is available!

~~._. _/

_/ _/ Amazon Linux 2023, GA and supported until 2028-03-15.

_/m/' https://aws.amazon.com/linux/amazon-linux-2023/

10 package(s) needed for security, out of 10 available

Run "sudo yum update" to apply all updates.

[ec2-user@ip-192-168-2-221 ~]$ exit

logout

Connection to 192.168.2.221 closed.

Checking node network information

- Confirm that the AWS VPC CNI is in use

kubectl -n kube-system get ds aws-node

kubectl describe daemonset aws-node --namespace kube-system | grep Image | cut -d "/" -f 2

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl -n kube-system get ds aws-node

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

aws-node 2 2 2 2 2 <none> 29m

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl describe daemonset aws-node --namespace kube-system | grep Image | cut -d "/" -f 2

amazon-k8s-cni-init:v1.15.1-eksbuild.1

amazon-k8s-cni:v1.15.1-eksbuild.1

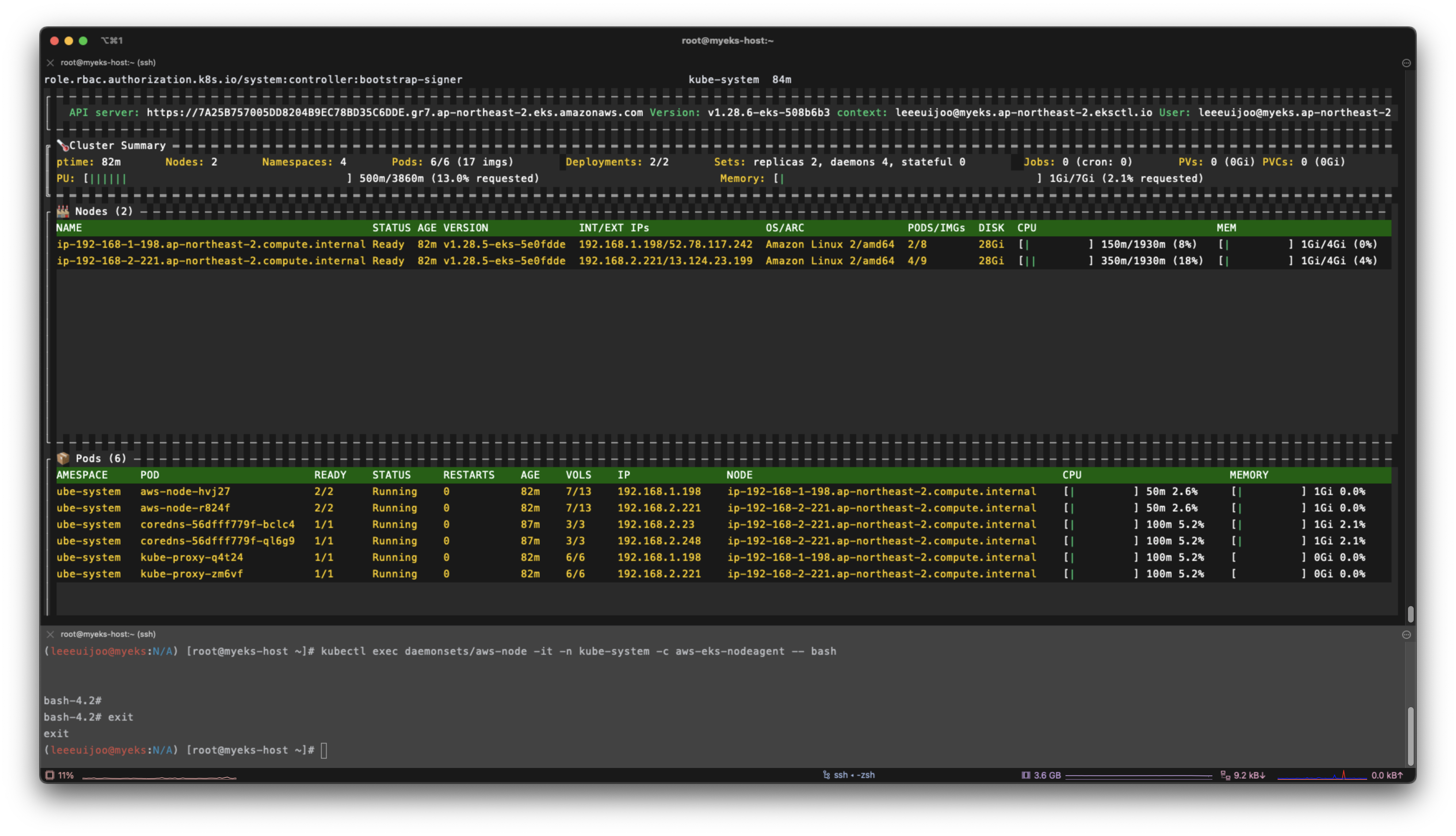

- Check pod IPs and node information

kubectl get pod -n kube-system -o wide

kubectl get pod -n kube-system -l k8s-app=kube-dns -owide

# Check node information

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i hostname; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ip -c addr; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ip -c route; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo iptables -t nat -S; echo; done

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

aws-node-hvj27 2/2 Running 0 26m 192.168.1.198 ip-192-168-1-198.ap-northeast-2.compute.internal <none> <none>

aws-node-r824f 2/2 Running 0 26m 192.168.2.221 ip-192-168-2-221.ap-northeast-2.compute.internal <none> <none>

coredns-56dfff779f-bclc4 1/1 Running 0 31m 192.168.2.23 ip-192-168-2-221.ap-northeast-2.compute.internal <none> <none>

coredns-56dfff779f-ql6g9 1/1 Running 0 31m 192.168.2.248 ip-192-168-2-221.ap-northeast-2.compute.internal <none> <none>

kube-proxy-q4t24 1/1 Running 0 26m 192.168.1.198 ip-192-168-1-198.ap-northeast-2.compute.internal <none> <none>

kube-proxy-zm6vf 1/1 Running 0 26m 192.168.2.221 ip-192-168-2-221.ap-northeast-2.compute.internal <none> <none>

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl get pod -n kube-system -l k8s-app=kube-dns -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-56dfff779f-bclc4 1/1 Running 0 31m 192.168.2.23 ip-192-168-2-221.ap-northeast-2.compute.internal <none> <none>

coredns-56dfff779f-ql6g9 1/1 Running 0 31m 192.168.2.248 ip-192-168-2-221.ap-northeast-2.compute.internal <none> <none>

- Check the node cgroup version
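The `stat -fc %T /sys/fs/cgroup/` check used here prints the filesystem type mounted at that path: `tmpfs` indicates cgroup v1 and `cgroup2fs` indicates cgroup v2. A minimal sketch of that mapping follows; `cgroup_version` is a hypothetical helper name.

```shell
# Hypothetical helper: map the filesystem type of /sys/fs/cgroup to a cgroup version.
cgroup_version() {
  case $1 in
    cgroup2fs) echo v2 ;;
    tmpfs)     echo v1 ;;
    *)         echo unknown; return 1 ;;
  esac
}

# The value both nodes report in this post.
cgroup_version tmpfs

# On a Linux node the live value could be passed in directly (left commented out here):
# cgroup_version "$(stat -fc %T /sys/fs/cgroup/)"
```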

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i stat -fc %T /sys/fs/cgroup/; echo; done

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i stat -fc %T /sys/fs/cgroup/; echo; done

>> node 192.168.1.198 <<

tmpfs

>> node 192.168.2.221 <<

tmpfs

- Check node process information

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo systemctl status kubelet; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo pstree; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i ps afxuwww; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i ps axf |grep /usr/bin/containerd; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i ls /etc/kubernetes/manifests/; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i ls /etc/kubernetes/kubelet/; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i cat /etc/kubernetes/kubelet/kubelet-config.json; echo; done

# Output

## Check the kubelet process

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo systemctl status kubelet; echo; done

>> node 192.168.1.198 <<

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubelet-args.conf, 30-kubelet-extra-args.conf

Active: active (running) since 목 2024-03-07 12:14:58 UTC; 30min ago

Docs: https://github.com/kubernetes/kubernetes

Process: 2923 ExecStartPre=/sbin/iptables -P FORWARD ACCEPT -w 5 (code=exited, status=0/SUCCESS)

Main PID: 2932 (kubelet)

Tasks: 13

Memory: 92.0M

CGroup: /runtime.slice/kubelet.service

└─2932 /usr/bin/kubelet --config /etc/kubernetes/kubelet/kubelet-config.json --kubeconfig /var/lib/kubelet/kubeconfig --container-runtime-endpoint unix:///run/containerd/containerd.sock --image-credential-provider-config /etc/eks/image-credential-provider/config.json --image-credential-provider-bin-dir /etc/eks/image-credential-provider --node-ip=192.168.1.198 --pod-infra-container-image=602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/pause:3.5 --v=2 --hostname-override=ip-192-168-1-198.ap-northeast-2.compute.internal --cloud-provider=external --node-labels=eks.amazonaws.com/sourceLaunchTemplateVersion=1,alpha.eksctl.io/cluster-name=myeks,alpha.eksctl.io/nodegroup-name=myeks-nodegroup,eks.amazonaws.com/nodegroup-image=ami-0568448c1f29d8a06,eks.amazonaws.com/capacityType=ON_DEMAND,eks.amazonaws.com/nodegroup=myeks-nodegroup,eks.amazonaws.com/sourceLaunchTemplateId=lt-0707a4cf87214ec13 --max-pods=17

3월 07 12:15:26 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:15:26.803561 2932 kubelet.go:2502] "SyncLoop (probe)" probe="readiness" status="" pod="kube-system/aws-node-hvj27"

3월 07 12:15:26 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:15:26.835043 2932 kubelet.go:2502] "SyncLoop (probe)" probe="readiness" status="ready" pod="kube-system/aws-node-hvj27"

3월 07 12:15:26 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:15:26.842852 2932 pod_startup_latency_tracker.go:102] "Observed pod startup duration" pod="kube-system/aws-node-hvj27" podStartSLOduration=12.002100003 podCreationTimestamp="2024-03-07 12:14:59 +0000 UTC" firstStartedPulling="2024-03-07 12:15:10.669401265 +0000 UTC m=+11.680330042" lastFinishedPulling="2024-03-07 12:15:26.510116457 +0000 UTC m=+27.521045162" observedRunningTime="2024-03-07 12:15:26.842499435 +0000 UTC m=+27.853428160" watchObservedRunningTime="2024-03-07 12:15:26.842815123 +0000 UTC m=+27.853743846"

3월 07 12:15:31 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:15:31.779902 2932 csr.go:261] certificate signing request csr-nn6pl is approved, waiting to be issued

3월 07 12:15:31 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:15:31.802604 2932 csr.go:257] certificate signing request csr-nn6pl is issued

3월 07 12:15:32 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:15:32.804258 2932 certificate_manager.go:356] kubernetes.io/kubelet-serving: Certificate expiration is 2025-03-07 12:11:00 +0000 UTC, rotation deadline is 2024-12-27 18:17:49.80743319 +0000 UTC

3월 07 12:15:32 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:15:32.804298 2932 certificate_manager.go:356] kubernetes.io/kubelet-serving: Waiting 7086h2m17.003138985s for next certificate rotation

3월 07 12:15:33 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:15:33.805195 2932 certificate_manager.go:356] kubernetes.io/kubelet-serving: Certificate expiration is 2025-03-07 12:11:00 +0000 UTC, rotation deadline is 2024-12-02 15:10:57.580359155 +0000 UTC

3월 07 12:15:33 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:15:33.805239 2932 certificate_manager.go:356] kubernetes.io/kubelet-serving: Waiting 6482h55m23.775124028s for next certificate rotation

3월 07 12:19:59 ip-192-168-1-198.ap-northeast-2.compute.internal kubelet[2932]: I0307 12:19:59.257156 2932 kubelet.go:1450] "Image garbage collection succeeded"

>> node 192.168.2.221 <<

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubelet-args.conf, 30-kubelet-extra-args.conf

Active: active (running) since 목 2024-03-07 12:14:57 UTC; 30min ago

Docs: https://github.com/kubernetes/kubernetes

Process: 2921 ExecStartPre=/sbin/iptables -P FORWARD ACCEPT -w 5 (code=exited, status=0/SUCCESS)

Main PID: 2929 (kubelet)

Tasks: 13

Memory: 93.1M

CGroup: /runtime.slice/kubelet.service

└─2929 /usr/bin/kubelet --config /etc/kubernetes/kubelet/kubelet-config.json --kubeconfig /var/lib/kubelet/kubeconfig --container-runtime-endpoint unix:///run/containerd/containerd.sock --image-credential-provider-config /etc/eks/image-credential-provider/config.json --image-credential-provider-bin-dir /etc/eks/image-credential-provider --node-ip=192.168.2.221 --pod-infra-container-image=602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/pause:3.5 --v=2 --hostname-override=ip-192-168-2-221.ap-northeast-2.compute.internal --cloud-provider=external --node-labels=eks.amazonaws.com/sourceLaunchTemplateVersion=1,alpha.eksctl.io/cluster-name=myeks,alpha.eksctl.io/nodegroup-name=myeks-nodegroup,eks.amazonaws.com/nodegroup-image=ami-0568448c1f29d8a06,eks.amazonaws.com/capacityType=ON_DEMAND,eks.amazonaws.com/nodegroup=myeks-nodegroup,eks.amazonaws.com/sourceLaunchTemplateId=lt-0707a4cf87214ec13 --max-pods=17

3월 07 12:15:23 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:15:23.635852 2929 kubelet.go:2502] "SyncLoop (probe)" probe="readiness" status="" pod="kube-system/aws-node-r824f"

3월 07 12:15:23 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:15:23.666597 2929 kubelet.go:2502] "SyncLoop (probe)" probe="readiness" status="ready" pod="kube-system/aws-node-r824f"

3월 07 12:15:23 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:15:23.694976 2929 pod_startup_latency_tracker.go:102] "Observed pod startup duration" pod="kube-system/aws-node-r824f" podStartSLOduration=11.091679477 podCreationTimestamp="2024-03-07 12:14:58 +0000 UTC" firstStartedPulling="2024-03-07 12:15:08.585493622 +0000 UTC m=+10.617794014" lastFinishedPulling="2024-03-07 12:15:23.188742707 +0000 UTC m=+25.221043113" observedRunningTime="2024-03-07 12:15:23.662443822 +0000 UTC m=+25.694744240" watchObservedRunningTime="2024-03-07 12:15:23.694928576 +0000 UTC m=+25.727228990"

3월 07 12:15:31 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:15:31.774998 2929 csr.go:261] certificate signing request csr-rh2vj is approved, waiting to be issued

3월 07 12:15:31 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:15:31.802017 2929 csr.go:257] certificate signing request csr-rh2vj is issued

3월 07 12:15:32 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:15:32.803739 2929 certificate_manager.go:356] kubernetes.io/kubelet-serving: Certificate expiration is 2025-03-07 12:11:00 +0000 UTC, rotation deadline is 2024-12-23 15:08:08.194099082 +0000 UTC

3월 07 12:15:32 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:15:32.803780 2929 certificate_manager.go:356] kubernetes.io/kubelet-serving: Waiting 6986h52m35.39032337s for next certificate rotation

3월 07 12:15:33 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:15:33.804537 2929 certificate_manager.go:356] kubernetes.io/kubelet-serving: Certificate expiration is 2025-03-07 12:11:00 +0000 UTC, rotation deadline is 2025-01-12 13:12:38.581573378 +0000 UTC

3월 07 12:15:33 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:15:33.804581 2929 certificate_manager.go:356] kubernetes.io/kubelet-serving: Waiting 7464h57m4.776996707s for next certificate rotation

3월 07 12:19:58 ip-192-168-2-221.ap-northeast-2.compute.internal kubelet[2929]: I0307 12:19:58.278019 2929 kubelet.go:1450] "Image garbage collection succeeded"
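Both kubelet command lines above include `--max-pods=17`. With the VPC CNI in its default secondary-IP mode, that figure is commonly derived as ENIs × (IPv4 addresses per ENI − 1) + 2. The sketch below reproduces the arithmetic; the t3.medium limits of 3 ENIs and 6 IPv4 addresses per ENI are assumptions taken from AWS's published instance limits and should be checked for other instance types. `max_pods` is a hypothetical helper.

```shell
# Hypothetical helper: pod limit for the VPC CNI in secondary-IP mode.
# $1 = number of ENIs, $2 = IPv4 addresses per ENI
max_pods() {
  enis=$1
  ips_per_eni=$2
  # One address per ENI is its primary IP; the +2 covers host-network pods.
  echo $(( enis * (ips_per_eni - 1) + 2 ))
}

# Assumed t3.medium limits: 3 ENIs, 6 IPv4 addresses each.
max_pods 3 6   # prints 17
```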

## Check the process tree

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo pstree; echo; done

>> node 192.168.1.198 <<

systemd-+-2*[agetty]

|-amazon-ssm-agen-+-ssm-agent-worke---9*[{ssm-agent-worke}]

| `-8*[{amazon-ssm-agen}]

|-auditd---{auditd}

|-chronyd

|-containerd---16*[{containerd}]

|-containerd-shim-+-aws-vpc-cni-+-aws-k8s-agent---7*[{aws-k8s-agent}]

| | `-4*[{aws-vpc-cni}]

| |-controller---7*[{controller}]

| |-pause

| `-11*[{containerd-shim}]

|-containerd-shim-+-kube-proxy---4*[{kube-proxy}]

| |-pause

| `-11*[{containerd-shim}]

|-crond

|-dbus-daemon

|-2*[dhclient]

|-gssproxy---5*[{gssproxy}]

|-irqbalance---{irqbalance}

|-kubelet---12*[{kubelet}]

|-lvmetad

|-master-+-pickup

| `-qmgr

|-rngd

|-rpcbind

|-rsyslogd---2*[{rsyslogd}]

|-sshd---sshd---sshd---sudo---pstree

|-systemd-journal

|-systemd-logind

`-systemd-udevd

>> node 192.168.2.221 <<

systemd-+-2*[agetty]

|-amazon-ssm-agen-+-ssm-agent-worke---9*[{ssm-agent-worke}]

| `-7*[{amazon-ssm-agen}]

|-auditd---{auditd}

|-chronyd

|-containerd---15*[{containerd}]

|-containerd-shim-+-kube-proxy---5*[{kube-proxy}]

| |-pause

| `-10*[{containerd-shim}]

|-containerd-shim-+-aws-vpc-cni-+-aws-k8s-agent---7*[{aws-k8s-agent}]

| | `-3*[{aws-vpc-cni}]

| |-controller---6*[{controller}]

| |-pause

| `-11*[{containerd-shim}]

|-containerd-shim-+-coredns---7*[{coredns}]

| |-pause

| `-11*[{containerd-shim}]

|-containerd-shim-+-coredns---7*[{coredns}]

| |-pause

| `-10*[{containerd-shim}]

|-crond

|-dbus-daemon

|-2*[dhclient]

|-gssproxy---5*[{gssproxy}]

|-irqbalance---{irqbalance}

|-kubelet---12*[{kubelet}]

|-lvmetad

|-master-+-pickup

| `-qmgr

|-rngd

|-rpcbind

|-rsyslogd---2*[{rsyslogd}]

|-sshd---sshd---sshd---sudo---pstree

|-systemd-journal

|-systemd-logind

`-systemd-udevd

## afxuwww

...

## Check containerd process information

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i ps axf |grep /usr/bin/containerd; echo; done

>> node 192.168.1.198 <<

2793 ? Ssl 0:27 /usr/bin/containerd

3017 ? Sl 0:02 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id 2c8a7f89ab7f3647652d90dbc988c74b574e1991e1eea2931fb518221f104502 -address /run/containerd/containerd.sock

3028 ? Sl 0:00 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id 27912fcde3f609df9f98596c9dacfa27a17fdb2909fc945639a1fbfb44a1574e -address /run/containerd/containerd.sock

>> node 192.168.2.221 <<

2793 ? Ssl 0:27 /usr/bin/containerd

3013 ? Sl 0:00 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id bcca61055da2a7ab31512308c9b8e39ea22c37234d43b5712f6476efa095e407 -address /run/containerd/containerd.sock

3014 ? Sl 0:02 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id 8d530d660415ac2c5b36e579d36f1a5b60ce679b6a7a3afb67a72c17e2406d8a -address /run/containerd/containerd.sock

3560 ? Sl 0:00 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id d9c513660ccc53ebf6cd88fb974d606ec05ea29ac4deabfbe60c8000d4131167 -address /run/containerd/containerd.sock

3578 ? Sl 0:00 /usr/bin/containerd-shim-runc-v2 -namespace k8s.io -id 9d86f43a60022e5a60eee3218b7dc96844840ba4c56c10570c6ba354b3797059 -address /run/containerd/containerd.sock

## Check the Kubernetes manifests directory - no manifests deployed yet

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i ls /etc/kubernetes/manifests/; echo; done

>> node 192.168.1.198 <<

>> node 192.168.2.221 <<

## Check the kubelet configuration

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i ls /etc/kubernetes/kubelet/; echo; done

>> node 192.168.1.198 <<

kubelet-config.json

>> node 192.168.2.221 <<

kubelet-config.json

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i cat /etc/kubernetes/kubelet/kubelet-config.json; echo; done

>> node 192.168.1.198 <<

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"address": "0.0.0.0",

"authentication": {

"anonymous": {

"enabled": false

},

"webhook": {

"cacheTTL": "2m0s",

"enabled": true

},

"x509": {

"clientCAFile": "/etc/kubernetes/pki/ca.crt"

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"clusterDomain": "cluster.local",

"hairpinMode": "hairpin-veth",

"readOnlyPort": 0,

"cgroupDriver": "systemd",

"cgroupRoot": "/",

"featureGates": {

"RotateKubeletServerCertificate": true

},

"protectKernelDefaults": true,

"serializeImagePulls": false,

"serverTLSBootstrap": true,

"tlsCipherSuites": [

"TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256",

"TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",

"TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305",

"TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384",

"TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305",

"TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384",

"TLS_RSA_WITH_AES_256_GCM_SHA384",

"TLS_RSA_WITH_AES_128_GCM_SHA256"

],

"clusterDNS": [

"10.100.0.10"

],

"evictionHard": {

"memory.available": "100Mi",

"nodefs.available": "10%",

"nodefs.inodesFree": "5%"

},

"kubeReserved": {

"cpu": "70m",

"ephemeral-storage": "1Gi",

"memory": "442Mi"

},

"providerID": "aws:///ap-northeast-2a/i-04abe7caee6eb9d11",

"systemReservedCgroup": "/system",

"kubeReservedCgroup": "/runtime"

}

>> node 192.168.2.221 <<

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"address": "0.0.0.0",

"authentication": {

"anonymous": {

"enabled": false

},

"webhook": {

"cacheTTL": "2m0s",

"enabled": true

},

"x509": {

"clientCAFile": "/etc/kubernetes/pki/ca.crt"

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"clusterDomain": "cluster.local",

"hairpinMode": "hairpin-veth",

"readOnlyPort": 0,

"cgroupDriver": "systemd",

"cgroupRoot": "/",

"featureGates": {

"RotateKubeletServerCertificate": true

},

"protectKernelDefaults": true,

"serializeImagePulls": false,

"serverTLSBootstrap": true,

"tlsCipherSuites": [

"TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256",

"TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",

"TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305",

"TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384",

"TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305",

"TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384",

"TLS_RSA_WITH_AES_256_GCM_SHA384",

"TLS_RSA_WITH_AES_128_GCM_SHA256"

],

"clusterDNS": [

"10.100.0.10"

],

"evictionHard": {

"memory.available": "100Mi",

"nodefs.available": "10%",

"nodefs.inodesFree": "5%"

},

"kubeReserved": {

"cpu": "70m",

"ephemeral-storage": "1Gi",

"memory": "442Mi"

},

"providerID": "aws:///ap-northeast-2c/i-064862ab063e800fa",

"systemReservedCgroup": "/system",

"kubeReservedCgroup": "/runtime"

}

- Check node storage information

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i lsblk; echo; done

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i df -hT /; echo; done

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i lsblk; echo; done

>> node 192.168.1.198 <<

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

nvme0n1 259:0 0 30G 0 disk

├─nvme0n1p1 259:1 0 30G 0 part /

└─nvme0n1p128 259:2 0 1M 0 part

>> node 192.168.2.221 <<

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

nvme0n1 259:0 0 30G 0 disk

├─nvme0n1p1 259:1 0 30G 0 part /

└─nvme0n1p128 259:2 0 1M 0 part

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i df -hT /; echo; done

>> node 192.168.1.198 <<

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme0n1p1 xfs 30G 3.8G 27G 13% /

>> node 192.168.2.221 <<

Filesystem Type Size Used Avail Use% Mounted on

/dev/nvme0n1p1 xfs 30G 3.9G 27G 13% /
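The 30G root volume matches the `--node-volume-size=30` flag used at cluster creation. For a scripted check rather than reading the table by eye, the usage percentage can be pulled out of the `df -hT /` output. This is a sketch only; `root_use_percent` is a hypothetical helper that assumes the column layout shown above.

```shell
# Hypothetical helper: read `df -hT /` output on stdin and print Use% without the % sign.
root_use_percent() {
  # Column 6 is Use% in the `df -hT` layout; skip the header line.
  awk 'NR > 1 { gsub("%", "", $6); print $6 }'
}

# Example fed with the output of node 192.168.1.198 from this post.
printf '%s\n' \
  'Filesystem Type Size Used Avail Use% Mounted on' \
  '/dev/nvme0n1p1 xfs 30G 3.8G 27G 13% /' | root_use_percent

# Against a live node (left commented out here):
# ssh ec2-user@$N1 df -hT / | root_use_percent
```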

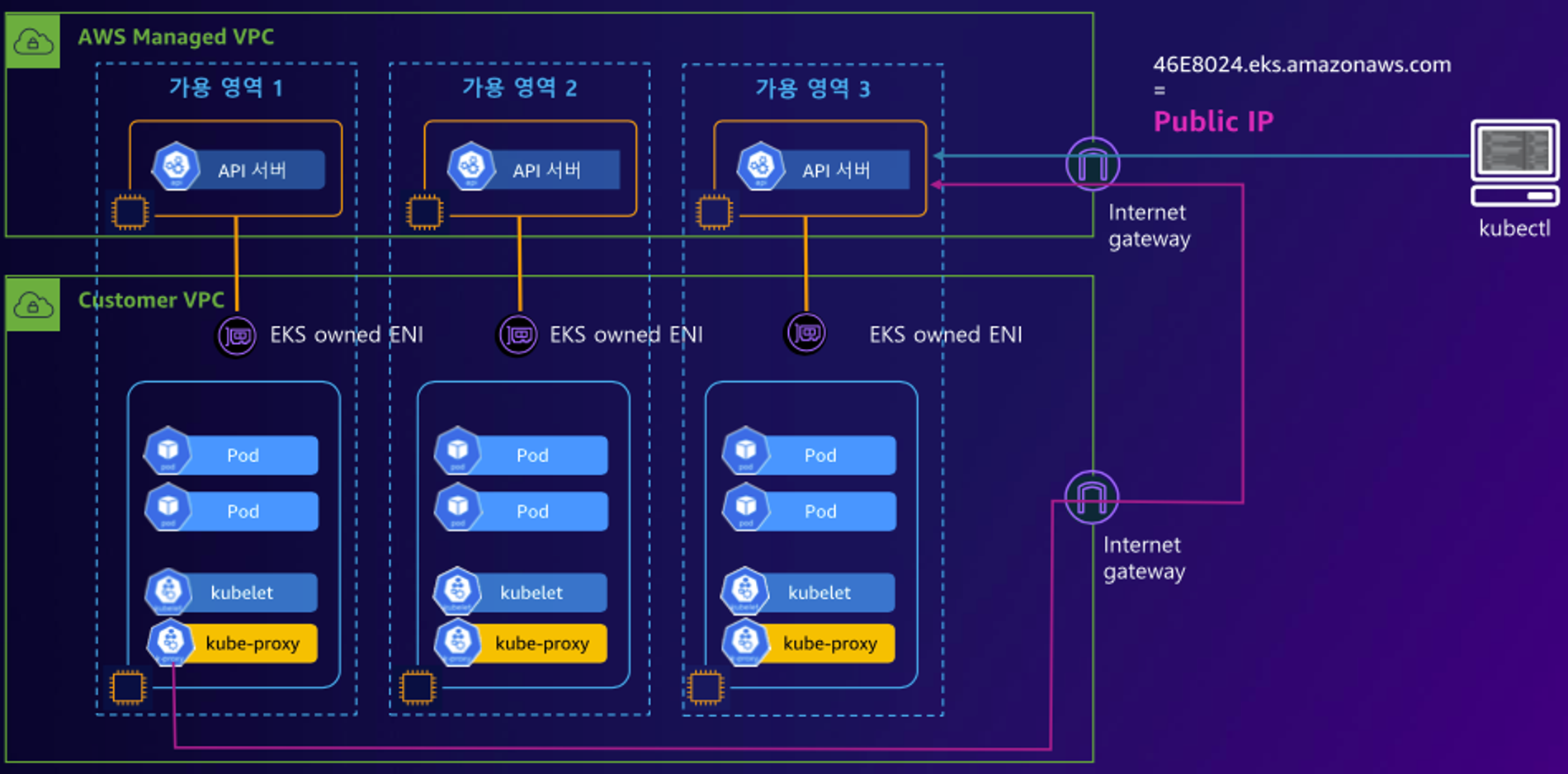

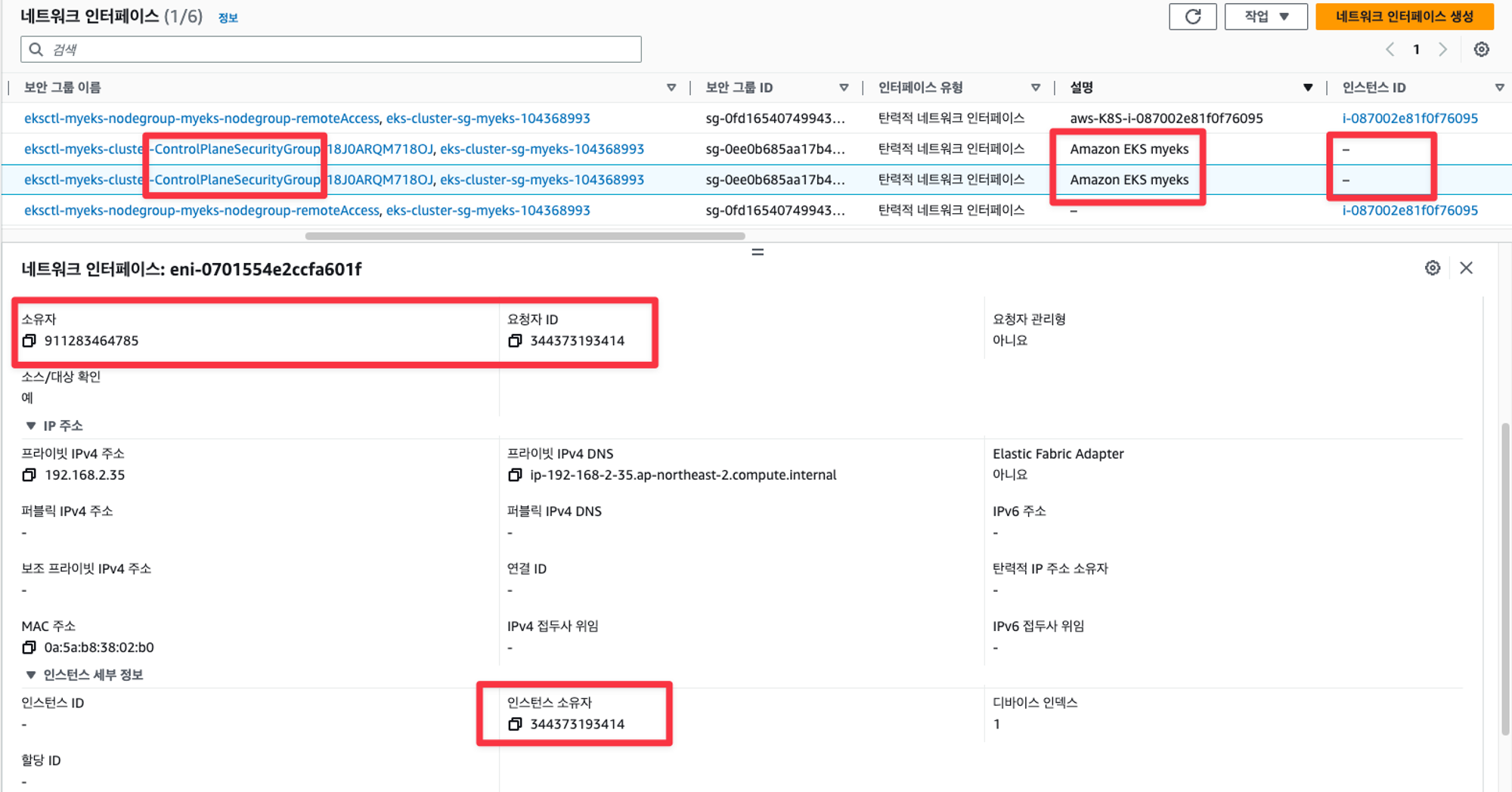

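Checking the EKS owned ENI

- EKS owned ENI : the worker nodes in the managed node group belong to my account, but the instance that the EKS owned ENI (NIC) is attached to belongs to AWS (it is part of the AWS-managed control plane).

- Check the peer addresses that kubelet and kube-proxy communicate with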

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ss -tnp; echo; done

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ss -tnp; echo; done

>> node 192.168.1.198 <<

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

ESTAB 0 0 192.168.1.198:41842 52.95.194.61:443 users:(("ssm-agent-worke",pid=2555,fd=10))

ESTAB 0 0 192.168.1.198:47750 15.165.51.28:443 users:(("kube-proxy",pid=3140,fd=7))

ESTAB 0 0 192.168.1.198:40328 10.100.0.1:443 users:(("controller",pid=3551,fd=11))

ESTAB 0 0 192.168.1.198:47758 10.100.0.1:443 users:(("aws-k8s-agent",pid=3393,fd=7))

ESTAB 0 0 192.168.1.198:40138 15.165.162.92:443 users:(("kubelet",pid=2932,fd=11))

ESTAB 0 56 192.168.1.198:22 192.168.1.100:50854 users:(("sshd",pid=15594,fd=3),("sshd",pid=15562,fd=3))

ESTAB 0 0 192.168.1.198:51708 52.95.195.99:443 users:(("ssm-agent-worke",pid=2555,fd=14))

>> node 192.168.2.221 <<

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

ESTAB 0 0 192.168.2.221:36534 10.100.0.1:443 users:(("controller",pid=3801,fd=11))

ESTAB 0 0 192.168.2.221:40562 15.165.162.92:443 users:(("kubelet",pid=2929,fd=20))

ESTAB 0 0 192.168.2.221:53754 10.100.0.1:443 users:(("aws-k8s-agent",pid=3394,fd=7))

ESTAB 0 0 192.168.2.221:35726 52.95.195.99:443 users:(("ssm-agent-worke",pid=2472,fd=14))

ESTAB 0 0 192.168.2.221:34730 15.165.51.28:443 users:(("kube-proxy",pid=3143,fd=7))

ESTAB 0 0 192.168.2.221:48982 52.95.194.61:443 users:(("ssm-agent-worke",pid=2472,fd=11))

ESTAB 0 0 192.168.2.221:22 192.168.1.100:54534 users:(("sshd",pid=15916,fd=3),("sshd",pid=15884,fd=3))

- Check the cluster endpoint DNS
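The endpoint returned by `aws eks describe-cluster` is a URL, and the `cut -d '/' -f 3` step in this check keeps only the host name so that `dig` can resolve it. The same extraction can be sketched with plain parameter expansion; `endpoint_host` is a hypothetical helper and the URL in the example is a made-up placeholder, not this cluster's endpoint.

```shell
# Hypothetical helper: extract the host name from a URL.
endpoint_host() {
  host=${1#*://}    # drop the scheme, e.g. "https://"
  host=${host%%/*}  # drop any path after the host
  printf '%s\n' "$host"
}

# Placeholder endpoint for illustration only.
endpoint_host "https://EXAMPLE0123456789.example.eks.amazonaws.com"

# With the real cluster (left commented out here):
# APIDNS=$(endpoint_host "$(aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint)")
```

The addresses that `dig` returns for this host can then be compared with the kubelet and kube-proxy peer addresses in the `ss -tnp` output above.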

APIDNS=$(aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint | cut -d '/' -f 3)

dig +short $APIDNS

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# APIDNS=$(aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint | cut -d '/' -f 3)

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# dig +short $APIDNS

15.165.162.92

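15.165.51.28

EKS security groups

- Consider how each security group is applied

- eksctl-myeks-cluster-ControlPlaneSecurityGroup-AGUZPBU562P1

  - Security group for traffic between the control plane and the worker node groups, used when connecting to the control plane

- eksctl-myeks-cluster-ClusterSharedNodeSecurityGroup-5TX5WY51YKQG

  - Security group that lets managed and self-managed nodes talk to each other, that is, communication among all nodes in the cluster

- eks-cluster-sg-myeks-104368993

  - Security group that lets the control plane, managed nodes, and self-managed nodes all communicate with one another

- eksctl-myeks-nodegroup-myeks-nodegroup-remoteAccess

  - Allows SSH access to the node group

- myeks-EKSEC2SG-1COQWCAKEDED

  - Security group of the bastion host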
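As a rough aid for reading the security group listing in this section, the names can be mapped back to the roles described in the list above. This is only a sketch: the `sg_role` helper and its name patterns are assumptions derived from this one cluster's naming, and the sample input simply mimics the two-column shape of `--output text`.

```shell
# Label a security group name with the role described in the list above.
# The patterns are assumptions based on this cluster's naming only.
sg_role() {
  case "$1" in
    eksctl-*-cluster-ControlPlaneSecurityGroup-*)      echo "control plane <-> node groups" ;;
    eksctl-*-cluster-ClusterSharedNodeSecurityGroup-*) echo "node <-> node" ;;
    eks-cluster-sg-*)                                  echo "control plane + all nodes" ;;
    eksctl-*-nodegroup-*-remoteAccess)                 echo "SSH to node group" ;;
    *-EKSEC2SG-*)                                      echo "bastion host" ;;
    *)                                                 echo "other" ;;
  esac
}

# Sample input: GroupId and GroupName, one pair per line.
while read -r id name; do
  printf '%s\t%s\t%s\n' "$id" "$name" "$(sg_role "$name")"
done <<'EOF'
sg-0b722e2b25d4cd8a7 eksctl-myeks-cluster-ClusterSharedNodeSecurityGroup-5TX5WY51YKQG
sg-0de6a6b3a39719a7e eks-cluster-sg-myeks-104368993
sg-066ad17d777fd703f myeks-EKSEC2SG-1COQWCAKEDED
sg-0239c0a5e05197b25 default
EOF
```

On the bastion host, the heredoc could be replaced by piping the real `aws ec2 describe-security-groups ... --output text` result into the loop.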

aws ec2 describe-security-groups --query 'SecurityGroups[*].[GroupId, GroupName]' --output text

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# aws ec2 describe-security-groups --query 'SecurityGroups[*].[GroupId, GroupName]' --output text

sg-0239c0a5e05197b25 default

sg-0b722e2b25d4cd8a7 eksctl-myeks-cluster-ClusterSharedNodeSecurityGroup-5TX5WY51YKQG

sg-0de6a6b3a39719a7e eks-cluster-sg-myeks-104368993

sg-066ad17d777fd703f myeks-EKSEC2SG-1COQWCAKEDED

sg-0b501c0b29846c955 eksctl-myeks-cluster-ControlPlaneSecurityGroup-AGUZPBU562P1

sg-0ad66961f5a7631cb default

sg-0667bcaed6e6b045f default

sg-0a6273614729e7dad eksctl-myeks-nodegroup-myeks-nodegroup-remoteAccess

3. Cluster management convenience

- Use kubectl autocompletion and an alias

- Already configured on the bastion host

source <(kubectl completion bash)

alias k=kubectl

complete -F __start_kubectl k

- Install krew, the kubectl CLI plugin manager

- Already installed on the bastion host

# Install

curl -fsSLO https://github.com/kubernetes-sigs/krew/releases/download/v0.4.4/krew-linux_amd64.tar.gz

tar zxvf krew-linux_amd64.tar.gz

./krew-linux_amd64 install krew

tree -L 3 /root/.krew/bin

# Usage

kubectl krew

kubectl krew update

kubectl krew search

kubectl krew list

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl krew

krew is the kubectl plugin manager.

You can invoke krew through kubectl: "kubectl krew [command]..."

Usage:

kubectl krew [command]

Available Commands:

help Help about any command

index Manage custom plugin indexes

info Show information about an available plugin

install Install kubectl plugins

list List installed kubectl plugins

search Discover kubectl plugins

uninstall Uninstall plugins

update Update the local copy of the plugin index

upgrade Upgrade installed plugins to newer versions

version Show krew version and diagnostics

Flags:

-h, --help help for krew

-v, --v Level number for the log level verbosity

Use "kubectl krew [command] --help" for more information about a command.

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl krew update

Updated the local copy of plugin index.

.

.

.

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl krew search

...

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# kubectl krew list

PLUGIN VERSION

ctx v0.9.5

get-all v1.3.8

krew v0.4.4

neat v2.0.3

ns v0.9.5

- Install and use kube-ctx and kube-ns with krew

- Already installed on the bastion host

- kube-ctx: switch between Kubernetes contexts

# Install

kubectl krew install ctx

# List contexts

kubectl ctx

# Switch context

kubectl ctx <your-context-name>

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# k ctx

leeeuijoo@myeks.ap-northeast-2.eksctl.io

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# k ctx

leeeuijoo@myeks.ap-northeast-2.eksctl.io

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# k ctx leeeuijoo@myeks.ap-northeast-2.eksctl.io

Switched to context "leeeuijoo@myeks.ap-northeast-2.eksctl.io".

- kube-ns: switch namespaces

- Think of a namespace as a virtual cluster

- Lets you change the current namespace
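Switching the namespace changes the namespace stored in the current kubeconfig context, which is why the plugin reports the context as "modified". A minimal sketch of the plain-kubectl counterpart follows. The `run` wrapper and the `DRY_RUN` variable are illustrative helpers, not part of kubectl; by default the commands are only printed, so the sketch can be read without a cluster.

```shell
# Print commands by default; set DRY_RUN=0 on a host with a working kubeconfig.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Store the given namespace in the current context of the kubeconfig.
use_namespace() {
  run kubectl config set-context --current "--namespace=$1"
}

# Show which namespace the current context points at.
current_namespace() {
  run kubectl config view --minify --output 'jsonpath={..namespace}'
}

use_namespace kube-system
current_namespace
use_namespace default
```

The plugin is mainly a shortcut for this, plus listing namespaces and returning to the previous one with `-`.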

# Install

kubectl krew install ns

# List namespaces

kubectl ns

# Terminal 1

watch kubectl get pod

# Switch to the kube-system namespace

kubectl ns kube-system

# Switch back to the default namespace (`-` returns to the previous namespace)

kubectl ns -

or

kubectl ns default

# Output

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# k get ns

NAME STATUS AGE

default Active 79m

kube-node-lease Active 79m

kube-public Active 79m

kube-system Active 79m

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# k ns

default

kube-node-lease

kube-public

kube-system

(leeeuijoo@myeks:N/A) [root@myeks-host ~]# k ns kube-system

Context "leeeuijoo@myeks.ap-northeast-2.eksctl.io" modified.

Active namespace is "kube-system".

(leeeuijoo@myeks:kube-system) [root@myeks-host ~]# k ns

default

kube-node-lease

kube-public

kube-system

(leeeuijoo@myeks:kube-system) [root@myeks-host ~]# k ns default

Context "leeeuijoo@myeks.ap-northeast-2.eksctl.io" modified.

Active namespace is "default".

(leeeuijoo@myeks:default) [root@myeks-host ~]# k ns

default

kube-node-lease

kube-public

kube-system

- Install and use other plugins with krew: df-pv, get-all, ktop, neat, oomd, view-secret, mtail, tree

kubectl krew install df-pv get-all ktop neat oomd view-secret mtail tree

(leeeuijoo@myeks:default) [root@myeks-host ~]# kubectl krew install df-pv get-all ktop neat oomd view-secret mtail tree

Updated the local copy of plugin index.

Installing plugin: df-pv

Installed plugin: df-pv

\

| Use this plugin:

| kubectl df-pv

| Documentation:

| https://github.com/yashbhutwala/kubectl-df-pv

/

WARNING: You installed plugin "df-pv" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

Installing plugin: get-all

W0307 22:31:28.258665 11100 install.go:160] Skipping plugin "get-all", it is already installed

Installing plugin: ktop

Installed plugin: ktop

\

| Use this plugin:

| kubectl ktop

| Documentation:

| https://github.com/vladimirvivien/ktop

| Caveats:

| \

| | * By default, ktop displays metrics for resources in the default namespace. You can override this behavior

| | by providing a --namespace or use -A for all namespaces.

| /

/

WARNING: You installed plugin "ktop" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

Installing plugin: neat

W0307 22:31:30.021254 11100 install.go:160] Skipping plugin "neat", it is already installed

Installing plugin: oomd

Installed plugin: oomd

\

| Use this plugin:

| kubectl oomd

| Documentation:

| https://github.com/jdockerty/kubectl-oomd

/

WARNING: You installed plugin "oomd" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

Installing plugin: view-secret

Installed plugin: view-secret

\

| Use this plugin:

| kubectl view-secret

| Documentation:

| https://github.com/elsesiy/kubectl-view-secret

/

WARNING: You installed plugin "view-secret" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

Installing plugin: mtail

Installed plugin: mtail

\

| Use this plugin:

| kubectl mtail

| Documentation:

| https://gitlab.com/grzesuav/kubectl-mtail

| Caveats:

| \

| | This plugin needs the following programs:

| | * jq

| /

/

WARNING: You installed plugin "mtail" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

Installing plugin: tree

Installed plugin: tree

\

| Use this plugin:

| kubectl tree

| Documentation:

| https://github.com/ahmetb/kubectl-tree

| Caveats:

| \

| | * For resources that are not in default namespace, currently you must

| | specify -n/--namespace explicitly (the current namespace setting is not

| | yet used).

| /

/

WARNING: You installed plugin "tree" from the krew-index plugin repository.

These plugins are not audited for security by the Krew maintainers.

Run them at your own risk.

- get-all plugin

- Lists every kind of Kubernetes resource

- A namespace can be specified

(leeeuijoo@myeks:default) [root@myeks-host ~]# k get-all -n kube-system

NAME NAMESPACE AGE

configmap/amazon-vpc-cni kube-system 83m

configmap/aws-auth kube-system 78m

configmap/coredns kube-system 83m

configmap/extension-apiserver-authentication kube-system 84m

configmap/kube-apiserver-legacy-service-account-token-tracking kube-system 84m

configmap/kube-proxy kube-system 83m

configmap/kube-proxy-config kube-system 83m

configmap/kube-root-ca.crt kube-system 84m

endpoints/kube-dns kube-system 83m

pod/aws-node-hvj27 kube-system 78m

pod/aws-node-r824f kube-system 78m

pod/coredns-56dfff779f-bclc4 kube-system 83m

pod/coredns-56dfff779f-ql6g9 kube-system 83m

pod/kube-proxy-q4t24 kube-system 78m

pod/kube-proxy-zm6vf kube-system 78m

serviceaccount/attachdetach-controller kube-system 84m

serviceaccount/aws-cloud-provider kube-system 84m

serviceaccount/aws-node kube-system 83m

serviceaccount/certificate-controller kube-system 84m

serviceaccount/clusterrole-aggregation-controller kube-system 84m

serviceaccount/coredns kube-system 83m

serviceaccount/cronjob-controller kube-system 84m

serviceaccount/daemon-set-controller kube-system 84m

serviceaccount/default kube-system 84m

serviceaccount/deployment-controller kube-system 84m

serviceaccount/disruption-controller kube-system 84m

serviceaccount/eks-vpc-resource-controller kube-system 84m

serviceaccount/endpoint-controller kube-system 84m

serviceaccount/endpointslice-controller kube-system 84m

serviceaccount/endpointslicemirroring-controller kube-system 84m

serviceaccount/ephemeral-volume-controller kube-system 84m

serviceaccount/expand-controller kube-system 84m

serviceaccount/generic-garbage-collector kube-system 84m

serviceaccount/horizontal-pod-autoscaler kube-system 84m

serviceaccount/job-controller kube-system 84m

serviceaccount/kube-proxy kube-system 83m

serviceaccount/namespace-controller kube-system 84m

serviceaccount/node-controller kube-system 84m

serviceaccount/persistent-volume-binder kube-system 84m

serviceaccount/pod-garbage-collector kube-system 84m

serviceaccount/pv-protection-controller kube-system 84m

serviceaccount/pvc-protection-controller kube-system 84m

serviceaccount/replicaset-controller kube-system 84m

serviceaccount/replication-controller kube-system 84m

serviceaccount/resourcequota-controller kube-system 84m

serviceaccount/root-ca-cert-publisher kube-system 84m

serviceaccount/service-account-controller kube-system 84m

serviceaccount/service-controller kube-system 84m

serviceaccount/statefulset-controller kube-system 84m

serviceaccount/tagging-controller kube-system 84m

serviceaccount/ttl-after-finished-controller kube-system 84m

serviceaccount/ttl-controller kube-system 84m

service/kube-dns kube-system 83m

controllerrevision.apps/aws-node-56fd578595 kube-system 83m

controllerrevision.apps/kube-proxy-557dc69d57 kube-system 83m

daemonset.apps/aws-node kube-system 83m

daemonset.apps/kube-proxy kube-system 83m

deployment.apps/coredns kube-system 83m

replicaset.apps/coredns-56dfff779f kube-system 83m

lease.coordination.k8s.io/apiserver-5icngiytssw45tc3aelbkm2nj4 kube-system 80m

lease.coordination.k8s.io/apiserver-jiddcrfp6co6gxiygbup2pfex4 kube-system 84m

lease.coordination.k8s.io/cloud-controller-manager kube-system 84m

lease.coordination.k8s.io/cp-vpc-resource-controller kube-system 84m

lease.coordination.k8s.io/eks-certificates-controller kube-system 84m

lease.coordination.k8s.io/kube-controller-manager kube-system 84m

lease.coordination.k8s.io/kube-scheduler kube-system 84m

endpointslice.discovery.k8s.io/kube-dns-5z4nc kube-system 83m

poddisruptionbudget.policy/coredns kube-system 83m

rolebinding.rbac.authorization.k8s.io/eks-vpc-resource-controller-rolebinding kube-system 84m

rolebinding.rbac.authorization.k8s.io/eks:addon-manager kube-system 84m

rolebinding.rbac.authorization.k8s.io/eks:authenticator kube-system 84m

rolebinding.rbac.authorization.k8s.io/eks:az-poller kube-system 84m

rolebinding.rbac.authorization.k8s.io/eks:certificate-controller kube-system 84m

rolebinding.rbac.authorization.k8s.io/eks:cloud-controller-manager:apiserver-authentication-reader kube-system 84m

rolebinding.rbac.authorization.k8s.io/eks:fargate-manager kube-system 84m

rolebinding.rbac.authorization.k8s.io/eks:k8s-metrics kube-system 84m

rolebinding.rbac.authorization.k8s.io/eks:network-policy-controller kube-system 83m

rolebinding.rbac.authorization.k8s.io/eks:node-manager kube-system 84m

rolebinding.rbac.authorization.k8s.io/eks:service-operations kube-system 84m

rolebinding.rbac.authorization.k8s.io/system::extension-apiserver-authentication-reader kube-system 84m

rolebinding.rbac.authorization.k8s.io/system::leader-locking-kube-controller-manager kube-system 84m

rolebinding.rbac.authorization.k8s.io/system::leader-locking-kube-scheduler kube-system 84m

rolebinding.rbac.authorization.k8s.io/system:controller:bootstrap-signer kube-system 84m

rolebinding.rbac.authorization.k8s.io/system:controller:cloud-provider kube-system 84m

rolebinding.rbac.authorization.k8s.io/system:controller:token-cleaner kube-system 84m

role.rbac.authorization.k8s.io/eks-vpc-resource-controller-role kube-system 84m

role.rbac.authorization.k8s.io/eks:addon-manager kube-system 84m

role.rbac.authorization.k8s.io/eks:authenticator kube-system 84m

role.rbac.authorization.k8s.io/eks:az-poller kube-system 84m

role.rbac.authorization.k8s.io/eks:certificate-controller kube-system 84m

role.rbac.authorization.k8s.io/eks:fargate-manager kube-system 84m

role.rbac.authorization.k8s.io/eks:k8s-metrics kube-system 84m

role.rbac.authorization.k8s.io/eks:network-policy-controller kube-system 84m

role.rbac.authorization.k8s.io/eks:node-manager kube-system 84m

role.rbac.authorization.k8s.io/eks:service-operations-configmaps kube-system 84m

role.rbac.authorization.k8s.io/extension-apiserver-authentication-reader kube-system 84m

role.rbac.authorization.k8s.io/system::leader-locking-kube-controller-manager kube-system 84m

role.rbac.authorization.k8s.io/system::leader-locking-kube-scheduler kube-system 84m

role.rbac.authorization.k8s.io/system:controller:bootstrap-signer kube-system 84m

role.rbac.authorization.k8s.io/system:controller:cloud-provider kube-system 84m

role.rbac.authorization.k8s.io/system:controller:token-cleaner kube-system 84m

- ktop

- Shows cluster resource information in a top-like terminal UI

- Usage:

kubectl ktop

- Others

# Use oomd

kubectl oomd

# Use df-pv

kubectl df-pv

# Use view-secret: decode a Secret

kubectl view-secret

- Install and use kube-ps1

# Install and configure

git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1

cat <<"EOT" >> /root/.bash_profile

source /root/kube-ps1/kube-ps1.sh

KUBE_PS1_SYMBOL_ENABLE=true

function get_cluster_short() {

echo "$1" | cut -d . -f1

}

KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short

KUBE_PS1_SUFFIX=') '

PS1='$(kube_ps1)'$PS1

EOT

# Apply: log out, then log back in

exit

exit

# Select the default namespace

kubectl ns default
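The `get_cluster_short` helper in the profile snippet is what shortens the prompt to `(leeeuijoo@myeks:default)`: it keeps only the part of the context name before the first dot. A small sketch of that behavior, using the context name shown in the `kubectl ctx` output of this post; the `${ctx%%.*}` variant is an equivalent added here for comparison and is not part of the original setup.

```shell
# Same helper as in the profile snippet: keep the text before the first dot.
get_cluster_short() {
  echo "$1" | cut -d . -f1
}

ctx="leeeuijoo@myeks.ap-northeast-2.eksctl.io"

get_cluster_short "$ctx"

# Equivalent without spawning cut: strip the longest suffix starting at a dot.
echo "${ctx%%.*}"
```

Both lines print `leeeuijoo@myeks`, the cluster part of the prompt.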

## Usage

(leeeuijoo@myeks:default) [root@myeks-host ~]# k ns default

Context "leeeuijoo@myeks.ap-northeast-2.eksctl.io" modified.

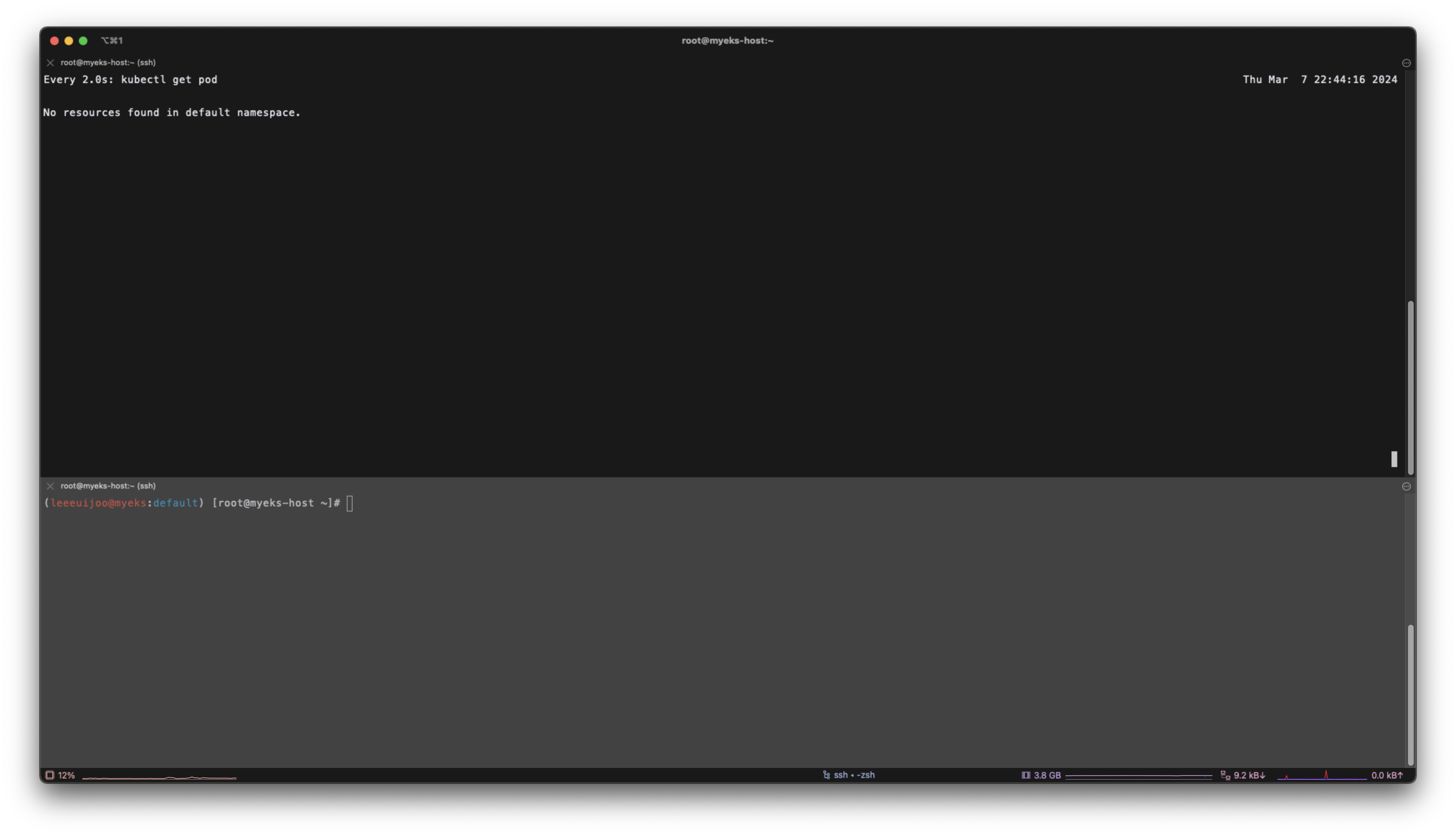

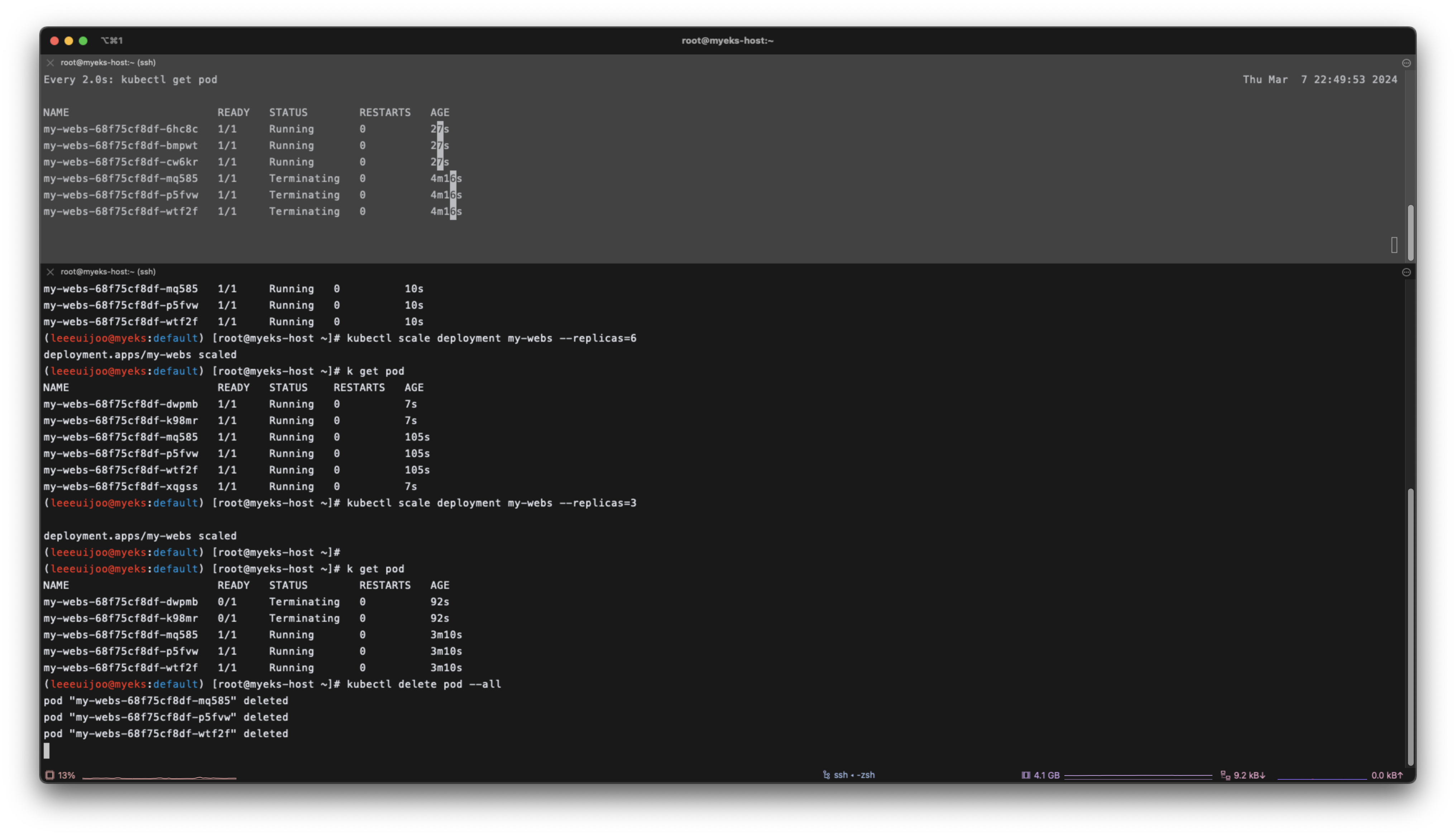

Active namespace is "default".4. Pod, Deployment, Service, LB 생성 테스트

# 터미널1 (모니터링)

watch -d 'kubectl get pod'

# 터미널2

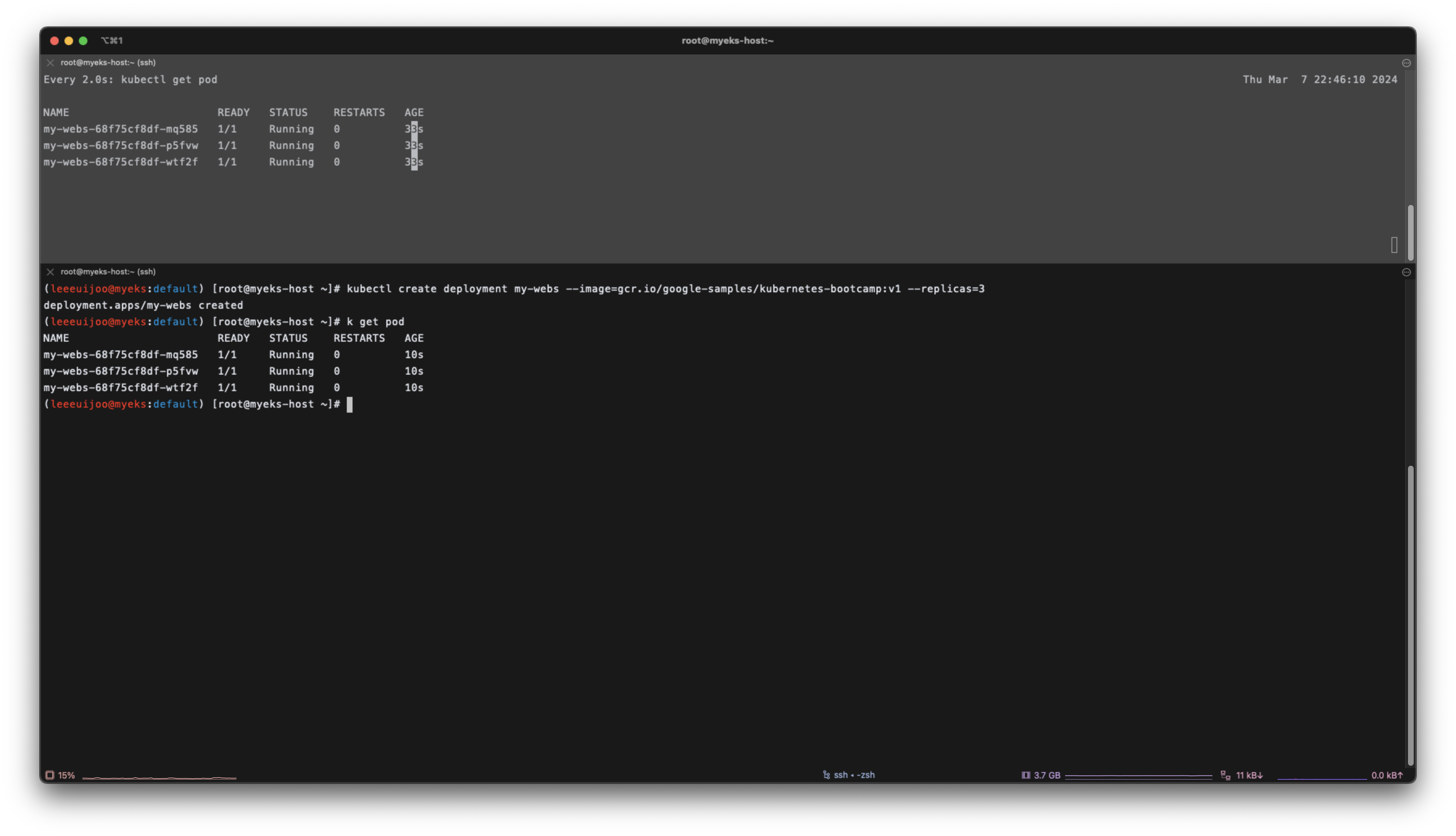

# Deployment 배포(Pod 3개)

kubectl create deployment my-webs --image=gcr.io/google-samples/kubernetes-bootcamp:v1 --replicas=3

kubectl get pod -w

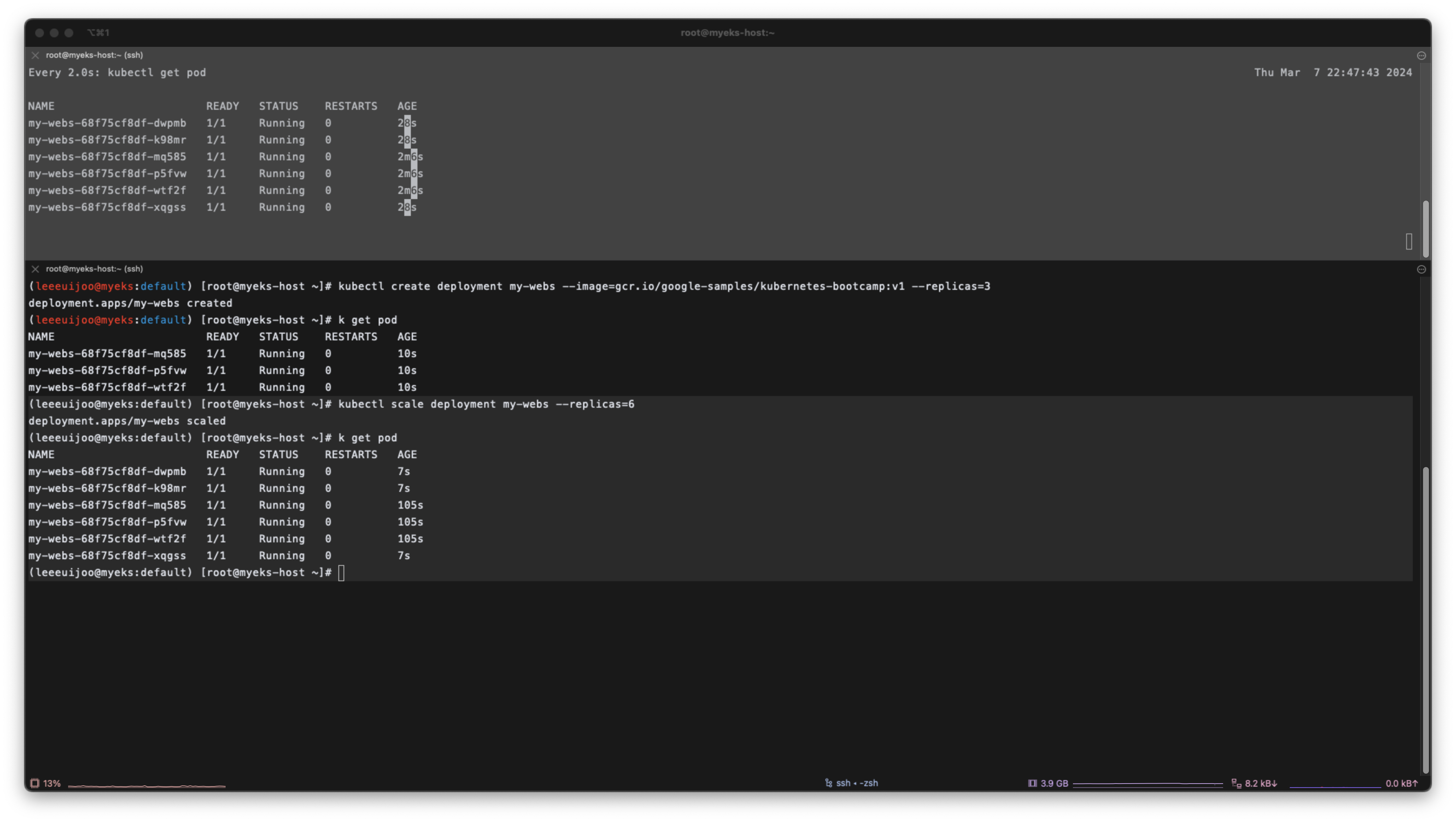

# Scale the pods up and down

kubectl scale deployment my-webs --replicas=6 && kubectl get pod -w

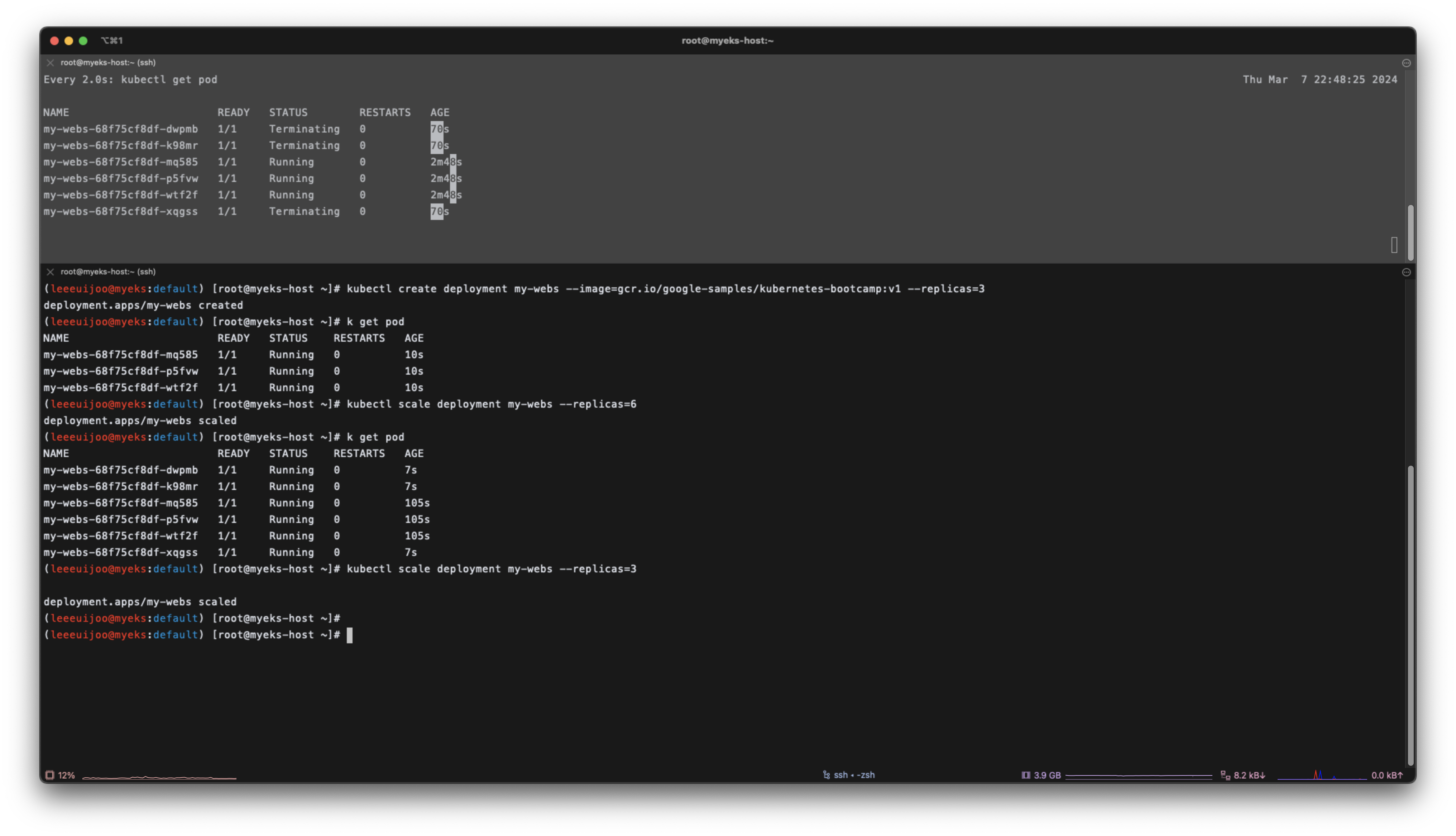

kubectl scale deployment my-webs --replicas=3

kubectl get pod

# Force-delete the pods: a rough check of desired state and the declarative model

kubectl delete pod --all && kubectl get pod -w

kubectl get pod

# Delete the Deployment when the exercise is done

kubectl delete deploy my-webs
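The pod-deletion test works because the Deployment records a desired state (3 replicas) and the controller keeps recreating pods until the actual state matches it. A minimal sketch of the same Deployment written declaratively as a manifest; the labels and the container name are assumptions modeled on what `kubectl create deployment` typically generates, and the kubectl steps are left as comments because they need cluster access.

```shell
# Write a manifest that declares the desired state: 3 replicas of the
# bootcamp image. Labels and container name are illustrative assumptions.
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-webs
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-webs
  template:
    metadata:
      labels:
        app: my-webs
    spec:
      containers:
      - name: kubernetes-bootcamp
        image: gcr.io/google-samples/kubernetes-bootcamp:v1
EOF

echo "manifest written to $manifest"
# On a host with cluster access, apply it and then delete a pod to watch
# the declared replica count being restored:
#   kubectl apply -f "$manifest"
#   kubectl delete pod -l app=my-webs
#   kubectl get pod -l app=my-webs
```

Changing `replicas` in the file and re-applying it has the same effect as `kubectl scale`, but the file remains the record of the intended state.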

- The default namespace has no resources at the start

(leeeuijoo@myeks:default) [root@myeks-host ~]# kubectl create deployment my-webs --image=gcr.io/google-samples/kubernetes-bootcamp:v1 --replicas=3

deployment.apps/my-webs created

(leeeuijoo@myeks:default) [root@myeks-host ~]# k get pod

NAME READY STATUS RESTARTS AGE

my-webs-68f75cf8df-mq585 1/1 Running 0 10s

my-webs-68f75cf8df-p5fvw 1/1 Running 0 10s

my-webs-68f75cf8df-wtf2f 1/1 Running 0 10s

- Scale the pods up and down

(leeeuijoo@myeks:default) [root@myeks-host ~]# kubectl scale deployment my-webs --replicas=6

deployment.apps/my-webs scaled

(leeeuijoo@myeks:default) [root@myeks-host ~]# k get pod

NAME READY STATUS RESTARTS AGE

my-webs-68f75cf8df-dwpmb 1/1 Running 0 7s

my-webs-68f75cf8df-k98mr 1/1 Running 0 7s

my-webs-68f75cf8df-mq585 1/1 Running 0 105s

my-webs-68f75cf8df-p5fvw 1/1 Running 0 105s

my-webs-68f75cf8df-wtf2f 1/1 Running 0 105s

my-webs-68f75cf8df-xqgss 1/1 Running 0 7s

(leeeuijoo@myeks:default) [root@myeks-host ~]# kubectl scale deployment my-webs --replicas=3

deployment.apps/my-webs scaled

(leeeuijoo@myeks:default) [root@myeks-host ~]# kubectl delete pod --all

pod "my-webs-68f75cf8df-mq585" deleted

pod "my-webs-68f75cf8df-p5fvw" deleted

pod "my-webs-68f75cf8df-wtf2f" deleted

(leeeuijoo@myeks:default) [root@myeks-host ~]# k get pod

NAME READY STATUS RESTARTS AGE

my-webs-68f75cf8df-6hc8c 1/1 Running 0 41s

my-webs-68f75cf8df-bmpwt 1/1 Running 0 41s

my-webs-68f75cf8df-cw6kr 1/1 Running 0 41s

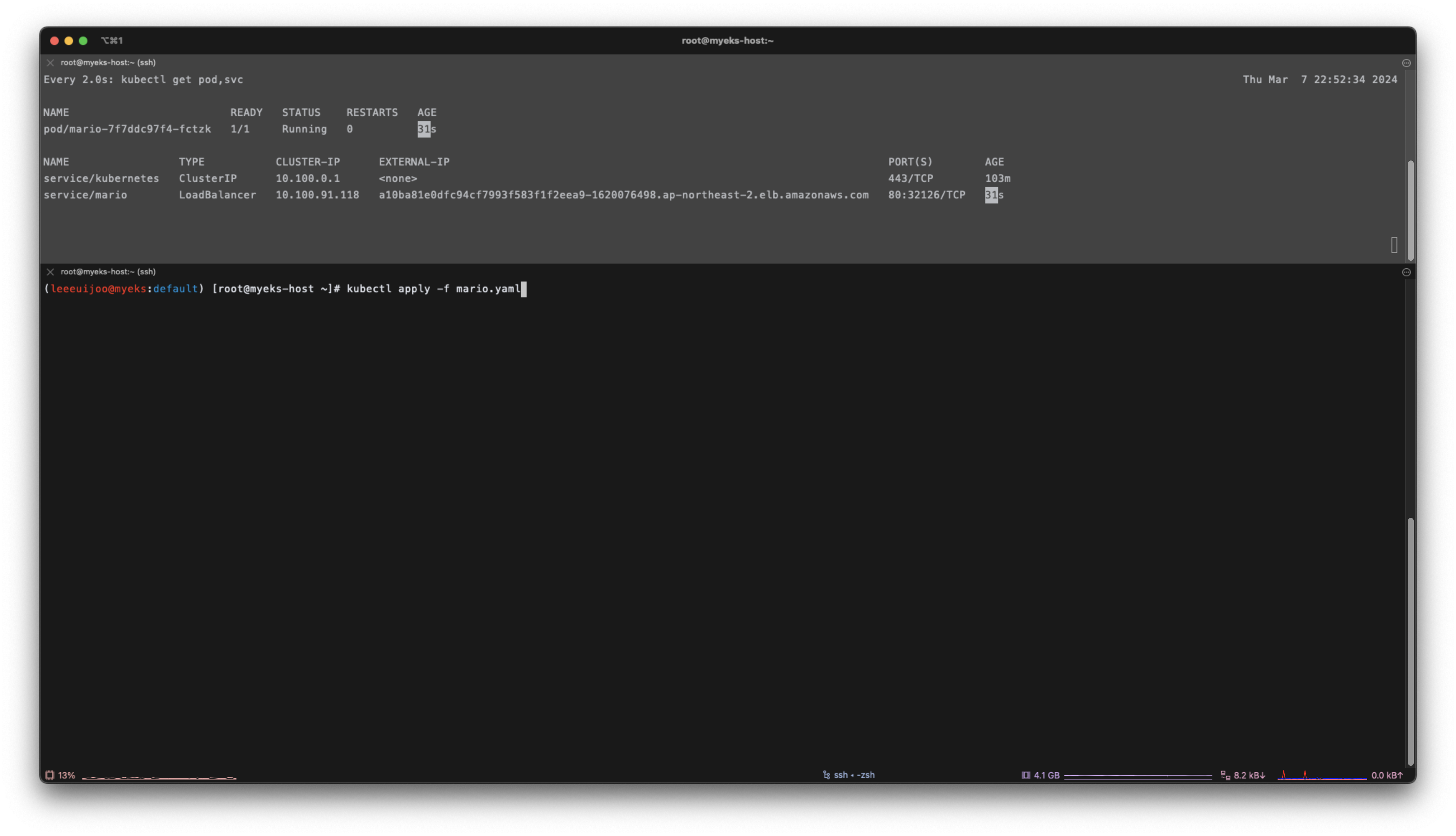

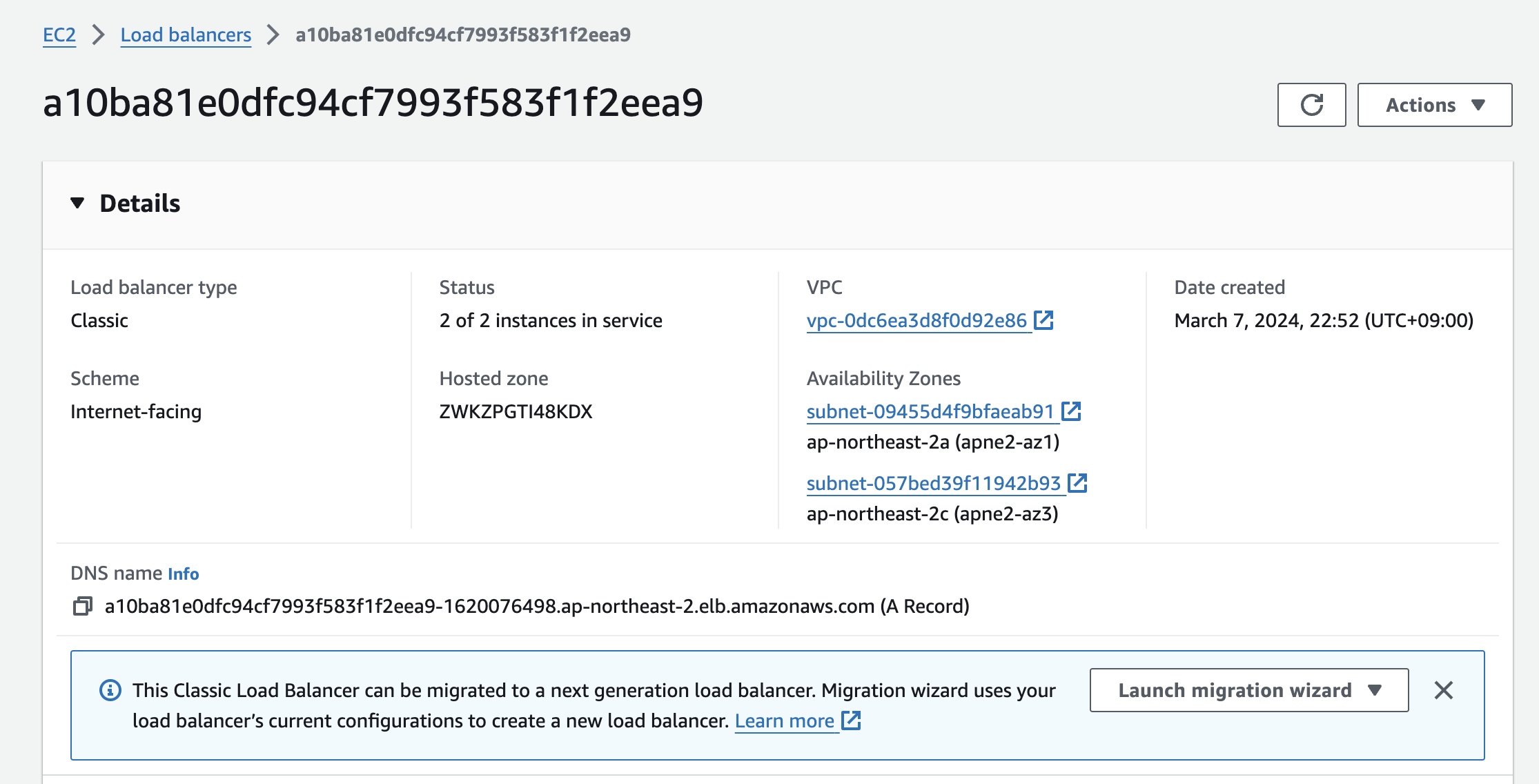

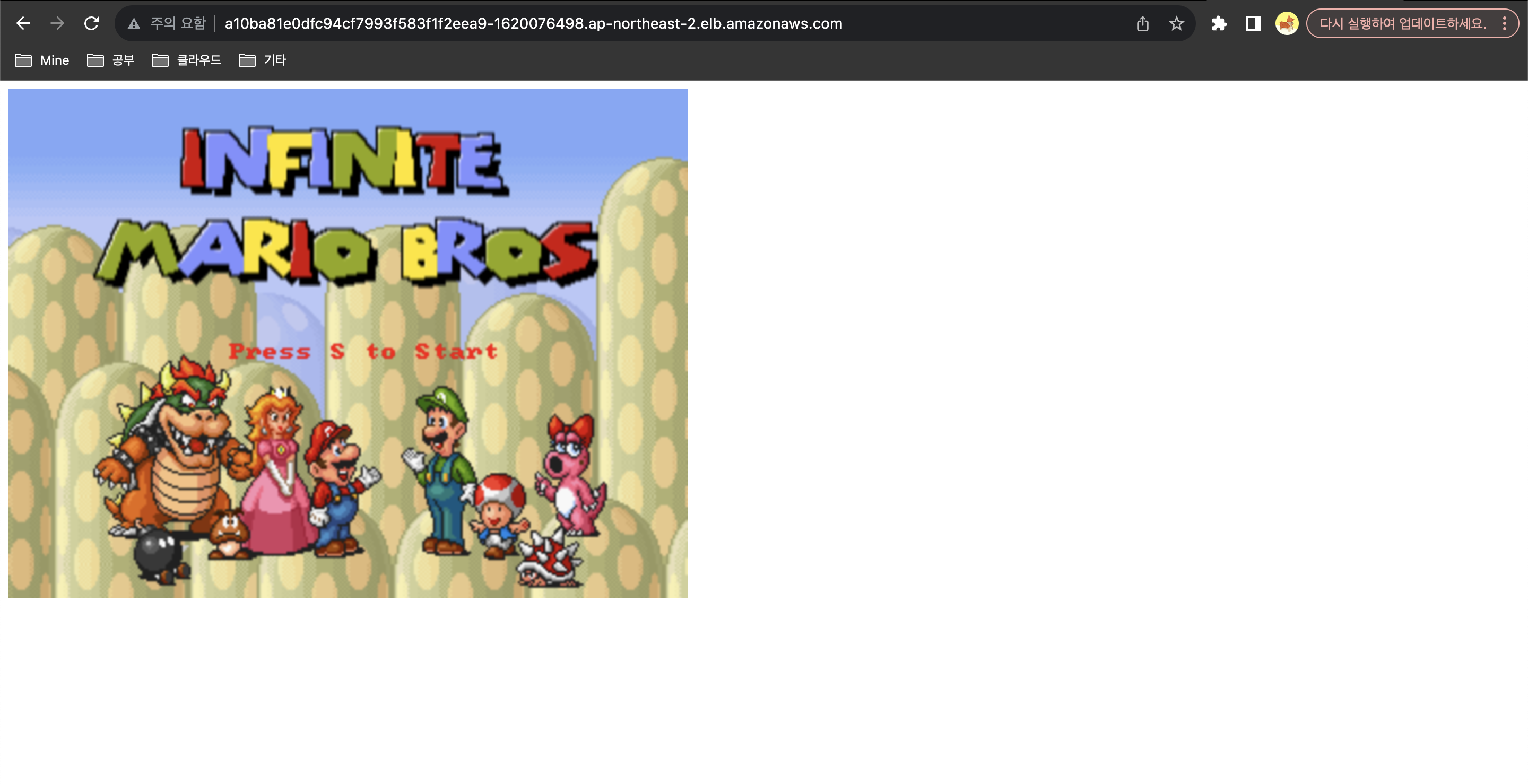

- Deploy a service: the Mario game

# Terminal 1 (monitoring)

watch -d 'kubectl get pod,svc'

# Deploy the Super Mario Deployment

curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/1/mario.yaml

kubectl apply -f mario.yaml

cat mario.yaml | yh

# Verify the deployment: check that the CLB was created

kubectl get deploy,svc,ep mario

# Open the Mario game: browse to the CLB address

kubectl get svc mario -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "Mario URL = http://"$1 }'

# Delete

kubectl delete -f mario.yaml
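Right after `kubectl apply`, the Service may not have a load balancer hostname yet, in which case the jsonpath query can return an empty string and the `awk` step prints nothing. A small sketch of a formatter that makes that case visible; `mario_url` is a hypothetical helper, and the hostname is the one that appears in the sample output of this post.

```shell
# Turn a load balancer hostname into the URL line printed in the text.
# An empty hostname means the Service has no ingress entry yet.
mario_url() {
  if [ -z "$1" ]; then
    echo "Mario URL = (pending: no load balancer hostname yet)"
  else
    echo "Mario URL = http://$1"
  fi
}

mario_url "a10ba81e0dfc94cf7993f583f1f2eea9-1620076498.ap-northeast-2.elb.amazonaws.com"
mario_url ""
```

On the bastion host, the argument would come from the jsonpath query, for example `mario_url "$(kubectl get svc mario -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"`.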

(leeeuijoo@myeks:default) [root@myeks-host ~]# k get all

NAME READY STATUS RESTARTS AGE

pod/mario-7f7ddc97f4-fctzk 1/1 Running 0 55s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 103m

service/mario LoadBalancer 10.100.91.118 a10ba81e0dfc94cf7993f583f1f2eea9-1620076498.ap-northeast-2.elb.amazonaws.com 80:32126/TCP 55s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/mario 1/1 1 1 55s

NAME DESIRED CURRENT READY AGE

replicaset.apps/mario-7f7ddc97f4 1 1 1 55s- CLB 로 접속

- EXTERNAL-IP 로 접속

5. 노드에 배포된 컨테이너 정보 확인

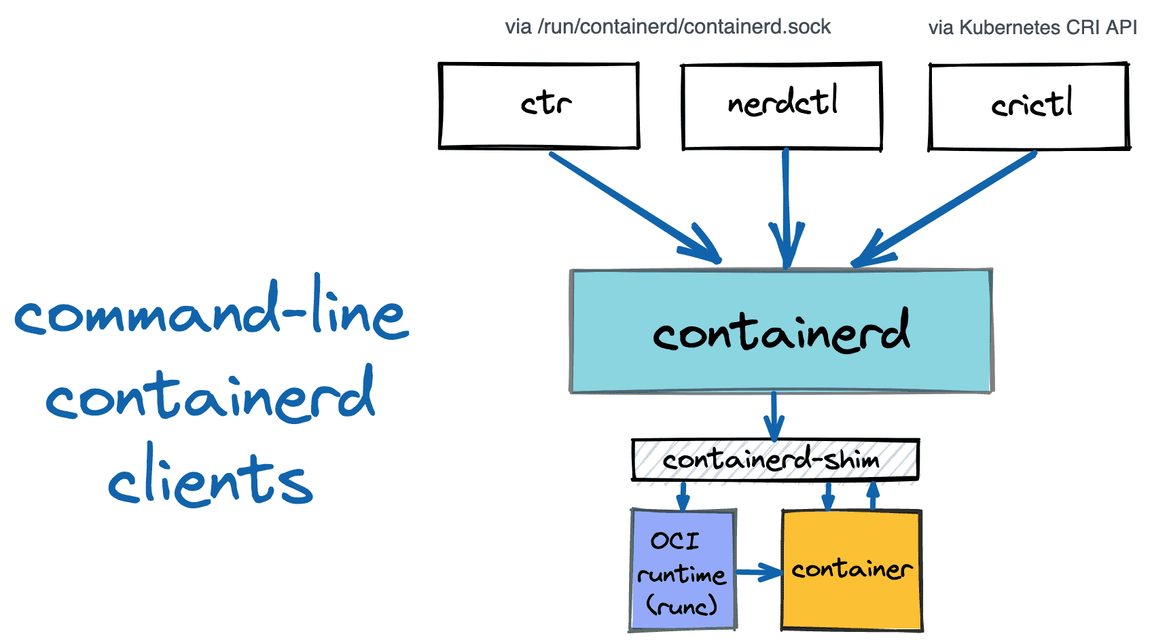

Containerd clients 3종 : ctr, nerdctl, crictl

# ctr 버전 확인

ssh ec2-user@$N1 ctr --version

ctr github.com/containerd/containerd v1.7.11

# ctr help

ssh ec2-user@$N1 ctr

NAME:

ctr -

__

_____/ /______

/ ___/ __/ ___/

/ /__/ /_/ /

\___/\__/_/

containerd CLI

...

DESCRIPTION:

ctr is an unsupported debug and administrative client for interacting with the containerd daemon.

Because it is unsupported, the commands, options, and operations are not guaranteed to be backward compatible or stable from release to release of the containerd project.

COMMANDS:

plugins, plugin provides information about containerd plugins

version print the client and server versions

containers, c, container manage containers

content manage content

events, event display containerd events

images, image, i manage images

leases manage leases

namespaces, namespace, ns manage namespaces

pprof provide golang pprof outputs for containerd

run run a container

snapshots, snapshot manage snapshots

tasks, t, task manage tasks

install install a new package

oci OCI tools

shim interact with a shim directly

help, h Shows a list of commands or help for one command

# List namespaces

ssh ec2-user@$N1 sudo ctr ns list

NAME LABELS

k8s.io

# List containers

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ctr -n k8s.io container list; echo; done

CONTAINER IMAGE RUNTIME

28b6a15c475e32cd8777c1963ba684745573d0b6053f80d2d37add0ae841eb45 602401143452.dkr.ecr-fips.us-east-1.amazonaws.com/eks/pause:3.5 io.containerd.runc.v2

4f266ebcee45b133c527df96499e01ec0c020ea72785eb10ef63b20b5826cf7c 602401143452.dkr.ecr-fips.us-east-1.amazonaws.com/eks/pause:3.5 io.containerd.runc.v2

...

# List container images

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ctr -n k8s.io image list --quiet; echo; done

...

# List tasks

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ctr -n k8s.io task list; echo; done

...

## Example) Inspect the process behind a task PID (3706)

ssh ec2-user@$N1 sudo ps -c 3706

PID CLS PRI TTY STAT TIME COMMAND

3099 TS 19 ? Ssl 0:01 kube-proxy --v=2 --config=/var/lib/kube-proxy-config/config --hostname-override=ip-192-168-1-229.ap-northeast-2.compute.internal

- Hands-on

(leeeuijoo@myeks:default) [root@myeks-host ~]# ssh ec2-user@$N1 ctr --version

ctr github.com/containerd/containerd 1.7.11

(leeeuijoo@myeks:default) [root@myeks-host ~]# ssh ec2-user@$N2 ctr --version

ctr github.com/containerd/containerd 1.7.11

(leeeuijoo@myeks:default) [root@myeks-host ~]# ssh ec2-user@$N1 ctr

NAME:

ctr -

__

_____/ /______

/ ___/ __/ ___/

/ /__/ /_/ /

\___/\__/_/

containerd CLI

USAGE:

ctr [global options] command [command options] [arguments...]

VERSION:

1.7.11

DESCRIPTION:

ctr is an unsupported debug and administrative client for interacting

with the containerd daemon. Because it is unsupported, the commands,

options, and operations are not guaranteed to be backward compatible or

stable from release to release of the containerd project.

COMMANDS:

plugins, plugin Provides information about containerd plugins

version Print the client and server versions

containers, c, container Manage containers

content Manage content

events, event Display containerd events

images, image, i Manage images

leases Manage leases

namespaces, namespace, ns Manage namespaces

pprof Provide golang pprof outputs for containerd

run Run a container

snapshots, snapshot Manage snapshots

tasks, t, task Manage tasks

install Install a new package

oci OCI tools

sandboxes, sandbox, sb, s Manage sandboxes

info Print the server info

deprecations

shim Interact with a shim directly

help, h Shows a list of commands or help for one command

GLOBAL OPTIONS:

--debug Enable debug output in logs

--address value, -a value Address for containerd's GRPC server (default: "/run/containerd/containerd.sock") [$CONTAINERD_ADDRESS]

--timeout value Total timeout for ctr commands (default: 0s)

--connect-timeout value Timeout for connecting to containerd (default: 0s)

--namespace value, -n value Namespace to use with commands (default: "default") [$CONTAINERD_NAMESPACE]

--help, -h show help

--version, -v print the version

(leeeuijoo@myeks:default) [root@myeks-host ~]# ssh ec2-user@$N1 sudo ctr ns list

NAME LABELS

k8s.io

(leeeuijoo@myeks:default) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ctr -n k8s.io container list; echo; done

>> node 192.168.1.198 <<

CONTAINER IMAGE RUNTIME

2578a3942962c2b83fcc170edbf605b316909516021f42a49072881caabc4646 066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni:v1.15.1 io.containerd.runc.v2

27912fcde3f609df9f98596c9dacfa27a17fdb2909fc945639a1fbfb44a1574e 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/pause:3.5 io.containerd.runc.v2

29d44690d52de745ec54811de08a084b2c860fc3937f77b9137fae8995e78127 066635153087.dkr.ecr.il-central-1.amazonaws.com/eks/kube-proxy:v1.28.2-minimal-eksbuild.2 io.containerd.runc.v2

2c8a7f89ab7f3647652d90dbc988c74b574e1991e1eea2931fb518221f104502 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/pause:3.5 io.containerd.runc.v2

82e9414af6187100b02651b1a50bf010744d8b9dd961b885dc37131ee6112060 066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni-init:v1.15.1 io.containerd.runc.v2

ab92ded43b42e435c4d696b5aca4ec4b44bab841bde325b57546dfc7e1ec1a34 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon/aws-network-policy-agent:v1.0.4-eksbuild.1 io.containerd.runc.v2

>> node 192.168.2.221 <<

CONTAINER IMAGE RUNTIME

0a1b745e2a9b1ec6ea4f0a9c09b51cf6df84a1f99f7e0533b9b445d7df7e48d3 066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni-init:v1.15.1 io.containerd.runc.v2

2ec889e1f07aff80274bc76e431e87bc28ff9c2313cddf9bcfe01c9ea115a21f 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon/aws-network-policy-agent:v1.0.4-eksbuild.1 io.containerd.runc.v2

6337e8cd15a2e60627d950e8b7172ac94aa20a615927d97a01ee5aba64f85a79 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/coredns:v1.10.1-eksbuild.4 io.containerd.runc.v2

760ba423b3026e37ec30893b66a6634ae2c29a1e8d1a58e9cc19eeb7bcc4a7d5 066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni:v1.15.1 io.containerd.runc.v2

878389e706f329ad82b61933e02ec571066a1c9813b05cf0c291407c5d58ae0f 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/coredns:v1.10.1-eksbuild.4 io.containerd.runc.v2

8d530d660415ac2c5b36e579d36f1a5b60ce679b6a7a3afb67a72c17e2406d8a 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/pause:3.5 io.containerd.runc.v2

9d86f43a60022e5a60eee3218b7dc96844840ba4c56c10570c6ba354b3797059 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/pause:3.5 io.containerd.runc.v2

a4afe7156d5b445cb71cef91514b9516dfe9e97afd4da16cffee13bda0fcd567 066635153087.dkr.ecr.il-central-1.amazonaws.com/eks/kube-proxy:v1.28.2-minimal-eksbuild.2 io.containerd.runc.v2

bcca61055da2a7ab31512308c9b8e39ea22c37234d43b5712f6476efa095e407 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/pause:3.5 io.containerd.runc.v2

d9c513660ccc53ebf6cd88fb974d606ec05ea29ac4deabfbe60c8000d4131167 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/pause:3.5 io.containerd.runc.v2

(leeeuijoo@myeks:default) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ctr -n k8s.io image list --quiet; echo; done

>> node 192.168.1.198 <<

066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni-init:v1.15.1

066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni-init:v1.15.1-eksbuild.1

066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni-init:v1.16.3-eksbuild.2

066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni:v1.15.1

066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni:v1.15.1-eksbuild.1

066635153087.dkr.ecr.il-central-1.amazonaws.com/amazon-k8s-cni:v1.16.3-eksbuild.2

066635153087.dkr.ecr.il-central-1.amazonaws.com/eks/kube-proxy:v1.28.2-minimal-eksbuild.2

066635153087.dkr.ecr.il-central-1.amazonaws.com/eks/kube-proxy:v1.28.6-minimal-eksbuild.2

296578399912.dkr.ecr.ap-southeast-3.amazonaws.com/amazon-k8s-cni-init:v1.15.1

296578399912.dkr.ecr.ap-southeast-3.amazonaws.com/amazon-k8s-cni-init:v1.15.1-eksbuild.1

296578399912.dkr.ecr.ap-southeast-3.amazonaws.com/amazon-k8s-cni-init:v1.16.3-eksbuild.2

296578399912.dkr.ecr.ap-southeast-3.amazonaws.com/amazon-k8s-cni:v1.15.1

296578399912.dkr.ecr.ap-southeast-3.amazonaws.com/amazon-k8s-cni:v1.15.1-eksbuild.1

296578399912.dkr.ecr.ap-southeast-3.amazonaws.com/amazon-k8s-cni:v1.16.3-eksbuild.2

296578399912.dkr.ecr.ap-southeast-3.amazonaws.com/eks/kube-proxy:v1.2

.

.

.

(leeeuijoo@myeks:default) [root@myeks-host ~]# for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo ctr -n k8s.io task list; echo; done

>> node 192.168.1.198 <<

TASK PID STATUS

ab92ded43b42e435c4d696b5aca4ec4b44bab841bde325b57546dfc7e1ec1a34 3551 RUNNING

2578a3942962c2b83fcc170edbf605b316909516021f42a49072881caabc4646 3375 RUNNING

27912fcde3f609df9f98596c9dacfa27a17fdb2909fc945639a1fbfb44a1574e 3067 RUNNING

29d44690d52de745ec54811de08a084b2c860fc3937f77b9137fae8995e78127 3140 RUNNING

2c8a7f89ab7f3647652d90dbc988c74b574e1991e1eea2931fb518221f104502 3059 RUNNING

>> node 192.168.2.221 <<

TASK PID STATUS

a4afe7156d5b445cb71cef91514b9516dfe9e97afd4da16cffee13bda0fcd567 3143 RUNNING

d9c513660ccc53ebf6cd88fb974d606ec05ea29ac4deabfbe60c8000d4131167 3597 RUNNING

8d530d660415ac2c5b36e579d36f1a5b60ce679b6a7a3afb67a72c17e2406d8a 3054 RUNNING

760ba423b3026e37ec30893b66a6634ae2c29a1e8d1a58e9cc19eeb7bcc4a7d5 3377 RUNNING

bcca61055da2a7ab31512308c9b8e39ea22c37234d43b5712f6476efa095e407 3052 RUNNING

9d86f43a60022e5a60eee3218b7dc96844840ba4c56c10570c6ba354b3797059 3613 RUNNING

6337e8cd15a2e60627d950e8b7172ac94aa20a615927d97a01ee5aba64f85a79 3682 RUNNING

878389e706f329ad82b61933e02ec571066a1c9813b05cf0c291407c5d58ae0f 3690 RUNNING

2ec889e1f07aff80274bc76e431e87bc28ff9c2313cddf9bcfe01c9ea115a21f 3801 RUNNING

(leeeuijoo@myeks:default) [root@myeks-host ~]# ssh ec2-user@$N1 sudo ps -c 3551

PID CLS PRI TTY STAT TIME COMMAND

3551 TS 19 ? Ssl 0:02 /controller --enable-ipv6=false --enable-network-policy=false --enable-cloudwatch-logs=false --enable-policy-event-logs=false --metrics-bind-addr=:8162 --health-probe-bind-addr=:8163

(leeeuijoo@myeks:default) [root@myeks-host ~]# ssh ec2-user@$N1 sudo ps -c 3375

PID CLS PRI TTY STAT TIME COMMAND

3375 TS 19 ? Ssl 0:00 /app/aws-vpc-cni

6. Using ECR

# Authenticate to public ECR

aws ecr-public get-login-password --region us-east-1 | docker login --username AWS --password-stdin public.ecr.aws

cat /root/.docker/config.json | jq

# Check basic information about the public registry

aws ecr-public describe-registries --region us-east-1 | jq

# Create a public repo

NICKNAME=<your-nickname>

aws ecr-public create-repository --repository-name $NICKNAME/nginx --region us-east-1

# Check the created public repo

aws ecr-public describe-repositories --region us-east-1 | jq

REPOURI=$(aws ecr-public describe-repositories --region us-east-1 | jq -r .repositories[].repositoryUri)

echo $REPOURI

# Tag the image

docker pull nginx:alpine

docker images

docker tag nginx:alpine $REPOURI:latest

docker images

# Push the image

docker push $REPOURI:latest

# Run a pod

kubectl run mynginx --image $REPOURI

kubectl get pod

kubectl delete pod mynginx

# Delete the public image

aws ecr-public batch-delete-image \

--repository-name $NICKNAME/nginx \

--image-ids imageTag=latest \

--region us-east-1

# 퍼블릭 Repo 삭제

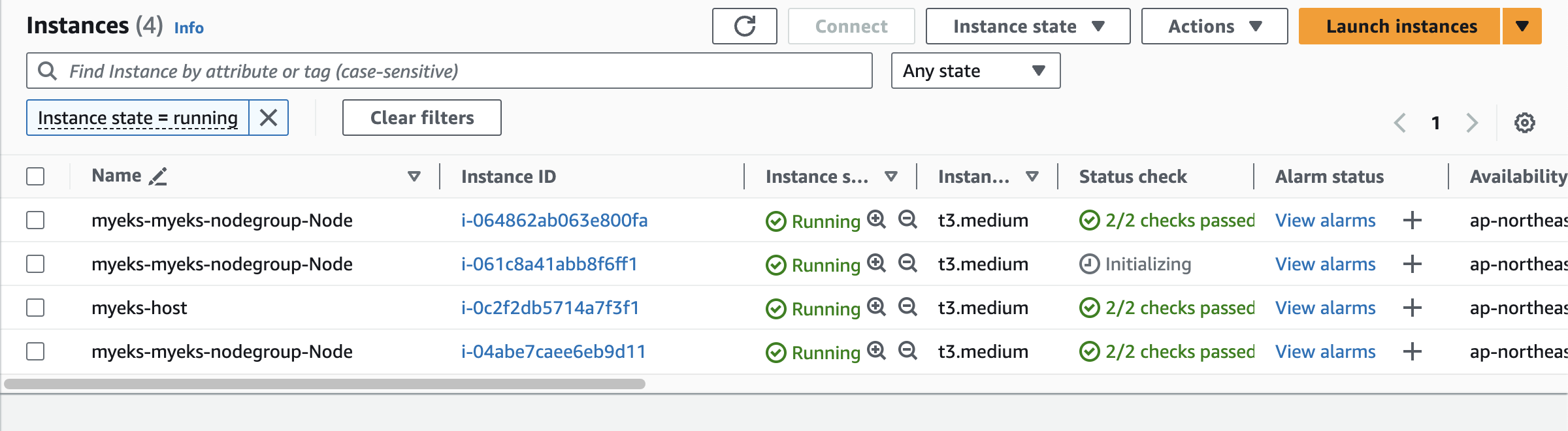

aws ecr-public delete-repository --repository-name $NICKNAME/nginx --force --region us-east-17. 관리형 노드 추가 및 삭제

# 옵션 [터미널1] EC2 생성 모니터링

#aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

while true; do aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output text ; echo "------------------------------" ; sleep 1; done

# Check the EKS node group

eksctl get nodegroup --cluster $CLUSTER_NAME --name $CLUSTER_NAME-nodegroup

# Scale nodes from 2 to 3

eksctl scale nodegroup --cluster $CLUSTER_NAME --name $CLUSTER_NAME-nodegroup --nodes 3 --nodes-min 3 --nodes-max 6

# Check the nodes

kubectl get nodes -o wide

kubectl get nodes -l eks.amazonaws.com/nodegroup=$CLUSTER_NAME-nodegroup
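Both scaling commands in this section pass a minimum, a maximum, and a desired size, and the desired size has to lie between the other two. A minimal sketch of a guard that builds the `--scaling-config` value only when that holds; `scaling_config` is a hypothetical helper, not an AWS CLI feature.

```shell
# Build the --scaling-config value only if min <= desired <= max holds.
# Arguments: $1 = min, $2 = max, $3 = desired.
scaling_config() {
  if [ "$1" -le "$3" ] && [ "$3" -le "$2" ]; then
    echo "minSize=$1,maxSize=$2,desiredSize=$3"
  else
    echo "invalid scaling config: need min <= desired <= max" >&2
    return 1
  fi
}

scaling_config 2 2 2   # the scale-down values used in this section
scaling_config 3 6 3   # the scale-up values (min 3, max 6, desired 3)
```

The printed string can be passed as is, for example `--scaling-config "$(scaling_config 2 2 2)"`.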

# Scale nodes from 3 to 2: takes some time to apply

aws eks update-nodegroup-config --cluster-name $CLUSTER_NAME --nodegroup-name $CLUSTER_NAME-nodegroup --scaling-config minSize=2,maxSize=2,desiredSize=2

- Hands-on

(leeeuijoo@myeks:default) [root@myeks-host ~]# eksctl get nodegroup --cluster $CLUSTER_NAME --name $CLUSTER_NAME-nodegroup

CLUSTER NODEGROUP STATUS CREATED MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID ASG NAME TYPE

myeks myeks-nodegroup ACTIVE 2024-03-07T12:13:49Z 2 2 2 t3.medium AL2_x86_64 eks-myeks-nodegroup-20c70c6a-b895-7ce2-2393-8cf74b6037b5 managed

(leeeuijoo@myeks:default) [root@myeks-host ~]# eksctl scale nodegroup --cluster $CLUSTER_NAME --name $CLUSTER_NAME-nodegroup --nodes 3 --nodes-min 3 --nodes-max 6

2024-03-07 23:07:56 [ℹ] scaling nodegroup "myeks-nodegroup" in cluster myeks

2024-03-07 23:07:57 [ℹ] initiated scaling of nodegroup

2024-03-07 23:07:57 [ℹ] to see the status of the scaling run `eksctl get nodegroup --cluster myeks --region ap-northeast-2 --name myeks-nodegroup`

(leeeuijoo@myeks:default) [root@myeks-host ~]# k get nodes

NAME STATUS ROLES AGE VERSION

ip-192-168-1-198.ap-northeast-2.compute.internal Ready <none> 114m v1.28.5-eks-5e0fdde

ip-192-168-2-221.ap-northeast-2.compute.internal Ready <none> 114m v1.28.5-eks-5e0fdde

ip-192-168-2-253.ap-northeast-2.compute.internal Ready <none> 28s v1.28.5-eks-5e0fdde

- Node count increased to 3

- Scale down

(leeeuijoo@myeks:default) [root@myeks-host ~]# aws eks update-nodegroup-config --cluster-name $CLUSTER_NAME --nodegroup-name $CLUSTER_NAME-nodegroup --scaling-config minSize=2,maxSize=2,desiredSize=2

{

"update": {

"id": "95599237-cc6e-35de-ac6a-1ac65b54f480",

"status": "InProgress",

"type": "ConfigUpdate",

"params": [

{

"type": "MinSize",

"value": "2"

},

{

"type": "MaxSize",

"value": "2"

},

{

"type": "DesiredSize",

"value": "2"

}

],

"createdAt": "2024-03-07T23:10:08.153000+09:00",

"errors": []

}

}

(leeeuijoo@myeks:default) [root@myeks-host ~]# k get nodes

NAME STATUS ROLES AGE VERSION

ip-192-168-1-198.ap-northeast-2.compute.internal Ready <none> 117m v1.28.5-eks-5e0fdde

ip-192-168-2-221.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 117m v1.28.5-eks-5e0fdde

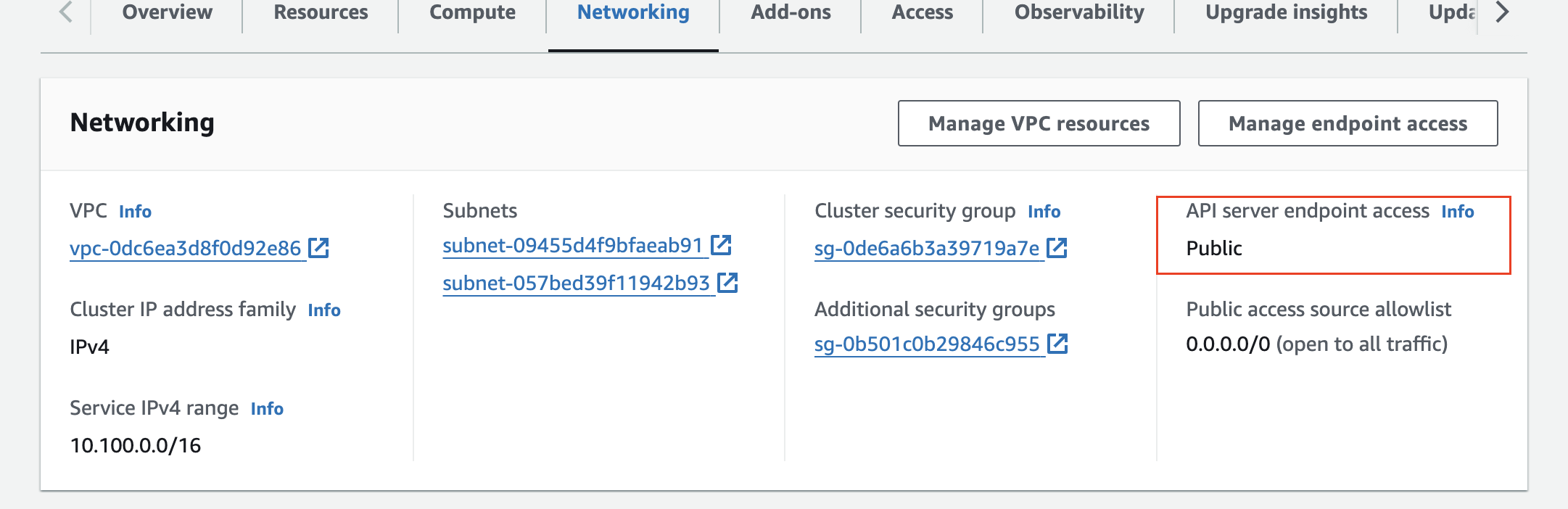

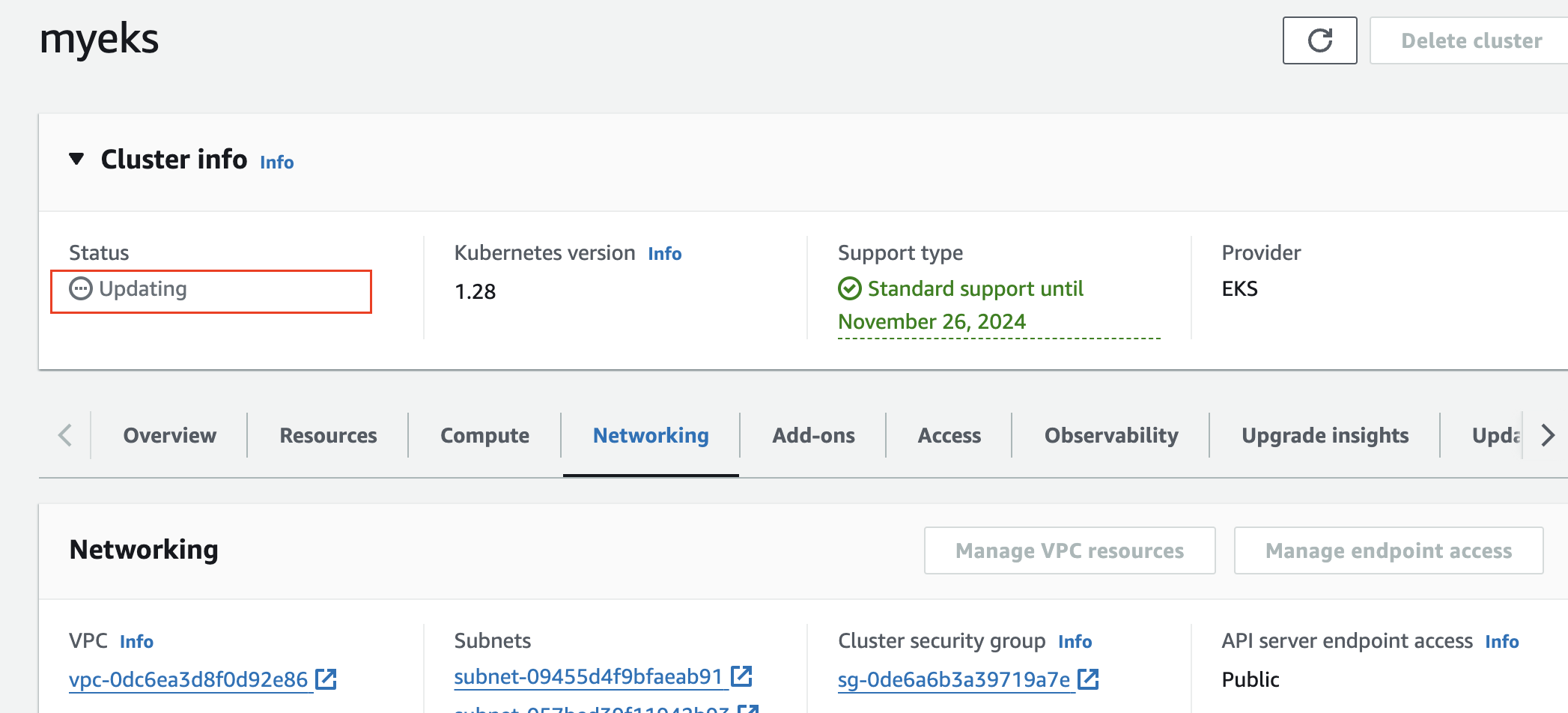

ip-192-168-2-253.ap-northeast-2.compute.internal Ready <none> 3m25s v1.28.5-eks-5e0fdde8. EKS Cluster Endpoint 를 Public(IP제한)+Private 로 변경

- Current state

# [Monitoring 1] Watch for the change after applying the setting

APIDNS=$(aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint | cut -d '/' -f 3)

dig +short $APIDNS

while true; do dig +short $APIDNS ; echo "------------------------------" ; date; sleep 1; done

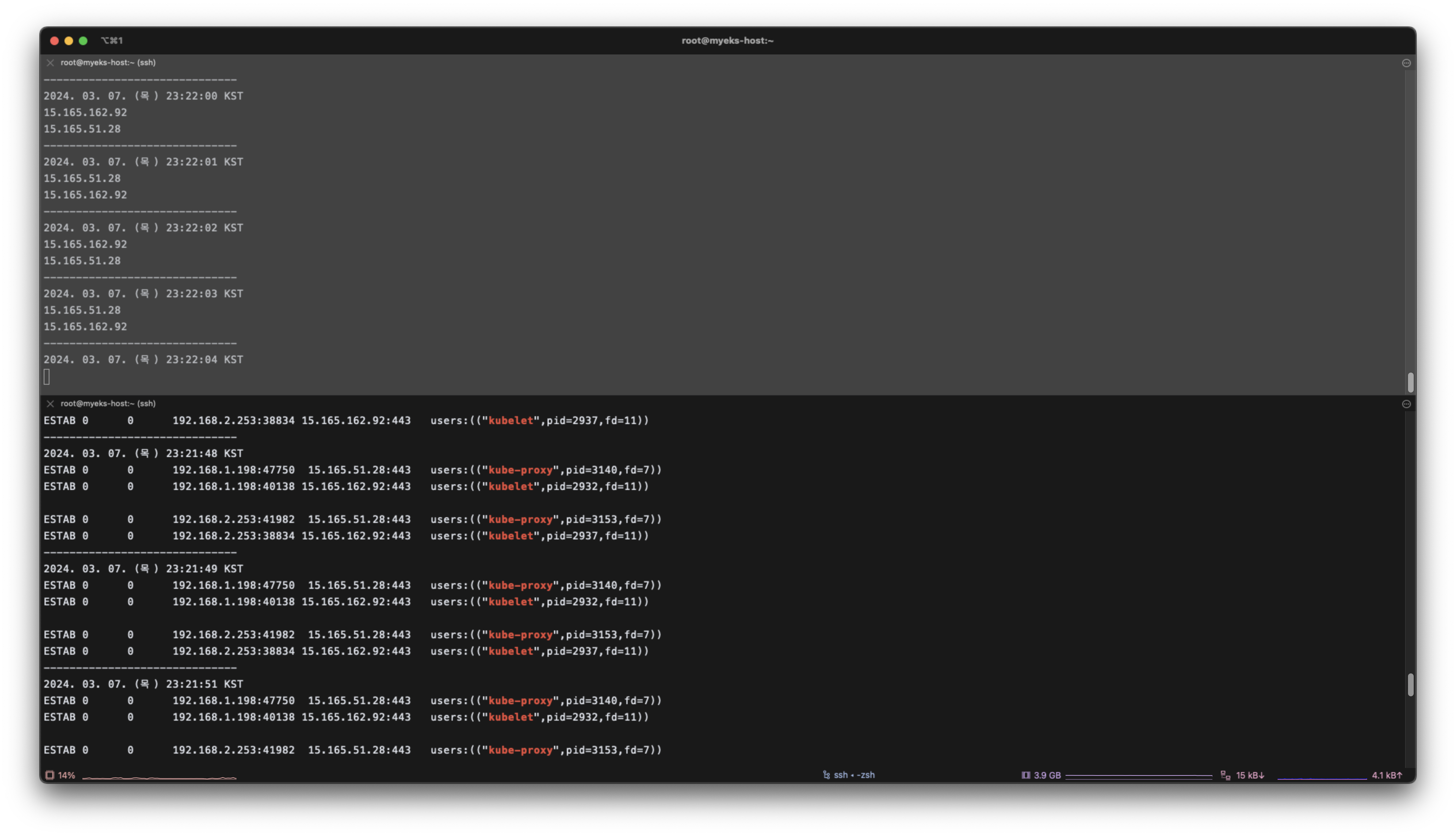
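The monitoring loop above prints raw IP addresses, and the point of this step is to see them switch from public to private once the endpoint change takes effect. A small helper can label each address; this is a sketch that assumes IPv4 addresses only and uses the RFC 1918 ranges as the definition of "private". The function names are hypothetical.

```shell
# Succeed if the IPv4 address falls in an RFC 1918 private range
# (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16).
is_private_ip() {
  case "$1" in
    10.*|192.168.*) return 0 ;;
    172.1[6-9].*|172.2[0-9].*|172.3[01].*) return 0 ;;
    *) return 1 ;;
  esac
}

# Label each address read from stdin, one per line as printed by `dig +short`.
classify_endpoint_ips() {
  while read -r ip; do
    [ -z "$ip" ] && continue
    if is_private_ip "$ip"; then
      echo "$ip private"
    else
      echo "$ip public"
    fi
  done
}
```

Usage would be `dig +short $APIDNS | classify_endpoint_ips`, either once or inside the `while true` loop.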

# [Monitoring 2] Check the nodes' connections to the control plane

N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath='{.items[0].status.addresses[0].address}')

N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath='{.items[0].status.addresses[0].address}')

while true; do ssh ec2-user@$N1 sudo ss -tnp | egrep 'kubelet|kube-proxy' ; echo ; ssh ec2-user@$N2 sudo ss -tnp | egrep 'kubelet|kube-proxy' ; echo "------------------------------" ; date; sleep 1; done

# Change to Public (IP-restricted) + Private: takes effect about 8 minutes after the setting is applied

aws eks update-cluster-config --region $AWS_DEFAULT_REGION --name $CLUSTER_NAME --resources-vpc-config endpointPublicAccess=true,publicAccessCidrs="$(curl -s ipinfo.io/ip)/32",endpointPrivateAccess=true

- Output

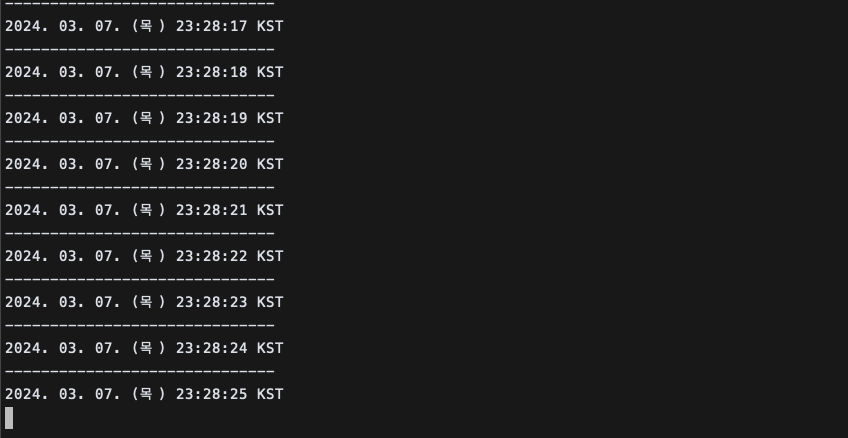

[shell 1]

2024. 03. 07. (목) 23:22:29 KST

15.165.51.28

15.165.162.92

------------------------------

2024. 03. 07. (목) 23:22:30 KST

15.165.162.92

15.165.51.28

------------------------------

2024. 03. 07. (목) 23:22:31 KST

15.165.51.28

15.165.162.92

------------------------------

2024. 03. 07. (목) 23:22:32 KST

15.165.162.92

15.165.51.28

------------------------------

2024. 03. 07. (목) 23:22:33 KST

...

[shell 2]

------------------------------

2024. 03. 07. (목) 23:21:49 KST

ESTAB 0 0 192.168.1.198:47750 15.165.51.28:443 users:(("kube-proxy",pid=3140,fd=7))

ESTAB 0 0 192.168.1.198:40138 15.165.162.92:443 users:(("kubelet",pid=2932,fd=11))

ESTAB 0 0 192.168.2.253:41982 15.165.51.28:443 users:(("kube-proxy",pid=3153,fd=7))

ESTAB 0 0 192.168.2.253:38834 15.165.162.92:443 users:(("kubelet",pid=2937,fd=11))

------------------------------

2024. 03. 07. (목) 23:21:51 KST

ESTAB 0 0 192.168.1.198:47750 15.165.51.28:443 users:(("kube-proxy",pid=3140,fd=7))

ESTAB 0 0 192.168.1.198:40138 15.165.162.92:443 users:(("kubelet",pid=2932,fd=11))

ESTAB 0 0 192.168.2.253:41982 15.165.51.28:443 users:(("kube-proxy",pid=3153,fd=7))

...
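The [shell 2] samples above repeat the same connections every second, which makes a change of peer address hard to spot. As a sketch, the `ss -tnp` lines can be reduced to unique "process peer-IP" pairs. The function name is hypothetical, and the parsing assumes IPv4 peers and the column layout shown above.

```shell
# Reduce `ss -tnp` lines (read from stdin) to unique "process peer-ip" pairs.
# Assumes the layout shown above: field 5 is peer ip:port, field 6 is users:(("name",...)).
summarize_peers() {
  awk '$1 == "ESTAB" {
    split($5, peer, ":")    # peer[1] is the peer IP (IPv4 only)
    split($6, proc, "\"")   # proc[2] is the process name
    print proc[2], peer[1]
  }' | LC_ALL=C sort -u
}
```

For instance, `ssh ec2-user@$N1 sudo ss -tnp | egrep 'kubelet|kube-proxy' | summarize_peers` would print one line per process and control plane address.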

- Update in progress

- The output can no longer be retrieved

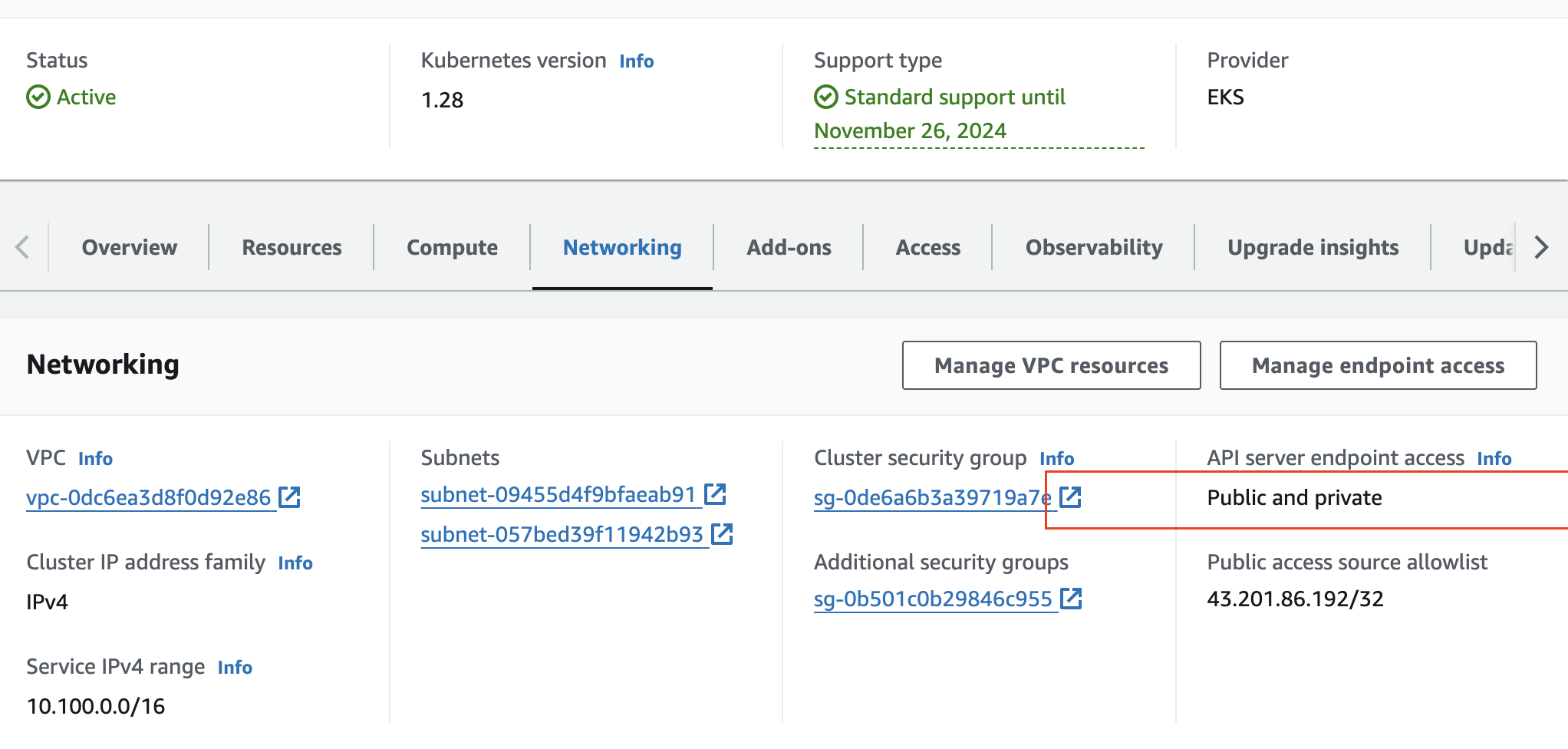

- Update complete

- Verify with kubectl

# Verify that kubectl works

kubectl get node -v=6

kubectl cluster-info

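ss -tnp | grep kubectl # Check from a new terminal >> inside the VPC where EKS runs, querying the Cluster Endpoint domain returns private IPs

# If the dig lookup below does not work, reboot the myeks host EC2 instance and run it again

dig +short $APIDNS

# Look up the EKS control plane security group ID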

aws ec2 describe-security-groups --filters "Name=group-name,Values=*ControlPlaneSecurityGroup*" --query "SecurityGroups[*].[GroupId]" --output text

CPSGID=$(aws ec2 describe-security-groups --filters "Name=group-name,Values=*ControlPlaneSecurityGroup*" --query "SecurityGroups[*].[GroupId]" --output text)

echo $CPSGID

# Add an ingress rule to the control plane security group ($CPSGID) so that the eksctl-host (192.168.1.100) can connect

aws ec2 authorize-security-group-ingress --group-id $CPSGID --protocol '-1' --cidr 192.168.1.100/32
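Both the `publicAccessCidrs` value and the security group rule above take a `/32` CIDR. When the address comes from a command substitution such as `$(curl -s ipinfo.io/ip)`, a failed lookup would silently produce an invalid value like `/32`. The guard below is a sketch of one way to catch that; `to_cidr32` is a hypothetical helper, not an AWS CLI feature.

```shell
# Print "<ip>/32" if the argument is a dotted-quad IPv4 address; fail otherwise.
to_cidr32() {
  ip="$1"
  # Shape check: four groups of 1-3 digits separated by dots.
  echo "$ip" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$' || return 1
  # Range check: every octet must be at most 255.
  old_ifs="$IFS"
  IFS=.
  set -- $ip
  IFS="$old_ifs"
  for octet in "$@"; do
    [ "$octet" -le 255 ] || return 1
  done
  echo "$ip/32"
}

# Example use (not executed here):
#   MYCIDR=$(to_cidr32 "$(curl -s ipinfo.io/ip)") || echo "could not determine public IP"
```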

# Verify that kubectl works

kubectl get node -v=6

kubectl cluster-info

- Before adding the rule

(leeeuijoo@myeks:default) [root@myeks-host ~]# kubectl get node -v=6

I0307 23:32:08.410305 23571 loader.go:395] Config loaded from file: /root/.kube/config

I0307 23:32:09.181331 23571 round_trippers.go:553] GET https://7A25B757005DD8204B9EC78BD35C6DDE.gr7.ap-northeast-2.eks.amazonaws.com/api/v1/nodes?limit=500 in 753 milliseconds

I0307 23:32:09.181464 23571 helpers.go:264] Connection error: Get https://7A25B757005DD8204B9EC78BD35C6DDE.gr7.ap-northeast-2.eks.amazonaws.com/api/v1/nodes?limit=500: dial tcp: lookup 7A25B757005DD8204B9EC78BD35C6DDE.gr7.ap-northeast-2.eks.amazonaws.com on 192.168.0.2:53: no such host

Unable to connect to the server: dial tcp: lookup 7A25B757005DD8204B9EC78BD35C6DDE.gr7.ap-northeast-2.eks.amazonaws.com on 192.168.0.2:53: no such host

- After adding the rule

(leeeuijoo@myeks:default) [root@myeks-host ~]# kubectl get node -v=6

I0307 23:33:41.839993 23732 loader.go:395] Config loaded from file: /root/.kube/config

I0307 23:33:42.714388 23732 round_trippers.go:553] GET https://7A25B757005DD8204B9EC78BD35C6DDE.gr7.ap-northeast-2.eks.amazonaws.com/api/v1/nodes?limit=500 200 OK in 866 milliseconds

NAME STATUS ROLES AGE VERSION

ip-192-168-1-198.ap-northeast-2.compute.internal Ready <none> 138m v1.28.5-eks-5e0fdde

ip-192-168-2-253.ap-northeast-2.compute.internal Ready <none> 24m v1.28.5-eks-5e0fdde

- Check from the nodes

# Monitoring: watch the TCP peer information change

while true; do ssh ec2-user@$N1 sudo ss -tnp | egrep 'kubelet|kube-proxy' ; echo ; ssh ec2-user@$N2 sudo ss -tnp | egrep 'kubelet|kube-proxy' ; echo "------------------------------" ; date; sleep 1; done

# kube-proxy rollout

kubectl rollout restart ds/kube-proxy -n kube-system

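# For kubelet, apply the change by running systemctl restart kubelet on each node

for i in $N1 $N2; do echo ">> node $i <<"; ssh ec2-user@$i sudo systemctl restart kubelet; echo; done

- Check the EKS API endpoint from your own PC

- From outside the VPC, querying the Cluster Endpoint domain returns public IPs. The output below was captured on myeks-host inside the VPC, so it shows private IPs.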

(leeeuijoo@myeks:default) [root@myeks-host ~]# dig +short $APIDNS

192.168.2.197

192.168.1.225

9. Delete the lab resources

- Delete the Amazon EKS cluster (takes about 10 minutes):

eksctl delete cluster --name $CLUSTER_NAME

- (After confirming the cluster has been deleted) Delete the AWS CloudFormation stack:

aws cloudformation delete-stack --stack-name myeks
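The two deletion steps above have to run in order, and `delete-stack` returns before the stack is actually gone. As a hedged sketch, they can be wrapped in one function that waits at each stage. The `run` and `cleanup_lab` names and the `DRY_RUN` switch are assumptions added for illustration; `eksctl delete cluster --wait` and `aws cloudformation wait stack-delete-complete` are existing CLI options.

```shell
# Run a command, or only print it when DRY_RUN=1 (assumed convention for previewing).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Delete the EKS cluster first, then the CloudFormation stack.
# $1 = cluster name, $2 = CloudFormation stack name.
cleanup_lab() {
  cluster="$1"
  stack="$2"
  # --wait blocks until the cluster's resources are deleted.
  run eksctl delete cluster --name "$cluster" --wait || return 1
  run aws cloudformation delete-stack --stack-name "$stack" || return 1
  # Block until CloudFormation reports the stack as deleted.
  run aws cloudformation wait stack-delete-complete --stack-name "$stack"
}
```

Running `DRY_RUN=1 cleanup_lab $CLUSTER_NAME myeks` first prints the three commands without executing them; dropping `DRY_RUN=1` performs the deletion.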