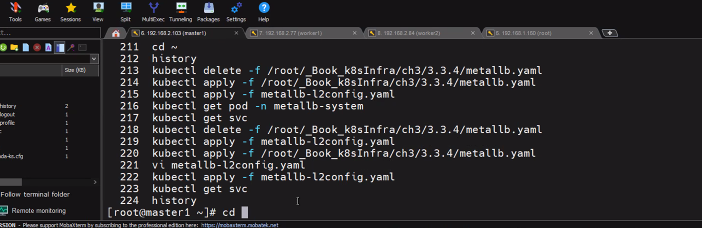

Remove everything related to MetalLB

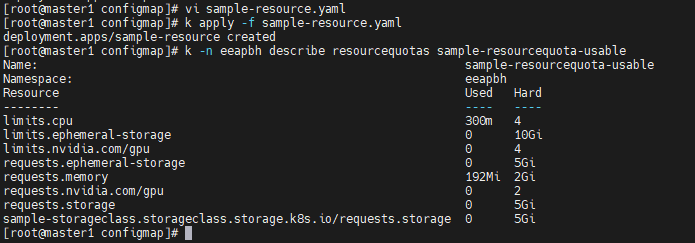

kubectl delete -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

vi metallb-l2config.yaml

kubectl apply -f metallb-l2config.yaml

k get pods -n metallb-system -o wide

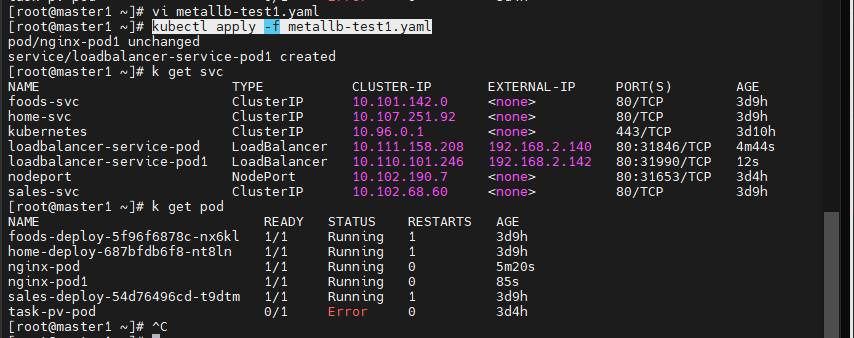
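
The notes edit metallb-l2config.yaml without showing its contents. For reference, here is a minimal sketch of what such a file tends to look like, assuming the metallb.yaml in use is a pre-0.13 MetalLB release that reads its configuration from a ConfigMap named `config` in the `metallb-system` namespace. The pool name and the address range are placeholders, not values taken from these notes.

```yaml
# Hypothetical metallb-l2config.yaml (ConfigMap-style config, MetalLB < 0.13)
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: example-pool              # placeholder pool name
      protocol: layer2
      addresses:
      - 192.168.1.240-192.168.1.250   # placeholder range; pick free IPs on the node subnet
```

MetalLB 0.13 and later replaced this ConfigMap with IPAddressPool and L2Advertisement custom resources, so the sketch only applies to the older layout.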

cp metallb-test.yaml metallb-test1.yaml

vi metallb-test1.yaml

kubectl apply -f metallb-test1.yaml

Volume

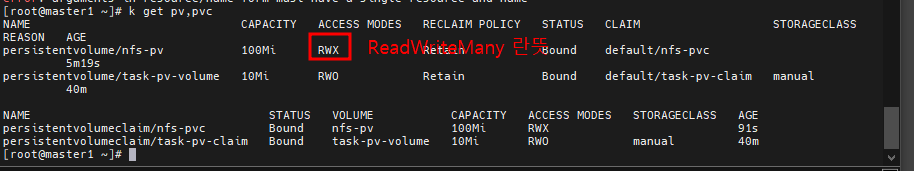

- pv/pvc

# pv-pvc-pod.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: task-pv-volume
      labels:
        type: local
    spec:
      storageClassName: manual
      capacity:
        storage: 10Mi
      accessModes:
        - ReadWriteOnce
      hostPath:
        path: "/mnt/data"
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: task-pv-claim
    spec:
      storageClassName: manual
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Mi
      selector:
        matchLabels:
          type: local
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: task-pv-pod
      labels:
        app: task-pv-pod
    spec:
      volumes:
        - name: task-pv-storage
          persistentVolumeClaim:
            claimName: task-pv-claim
      containers:
        - name: task-pv-container
          image: 192.168.1.156:5000/nginx:latest
          ports:
            - containerPort: 80
              name: "http-server"
          volumeMounts:
            - mountPath: "/usr/share/nginx/html"
              name: task-pv-storage

# k apply -f pv-pvc-pod.yaml

# k get pod -o wide

# k get po --show-labels

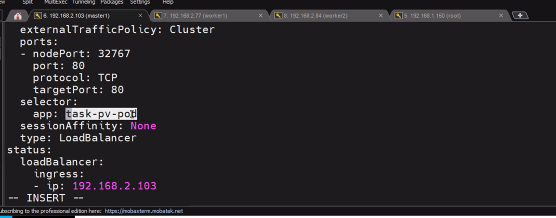

# k edit svc loadbalancer-service-pod

worker1

Copy aws.tar over, then extract it:

tar xvf aws.tar -C /mnt/data/

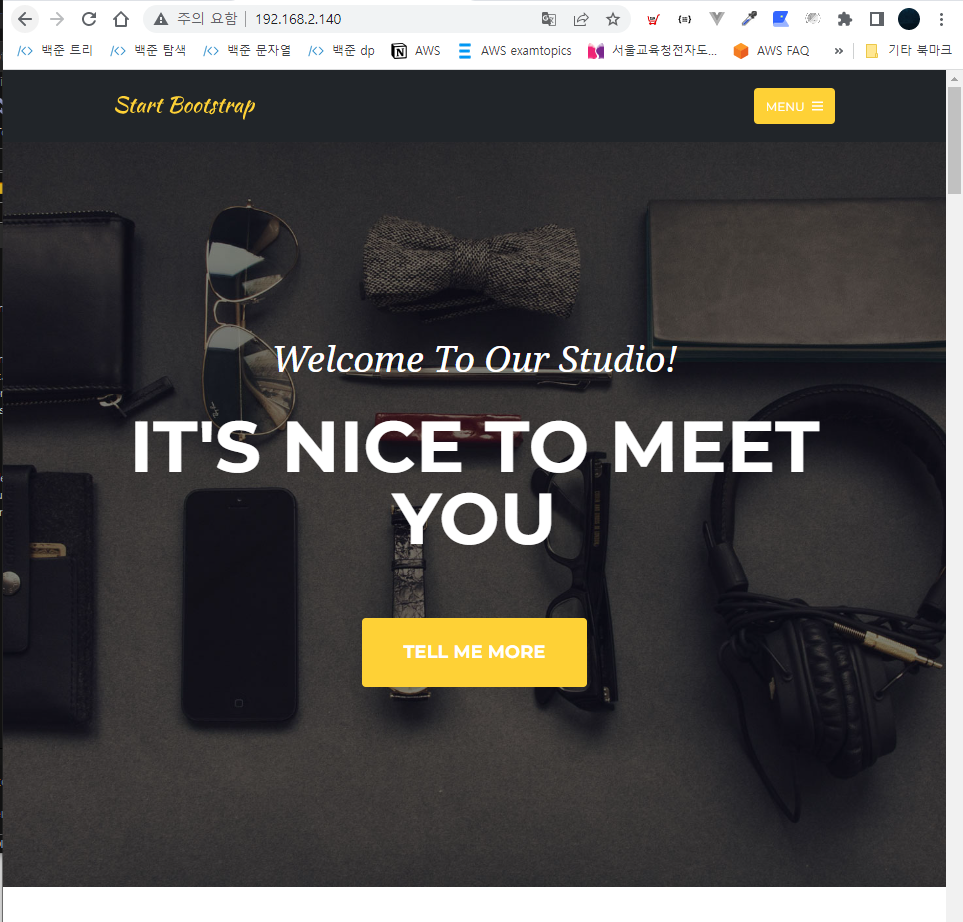

- Now the loadbalancer-service-pod IP, which returned 403 earlier, serves the web page.

* rm -rf /mnt/data

* kubectl apply -f .

* echo "HELLO" > /mnt/data/index.html

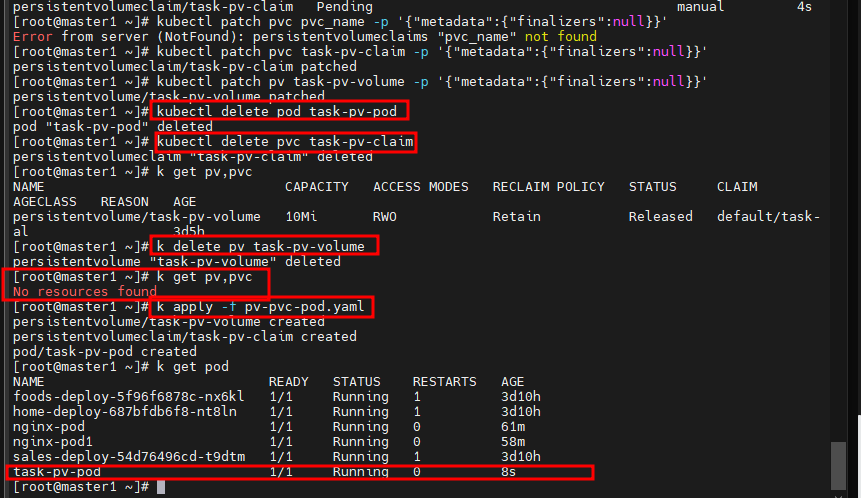

* kubectl delete pod task-pv-pod

* kubectl delete pvc task-pv-claim

# kubectl patch pvc pvc_name -p '{"metadata":{"finalizers":null}}'

# kubectl patch pv pv_name -p '{"metadata":{"finalizers":null}}'

* Retain: to reuse the same storage asset, create a new PersistentVolume with the same storage asset definition.
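
To make the Retain note concrete: with the Retain policy, deleting the claim leaves the PV in the `Released` state with its data intact, and it is not bound again automatically. Below is a hedged sketch of recreating the hostPath PV from this section after the old PV object has been deleted. The `persistentVolumeReclaimPolicy` line is an addition for clarity; the earlier manifest does not set it, and manually created PVs default to Retain anyway.

```yaml
# Same storage asset definition as task-pv-volume, recreated after
# `kubectl delete pv task-pv-volume` so that a new claim can bind to it.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  persistentVolumeReclaimPolicy: Retain   # keep the data when the claim is deleted
  capacity:
    storage: 10Mi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/data"
```

The files under /mnt/data on the node are untouched by this; only the PV object is replaced.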

nfs

# yum install -y nfs-utils.x86_64

# mkdir /nfs_shared

# chmod 777 /nfs_shared/

# echo '/nfs_shared 192.168.0.0/21(rw,sync,no_root_squash)' >> /etc/exports

# systemctl enable --now nfs

# systemctl stop firewalld

# mkdir volume && cd $_

# vi nfs-pv.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv
    spec:
      capacity:
        storage: 100Mi
      accessModes:
        - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 192.168.2.140
        path: /nfs_shared

# kubectl apply -f nfs-pv.yaml

# kubectl get pv

# vi nfs-pvc.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-pvc
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 10Mi

# kubectl apply -f nfs-pvc.yaml

# kubectl get pvc

# kubectl get pv
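
If the claim stays `Pending` here, one possible cause is a default StorageClass in the cluster, which is applied to claims that omit `storageClassName`. The sketch below pins the same claim to the pre-created volume under that assumption. The `storageClassName: ""` and `volumeName` fields are additions that do not appear in these notes.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  storageClassName: ""   # empty string: do not fall back to a default StorageClass
  volumeName: nfs-pv     # bind to this specific PersistentVolume
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Mi
```

When no default StorageClass exists, the shorter claim above binds to nfs-pv without these fields.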

# vi nfs-pvc-deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-pvc-deploy
    spec:
      replicas: 4
      selector:
        matchLabels:
          app: nfs-pvc-deploy
      template:
        metadata:
          labels:
            app: nfs-pvc-deploy
        spec:
          containers:
            - name: nginx
              image: nginx
              volumeMounts:
                - name: nfs-vol
                  mountPath: /usr/share/nginx/html
          volumes:
            - name: nfs-vol
              persistentVolumeClaim:
                claimName: nfs-pvc

# kubectl apply -f nfs-pvc-deploy.yaml

# kubectl get pod

# kubectl exec -it nfs-pvc-deploy-76bf944dd5-6j9gf -- /bin/bash

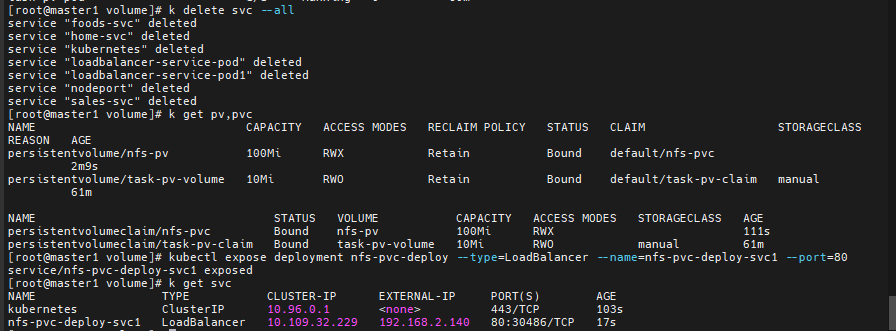

# kubectl expose deployment nfs-pvc-deploy --type=LoadBalancer --name=nfs-pvc-deploy-svc1 --port=80

worker1, worker2

yum install -y nfs-utils.x86_64

Did MetalLB hand out the external IP..?

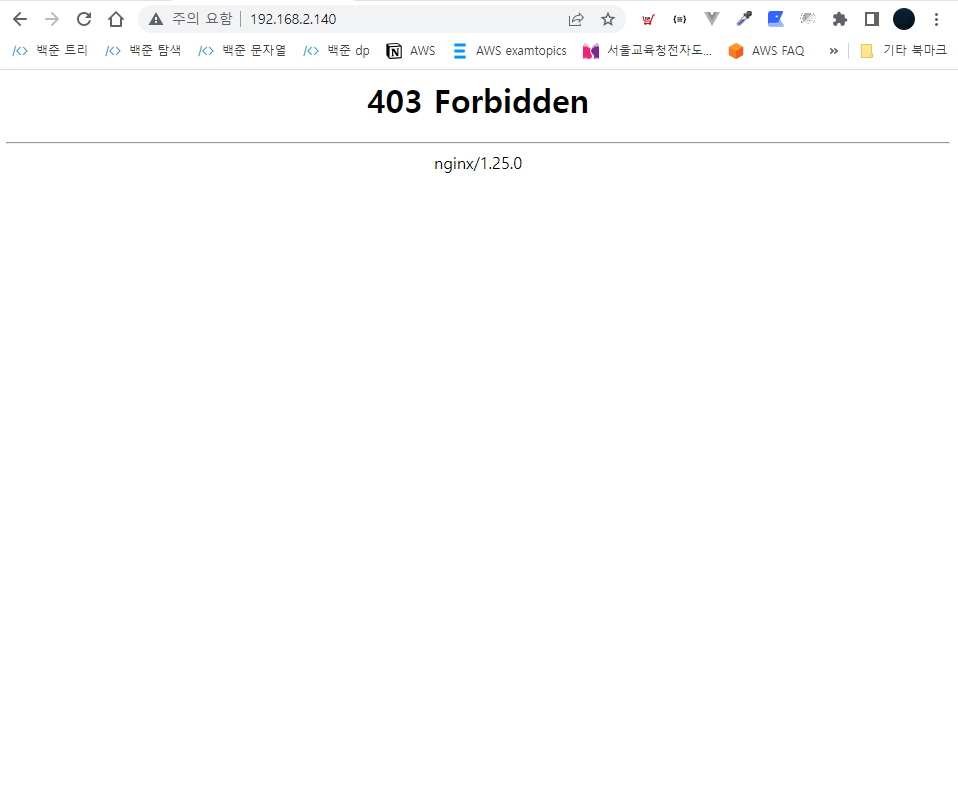

- The mounted path in the container has no data in it yet, so a 403 is the expected response.

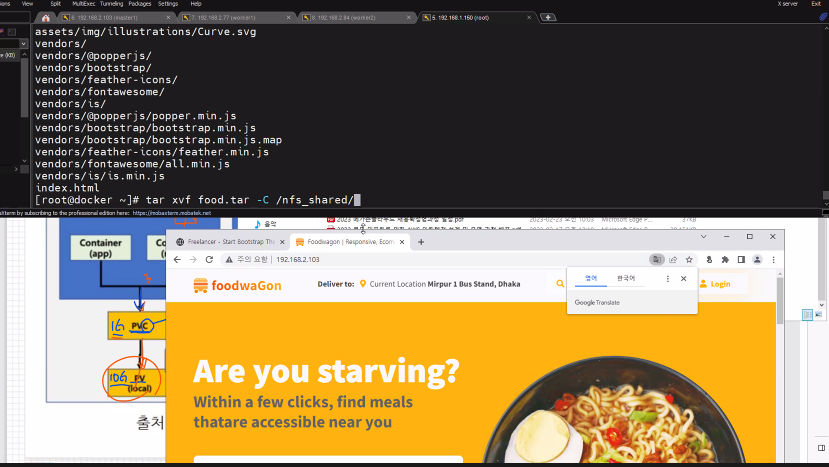

tar xvf food.tar -C /nfs_shared/

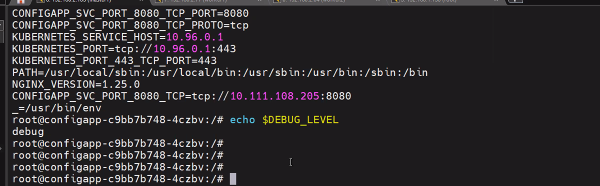

configMap

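A ConfigMap is an API object used to store non-confidential data in key-value pairs. Pods can consume ConfigMaps as environment variables, command-line arguments, or as configuration files in a volume.

A ConfigMap lets you decouple environment-specific configuration from container images, which makes applications easier to port.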

vi configmap-dev.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: config-dev
      namespace: default
    data:
      DB_URL: localhost
      DB_USER: myuser
      DB_PASS: mypass
      DEBUG_INFO: debug

# k apply -f configmap-dev.yaml

# kubectl describe configmaps config-dev

vi deployment-config01.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: configapp
      labels:
        app: configapp
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: configapp
      template:
        metadata:
          labels:
            app: configapp
        spec:
          containers:
            - name: testapp
              image: nginx
              ports:
                - containerPort: 8080
              env:
                - name: DEBUG_LEVEL
                  valueFrom:
                    configMapKeyRef:
                      name: config-dev
                      key: DEBUG_INFO
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: configapp
      name: configapp-svc
      namespace: default
    spec:
      type: NodePort
      ports:
        - nodePort: 30800
          port: 8080
          protocol: TCP
          targetPort: 80
      selector:
        app: configapp

k exec -it configapp-c9bb7b748-w9bqr -- bash

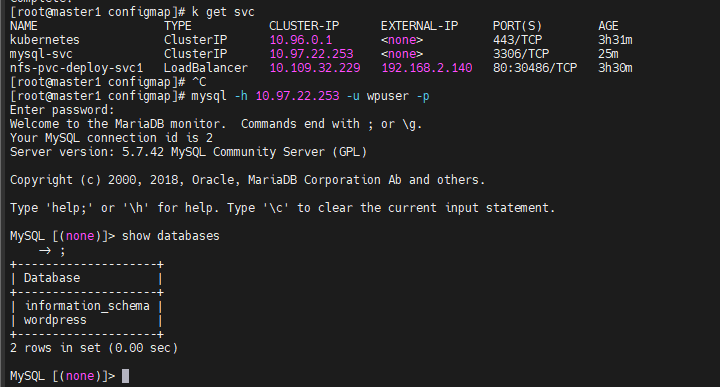
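
The Deployment above pulls a single key into an environment variable. The other consumption path mentioned earlier, configuration files in a volume, can be sketched as follows. The Pod name and the mount path are hypothetical; the only thing assumed from these notes is a ConfigMap named `config-dev`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: configapp-volume            # hypothetical name
spec:
  containers:
    - name: testapp
      image: nginx
      volumeMounts:
        - name: config-vol
          mountPath: /etc/config    # assumed path; each ConfigMap key becomes a file here
          readOnly: true
  volumes:
    - name: config-vol
      configMap:
        name: config-dev            # ConfigMap from configmap-dev.yaml
```

Inside the container, /etc/config/DEBUG_INFO would then hold the value stored under that key.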

vi configmap-wordpress.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: config-wordpress
      namespace: default
    data:
      MYSQL_ROOT_HOST: '%'
      MYSQL_ROOT_PASSWORD: mode1752
      MYSQL_DATABASE: wordpress
      MYSQL_USER: wpuser
      MYSQL_PASSWORD: wppass

# kubectl apply -f configmap-wordpress.yaml

# kubectl describe configmaps config-wordpress

# vi mysql-pod-svc.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: mysql-pod
      labels:
        app: mysql-pod
    spec:
      containers:
        - name: mysql-container
          image: mysql:5.7
          envFrom:
            - configMapRef:
                name: config-wordpress
          ports:
            - containerPort: 3306
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql-svc
    spec:
      type: ClusterIP
      selector:
        app: mysql-pod
      ports:
        - protocol: TCP
          port: 3306
          targetPort: 3306
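
Since a ConfigMap is meant for non-confidential data, the two password keys are candidates for a Secret instead. This is only a sketch of that variation, not what the notes actually ran: the Secret name and the placeholder values are hypothetical, and it assumes the password keys are removed from config-wordpress.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: secret-wordpress             # hypothetical name
type: Opaque
stringData:
  MYSQL_ROOT_PASSWORD: change-me     # placeholder value
  MYSQL_PASSWORD: change-me          # placeholder value
---
apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod
  labels:
    app: mysql-pod
spec:
  containers:
    - name: mysql-container
      image: mysql:5.7
      envFrom:
        - configMapRef:
            name: config-wordpress   # non-secret keys only
        - secretRef:
            name: secret-wordpress   # password keys
      ports:
        - containerPort: 3306
```

A consumer that reads one key at a time would use `secretKeyRef` in place of `configMapKeyRef` for the password.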

# yum install -y mysql

# mysql -h 10.97.22.253 -u wpuser -p

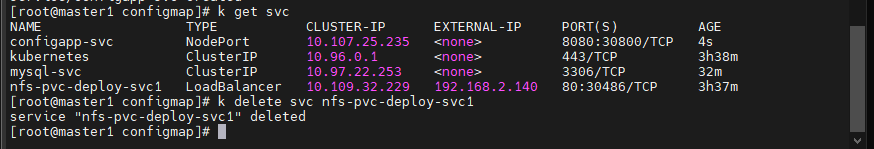

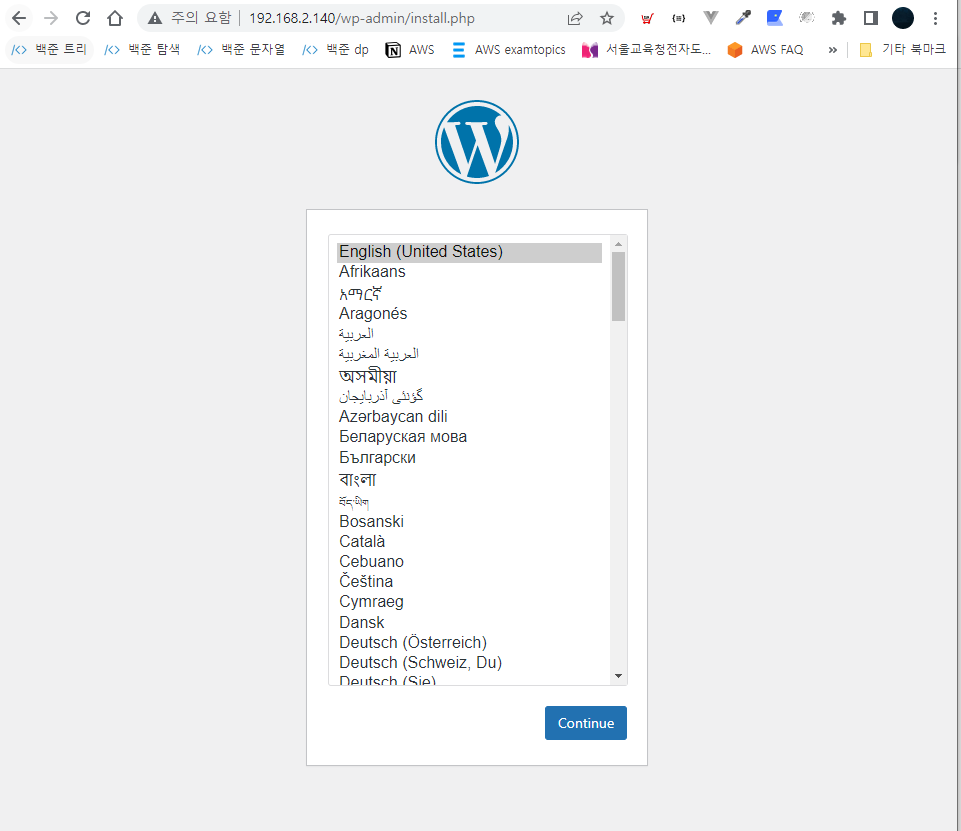

- Delete the LoadBalancer service

# vi wordpress-pod-svc.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: wordpress-pod
      labels:
        app: wordpress-pod
    spec:
      containers:
        - name: wordpress-container
          image: wordpress
          env:
            - name: WORDPRESS_DB_HOST
              value: mysql-svc:3306
            - name: WORDPRESS_DB_USER
              valueFrom:
                configMapKeyRef:
                  name: config-wordpress
                  key: MYSQL_USER
            - name: WORDPRESS_DB_PASSWORD
              valueFrom:
                configMapKeyRef:
                  name: config-wordpress
                  key: MYSQL_PASSWORD
            - name: WORDPRESS_DB_NAME
              valueFrom:
                configMapKeyRef:
                  name: config-wordpress
                  key: MYSQL_DATABASE
          ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: wordpress-svc
    spec:
      type: LoadBalancer
      # externalIPs:
      #   - 192.168.56.103
      selector:
        app: wordpress-pod
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80

k apply -f wordpress-pod-svc.yaml

k get pod,svc

- Use the IP range from the earlier OpenStack lab

- vi metallb-l2config.yaml

k get svc

k delete svc wordpress-svc

cd configmap/

k apply -f wordpress-pod-svc.yaml

- Delete the speaker and controller first, then reinstall

kubectl delete -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

k apply -f metallb-l2config.yaml

k get pod -n metallb-system

- That didn't work, so just restore the original setup.

kubectl delete -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

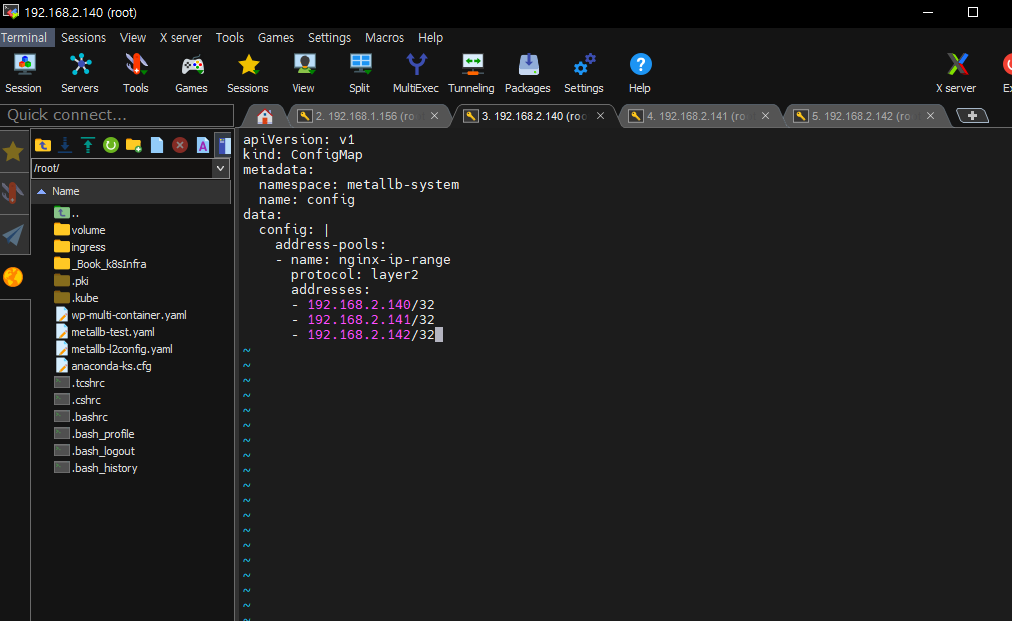

- From here on, create Deployments instead of bare Pods.

k delete all --all

cd configmap/

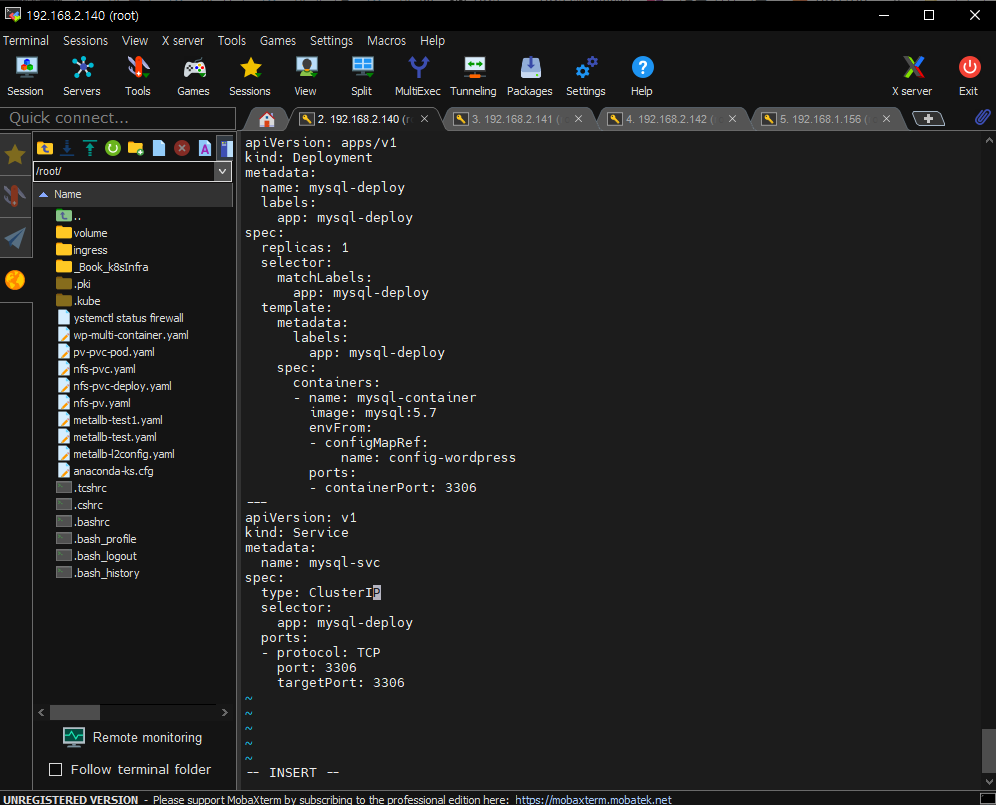

# vi mysql-deploy-svc.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: mysql-deploy
      labels:
        app: mysql-deploy
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mysql-deploy
      template:
        metadata:
          labels:
            app: mysql-deploy
        spec:
          containers:
            - name: mysql-container
              image: mysql:5.7
              envFrom:
                - configMapRef:
                    name: config-wordpress
              ports:
                - containerPort: 3306
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: mysql-svc
    spec:
      type: ClusterIP
      selector:
        app: mysql-deploy
      ports:
        - protocol: TCP
          port: 3306
          targetPort: 3306

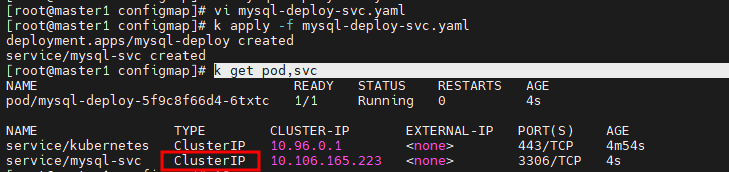

k apply -f mysql-deploy-svc.yaml

k get pod,svc

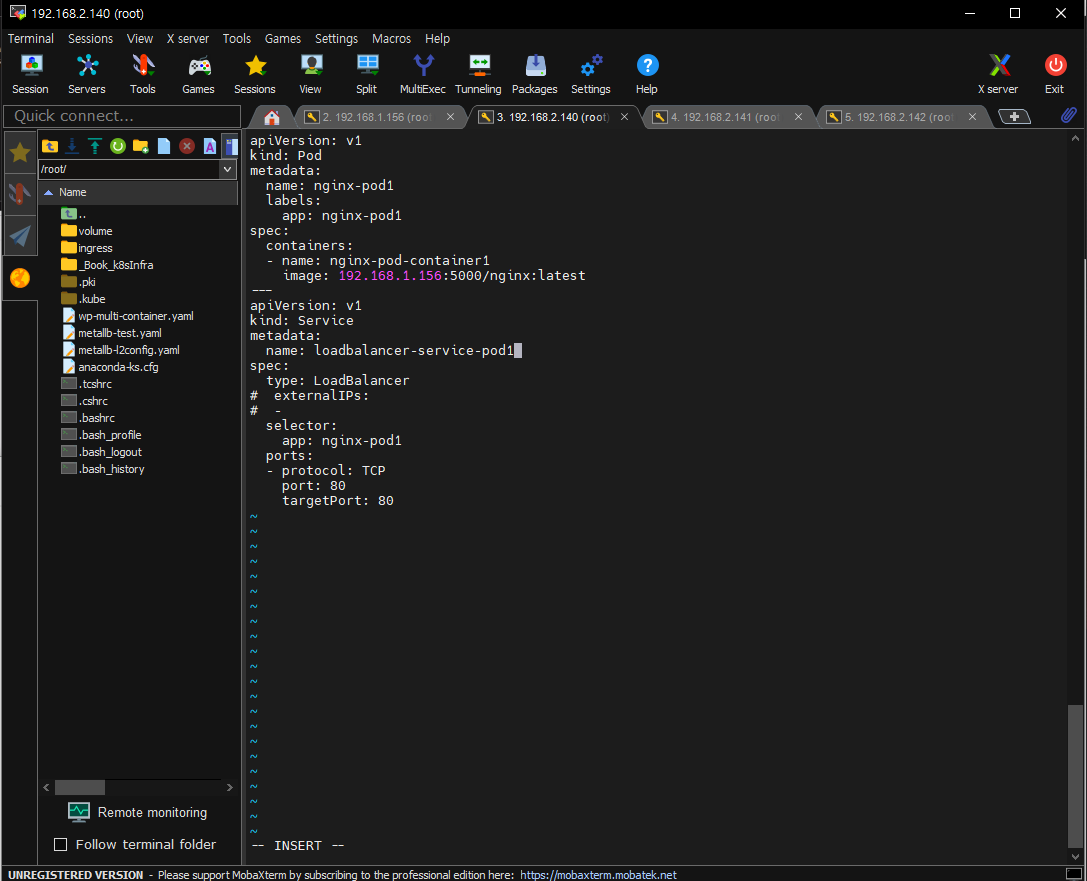

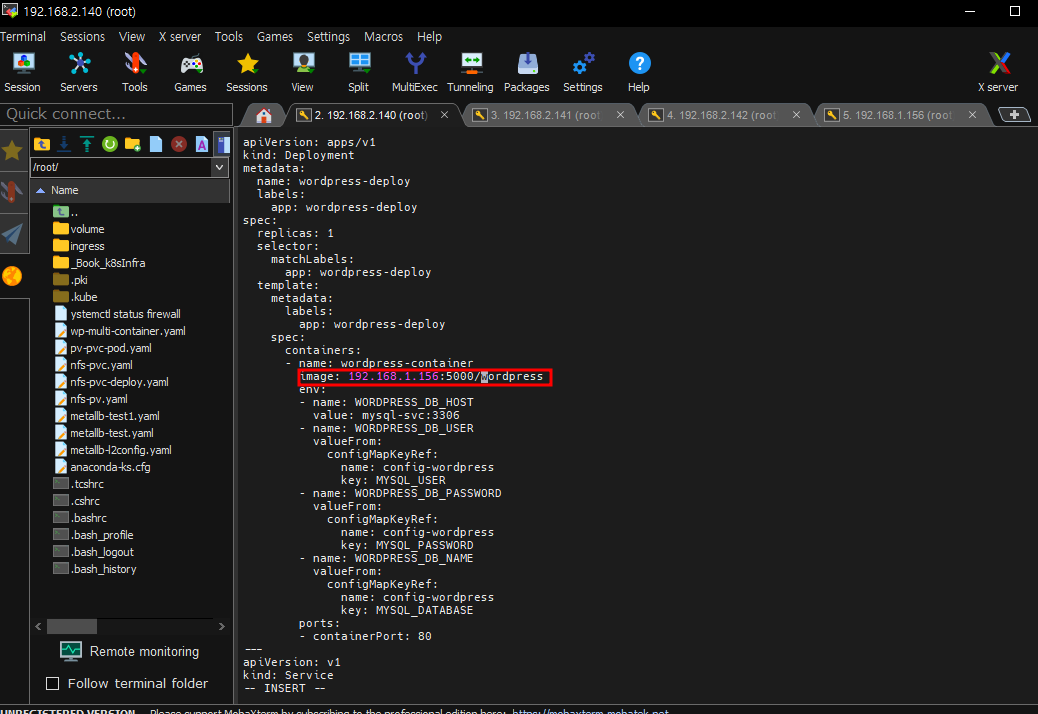

# vi wordpress-deploy-svc.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: wordpress-deploy
      labels:
        app: wordpress-deploy
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: wordpress-deploy
      template:
        metadata:
          labels:
            app: wordpress-deploy
        spec:
          containers:
            - name: wordpress-container
              image: wordpress
              env:
                - name: WORDPRESS_DB_HOST
                  value: mysql-svc:3306
                - name: WORDPRESS_DB_USER
                  valueFrom:
                    configMapKeyRef:
                      name: config-wordpress
                      key: MYSQL_USER
                - name: WORDPRESS_DB_PASSWORD
                  valueFrom:
                    configMapKeyRef:
                      name: config-wordpress
                      key: MYSQL_PASSWORD
                - name: WORDPRESS_DB_NAME
                  valueFrom:
                    configMapKeyRef:
                      name: config-wordpress
                      key: MYSQL_DATABASE
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: wordpress-svc
    spec:
      type: LoadBalancer
      # externalIPs:
      #   - 192.168.1.102
      selector:
        app: wordpress-deploy
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80

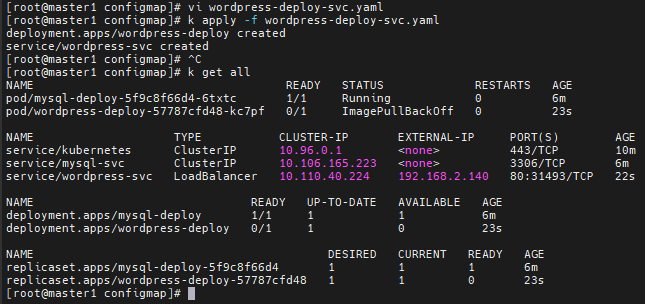

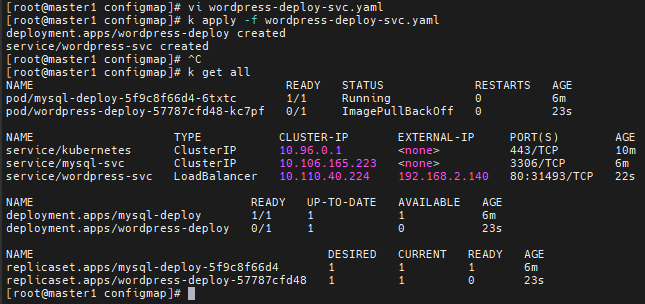

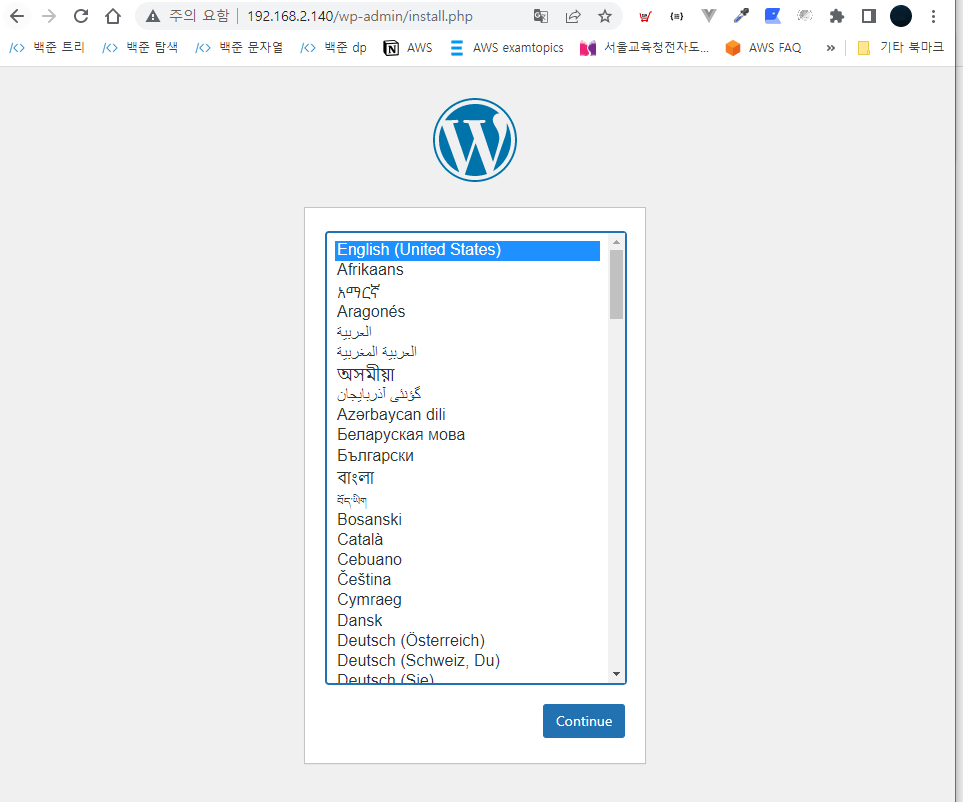

k apply -f wordpress-deploy-svc.yaml

- vi wordpress-deploy-svc.yaml

k apply -f wordpress-deploy-svc.yaml

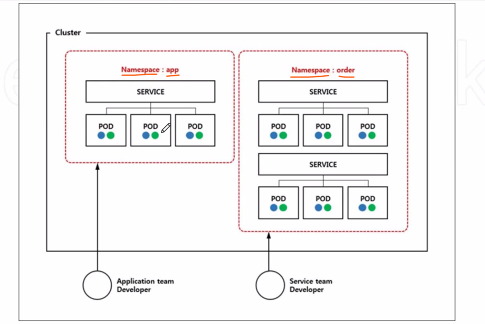

k get pod

namespace

k get ns

k -n metallb-system get svc,pod

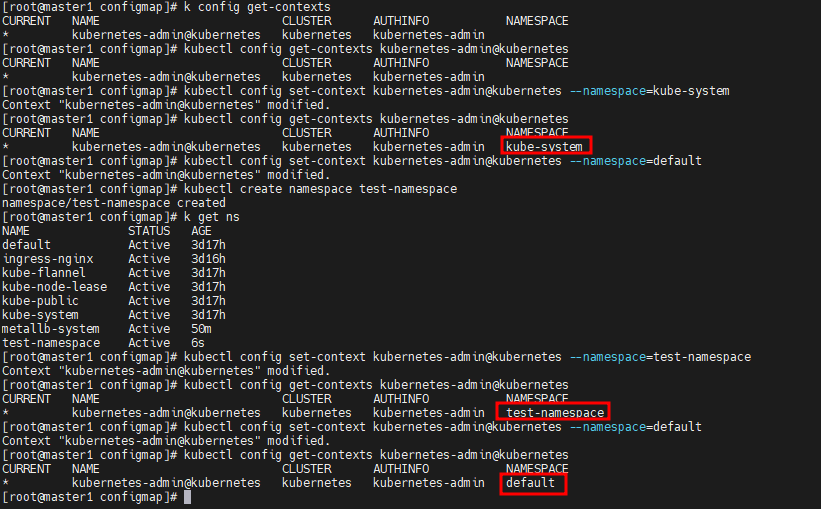

kubectl config get-contexts kubernetes-admin@kubernetes

kubectl config set-context kubernetes-admin@kubernetes --namespace=kube-system

kubectl config get-contexts kubernetes-admin@kubernetes

kubectl config set-context kubernetes-admin@kubernetes --namespace=default

kubectl create namespace test-namespace

kubectl get namespace

kubectl config set-context kubernetes-admin@kubernetes --namespace=test-namespace

kubectl config set-context kubernetes-admin@kubernetes --namespace=default

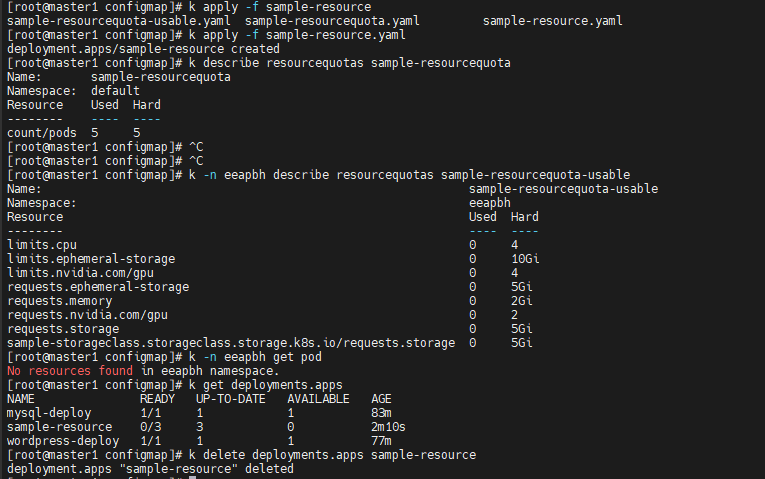

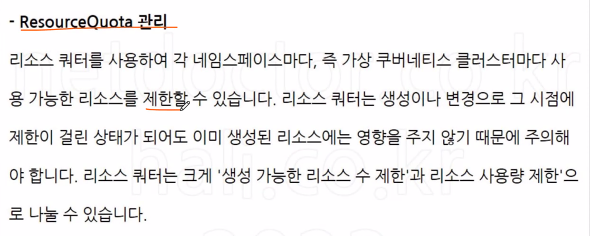

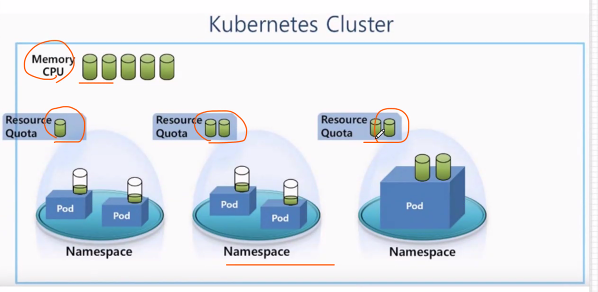

ResourceQuota

Caps what each namespace can consume so that load is spread evenly and the cluster stays stable.

# vi sample-resourcequota.yaml

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: sample-resourcequota
      namespace: default
    spec:
      hard:
        count/pods: 5

k apply -f sample-resourcequota.yaml

kubectl describe resourcequotas sample-resourcequota

k run new-nginx1 -n test-namespace --image=192.168.1.156:5000/nginx:latest

kubectl run new-nginx --image=nginx

k get ns

k create ns eeapbh

vi sample-resourcequota-usable.yaml

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: sample-resourcequota-usable
      namespace: eeapbh
    spec:
      hard:
        requests.memory: 2Gi
        requests.storage: 5Gi
        sample-storageclass.storageclass.storage.k8s.io/requests.storage: 5Gi
        requests.ephemeral-storage: 5Gi
        requests.nvidia.com/gpu: 2
        limits.cpu: 4
        limits.ephemeral-storage: 10Gi
        limits.nvidia.com/gpu: 4

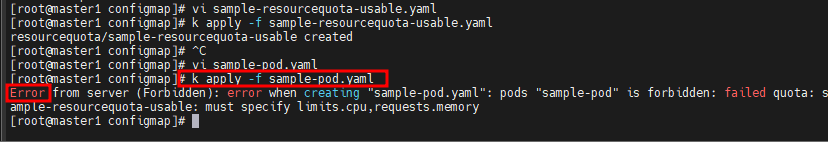

k apply -f sample-resourcequota-usable.yaml

This YAML file defines a Kubernetes ResourceQuota, which limits how many resources a namespace may consume. Walking through the file:

    apiVersion: v1

This line gives the Kubernetes API version in use.

    kind: ResourceQuota

This line says that the resource being defined is a ResourceQuota.

    metadata:
      name: sample-resourcequota-usable
      namespace: eeapbh

This part sets the quota's name and the namespace it applies to. Here, a quota named "sample-resourcequota-usable" is applied to the "eeapbh" namespace.

    spec:
      hard:
        requests.memory: 2Gi
        requests.storage: 5Gi
        sample-storageclass.storageclass.storage.k8s.io/requests.storage: 5Gi
        requests.ephemeral-storage: 5Gi
        requests.nvidia.com/gpu: 2
        limits.cpu: 4
        limits.ephemeral-storage: 10Gi
        limits.nvidia.com/gpu: 4

This part defines the caps. Each entry names a resource type and its limit: "requests.memory" is 2 GiB, "requests.storage" is 5 GiB, "limits.cpu" is 4 cores, "limits.ephemeral-storage" is 10 GiB, and so on. Caps are also set for extended resources such as NVIDIA GPUs.

Once applied, the ResourceQuota caps total resource usage in that namespace, and any request that would push usage past a cap is rejected with an error.

vi sample-pod.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: sample-pod
      namespace: eeapbh
    spec:
      containers:
        - name: nginx-container
          image: 192.168.1.156:5000/nginx:latest

- This fails with an error because memory and cpu are not defined.
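
One hedged way to let a Pod like this through without editing it is a LimitRange that fills in default requests and limits for containers in the namespace. The object name and the numbers below are assumptions chosen to mirror the values used in these notes. Because the quota also tracks ephemeral-storage, defaults for that resource may be needed as well.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: sample-limitrange     # hypothetical name
  namespace: eeapbh
spec:
  limits:
    - type: Container
      defaultRequest:         # applied when a container sets no requests
        cpu: 50m
        memory: 64Mi
      default:                # applied when a container sets no limits
        cpu: 100m
        memory: 128Mi
```

The alternative is to spell out `resources` in every manifest that targets the namespace.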

vi sample-resource.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: sample-resource
      namespace: eeapbh
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: sample-app
      template:
        metadata:
          labels:
            app: sample-app
        spec:
          containers:
            - name: nginx-container
              image: 192.168.1.156:5000/nginx:latest
              resources:
                requests:
                  memory: "64Mi"
                  cpu: "50m"
                limits:
                  memory: "128Mi"
                  cpu: "100m"

# With this quota in place, Pods that do not set requests and limits are not created

k apply -f sample-resource.yaml

k describe resourcequotas sample-resourcequota

- Forgot to set the namespace; delete it and do it again.