ImageNet is a name you inevitably run into when working in computer vision.

The Beginning of Deep Networks

AlexNet

AlexNet architecture

ImageNet Classification with Deep Convolutional Neural Networks (paper)

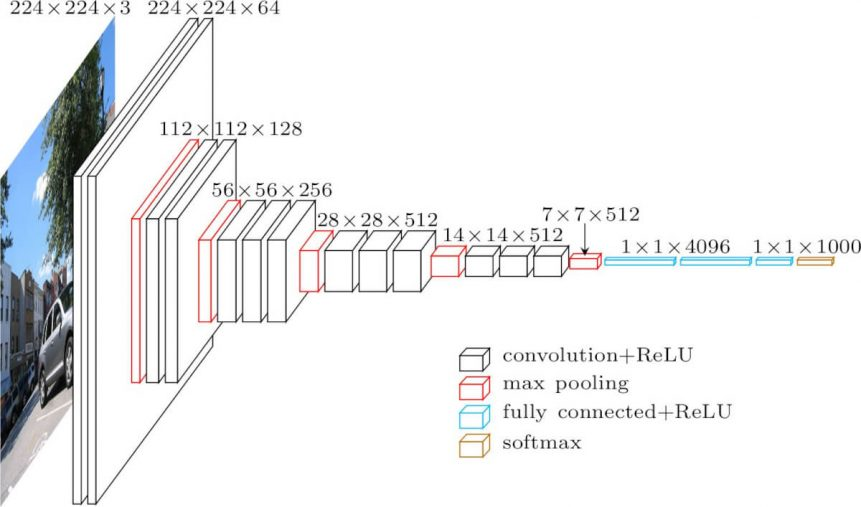

VGG

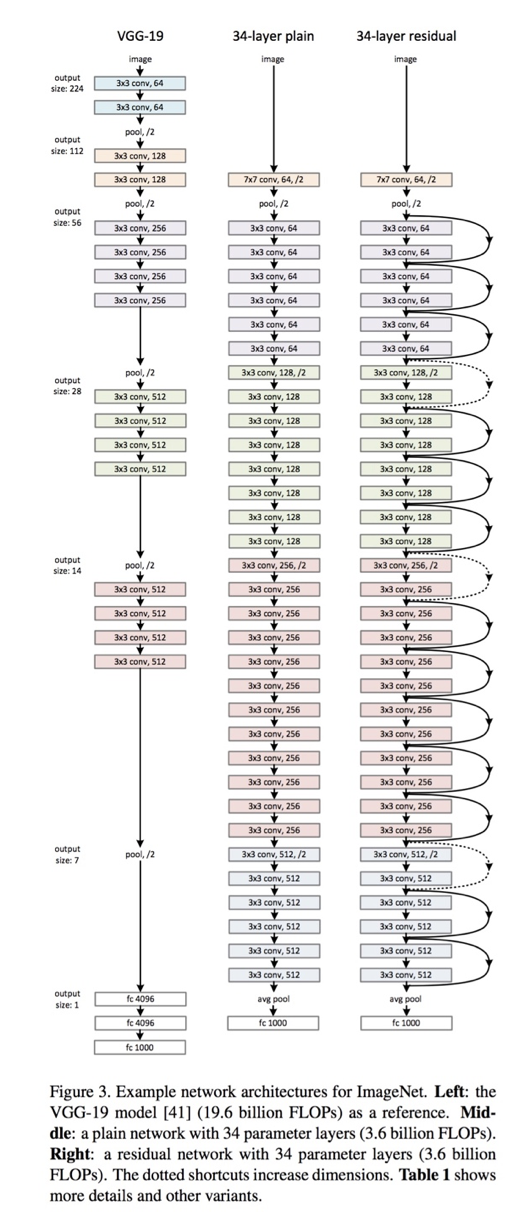

Like AlexNet, VGG was introduced through the ImageNet challenge, where it was the runner-up in 2014. Whereas earlier winning networks had fewer than 10 CNN layers, VGG has 16 or 19 layers, as the numbers in the names VGG16 and VGG19 indicate.

VGGNet

Many CNN models exist besides AlexNet and VGG. The link below gives an overview.

Major CNN models

Vanishing Gradient

Just as the voices of people speaking far away are hard to hear, the gradient used to train a model fades as the model gets deeper. This phenomenon is called the vanishing gradient.
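As a rough illustration of why depth shrinks the gradient, the sketch below assumes a chain of sigmoid layers with unit weights (an assumption made only for this example). The sigmoid derivative is at most 0.25, so by the chain rule the gradient that reaches the first layer is bounded by 0.25 raised to the depth. The helper names `sigmoid_grad` and `gradient_upper_bound` are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    """Derivative of the sigmoid; its maximum value, 0.25, is reached at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


def gradient_upper_bound(depth):
    """Largest gradient magnitude that can survive `depth` sigmoid layers
    when every weight is 1 (toy assumption)."""
    return sigmoid_grad(0.0) ** depth


for depth in (1, 5, 10, 20):
    print(depth, gradient_upper_bound(depth))
```

Even in this best case the bound drops below one millionth after ten layers, which is why the earliest layers of a deep plain network barely update.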

Let's Build a Shortcut

To address the vanishing/exploding gradient problem that arises as layers are stacked deeper, ResNet uses a structure called the skip connection.
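A toy scalar model can show why the shortcut helps. The sketch below assumes every layer is simply `F(x) = w * x` (a deliberate simplification, not how ResNet layers are actually defined) and compares the end-to-end derivative of a plain stack with a stack where each layer adds its input back, `y = x + F(x)`. The function names are hypothetical.

```python
def plain_gradient(w, depth):
    """d(output)/d(input) for `depth` stacked layers y = w * x."""
    grad = 1.0
    for _ in range(depth):
        grad *= w
    return grad


def residual_gradient(w, depth):
    """Same stack, but each layer is y = x + w * x (identity shortcut added)."""
    grad = 1.0
    for _ in range(depth):
        grad *= 1.0 + w  # the "1" comes from the skip connection
    return grad


print(plain_gradient(0.01, 20))     # vanishes toward zero
print(residual_gradient(0.01, 20))  # stays close to 1
```

Because the shortcut contributes a constant 1 to each layer's derivative, the product never collapses to zero even when `w` is tiny.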

About Deep Networks

Model API

Tensorflow

TensorFlow's pre-trained models are implemented with a high-level API called slim; see the TensorFlow GitHub repository for details.

Keras

Keras provides pre-trained models through Keras applications. The keras.applications docs list the supported models, and the implementations can be found in the keras application GitHub repository.

VGG-16

Let's implement the VGG16 model we covered earlier in code.

Excluding max pooling and activation functions such as softmax, VGG16 consists of 16 layers: the CNN layers plus the fully connected layers. The figure makes this clear at a glance.

- Block 1 : ~ first Max pooling

- Block 2 : ~ second Max pooling

- Block 3 : ~ third Max pooling

- Block 4 : ~ fourth Max pooling

- Block 5 : ~ fifth Max pooling

- Block 6 : ~ Fully Connected Layer + softmax
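The block list above can be checked with a few lines of arithmetic. This sketch takes the per-block convolution counts and filter sizes from the VGG-16 code in this post, counts the weight layers, and traces how five 2x2 max-pooling steps shrink the feature map. The helper names are hypothetical.

```python
# (number of 3x3 conv layers, filters) for Blocks 1-5
VGG16_CONV_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
NUM_FC_LAYERS = 3  # two 4096-unit layers plus the softmax classifier (Block 6)


def count_weight_layers(conv_blocks, num_fc):
    """Conv layers plus fully connected layers; pooling is not counted."""
    return sum(num_convs for num_convs, _ in conv_blocks) + num_fc


def output_size(input_size, num_pools):
    """Spatial size after `num_pools` 2x2 max-pooling layers with stride 2."""
    size = input_size
    for _ in range(num_pools):
        size //= 2
    return size


print(count_weight_layers(VGG16_CONV_BLOCKS, NUM_FC_LAYERS))  # 16
print(output_size(32, len(VGG16_CONV_BLOCKS)))                 # 1 (CIFAR-100 input)
print(output_size(224, len(VGG16_CONV_BLOCKS)))                # 7 (ImageNet-sized input)
```

With a 32x32 CIFAR-100 image the feature map is already 1x1 after Block 5, so the input cannot be made any smaller without removing a pooling layer.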

Implemented in code, the structure above looks like this.

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

# Load the CIFAR-100 dataset and scale pixel values to [0, 1].
cifar100 = keras.datasets.cifar100
(x_train, y_train), (x_test, y_test) = cifar100.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

img_input = keras.Input(shape=(32, 32, 3))

Block 1

x = layers.Conv2D(64, (3, 3),
                  activation='relu',
                  padding='same',
                  name='block1_conv1')(img_input)
x = layers.Conv2D(64, (3, 3),
                  activation='relu',
                  padding='same',
                  name='block1_conv2')(x)
x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block1_pool')(x)

Block 2

# Block 2 takes the output of Block 1 (x) and needs its own unique layer names.
x = layers.Conv2D(128, (3, 3),
                  activation='relu',
                  padding='same',
                  name='block2_conv1')(x)
x = layers.Conv2D(128, (3, 3),
                  activation='relu',
                  padding='same',
                  name='block2_conv2')(x)
x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block2_pool')(x)

Block 3

x = layers.Conv2D(
    256, (3, 3), activation='relu', padding='same', name='block3_conv1')(x)
x = layers.Conv2D(
    256, (3, 3), activation='relu', padding='same', name='block3_conv2')(x)
x = layers.Conv2D(
    256, (3, 3), activation='relu', padding='same', name='block3_conv3')(x)
x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block3_pool')(x)

Block 4

x = layers.Conv2D(
    512, (3, 3), activation='relu', padding='same', name='block4_conv1')(x)
x = layers.Conv2D(
    512, (3, 3), activation='relu', padding='same', name='block4_conv2')(x)
x = layers.Conv2D(
    512, (3, 3), activation='relu', padding='same', name='block4_conv3')(x)
x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block4_pool')(x)

Block 5

x = layers.Conv2D(
    512, (3, 3), activation='relu', padding='same', name='block5_conv1')(x)
x = layers.Conv2D(
    512, (3, 3), activation='relu', padding='same', name='block5_conv2')(x)
x = layers.Conv2D(
    512, (3, 3), activation='relu', padding='same', name='block5_conv3')(x)
x = layers.MaxPooling2D((2, 2), strides=(2, 2), name='block5_pool')(x)

Block 6

x = layers.Flatten(name='flatten')(x)
x = layers.Dense(4096, activation='relu', name='fc1')(x)
x = layers.Dense(4096, activation='relu', name='fc2')(x)
classes = 100  # CIFAR-100 has 100 classes
x = layers.Dense(classes, activation='softmax', name='predictions')(x)

Building and Training the Model

model = keras.Model(name="VGG-16", inputs=img_input, outputs=x)
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
model.fit(x_train, y_train, epochs=1)

ResNet50

Next, let's implement ResNet, which adds skip connections.

ResNet50 is built from two blocks that are used repeatedly, conv_block and identity_block. With them, a complex structure of 50 layers can be expressed concisely.
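To see where the "50" comes from, the following sketch counts the weight layers using the stage layout of the resnet50 function in this post: stages 2 to 5 contain 3, 4, 6, and 3 blocks, and each conv_block or identity_block has three convolutions on its main path. It assumes the usual counting convention in which the 1x1 convolution on the shortcut branch is not counted. The names are hypothetical.

```python
# Number of blocks (one conv_block followed by identity_blocks) in each stage
BLOCKS_PER_STAGE = {2: 3, 3: 4, 4: 6, 5: 3}
CONVS_PER_BLOCK = 3  # 1x1 -> 3x3 -> 1x1 on the main path


def count_resnet_layers(blocks_per_stage, convs_per_block=CONVS_PER_BLOCK):
    """Stem conv + main-path convs of every block + final dense layer."""
    stem_conv = 1
    final_dense = 1
    total_blocks = sum(blocks_per_stage.values())
    return stem_conv + convs_per_block * total_blocks + final_dense


print(count_resnet_layers(BLOCKS_PER_STAGE))  # 50
```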

from tensorflow.keras import backend
from tensorflow.keras import regularizers
from tensorflow.keras import initializers
from tensorflow.keras import models

# Declare the L2 regularizer that is used repeatedly inside the blocks.
def _gen_l2_regularizer(use_l2_regularizer=True, l2_weight_decay=1e-4):
    return regularizers.l2(l2_weight_decay) if use_l2_regularizer else None

[conv_block]

def conv_block(input_tensor,
               kernel_size,
               filters,
               stage,
               block,
               strides=(2, 2),
               use_l2_regularizer=True,
               batch_norm_decay=0.9,
               batch_norm_epsilon=1e-5):
    filters1, filters2, filters3 = filters
    if backend.image_data_format() == 'channels_last':
        bn_axis = 3
    else:
        bn_axis = 1
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # Main path: 1x1 conv -> kernel_size conv (strided) -> 1x1 conv
    x = layers.Conv2D(
        filters1, (1, 1),
        use_bias=False,
        kernel_initializer='he_normal',
        kernel_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        name=conv_name_base + '2a')(input_tensor)
    x = layers.BatchNormalization(
        axis=bn_axis,
        momentum=batch_norm_decay,
        epsilon=batch_norm_epsilon,
        name=bn_name_base + '2a')(x)
    x = layers.Activation('relu')(x)

    x = layers.Conv2D(
        filters2,
        kernel_size,
        strides=strides,
        padding='same',
        use_bias=False,
        kernel_initializer='he_normal',
        kernel_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        name=conv_name_base + '2b')(x)
    x = layers.BatchNormalization(
        axis=bn_axis,
        momentum=batch_norm_decay,
        epsilon=batch_norm_epsilon,
        name=bn_name_base + '2b')(x)
    x = layers.Activation('relu')(x)

    x = layers.Conv2D(
        filters3, (1, 1),
        use_bias=False,
        kernel_initializer='he_normal',
        kernel_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        name=conv_name_base + '2c')(x)
    x = layers.BatchNormalization(
        axis=bn_axis,
        momentum=batch_norm_decay,
        epsilon=batch_norm_epsilon,
        name=bn_name_base + '2c')(x)

    # Shortcut path: a strided 1x1 conv so the shapes match before the addition
    shortcut = layers.Conv2D(
        filters3, (1, 1),
        strides=strides,
        use_bias=False,
        kernel_initializer='he_normal',
        kernel_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        name=conv_name_base + '1')(input_tensor)
    shortcut = layers.BatchNormalization(
        axis=bn_axis,
        momentum=batch_norm_decay,
        epsilon=batch_norm_epsilon,
        name=bn_name_base + '1')(shortcut)

    # Skip connection: add the shortcut to the main path
    x = layers.add([x, shortcut])
    x = layers.Activation('relu')(x)
    return x

[identity_block]

def identity_block(input_tensor,
                   kernel_size,
                   filters,
                   stage,
                   block,
                   use_l2_regularizer=True,
                   batch_norm_decay=0.9,
                   batch_norm_epsilon=1e-5):
    filters1, filters2, filters3 = filters
    if backend.image_data_format() == 'channels_last':
        bn_axis = 3
    else:
        bn_axis = 1
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # Main path: 1x1 conv -> kernel_size conv -> 1x1 conv (no striding)
    x = layers.Conv2D(
        filters1, (1, 1),
        use_bias=False,
        kernel_initializer='he_normal',
        kernel_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        name=conv_name_base + '2a')(input_tensor)
    x = layers.BatchNormalization(
        axis=bn_axis,
        momentum=batch_norm_decay,
        epsilon=batch_norm_epsilon,
        name=bn_name_base + '2a')(x)
    x = layers.Activation('relu')(x)

    x = layers.Conv2D(
        filters2,
        kernel_size,
        padding='same',
        use_bias=False,
        kernel_initializer='he_normal',
        kernel_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        name=conv_name_base + '2b')(x)
    x = layers.BatchNormalization(
        axis=bn_axis,
        momentum=batch_norm_decay,
        epsilon=batch_norm_epsilon,
        name=bn_name_base + '2b')(x)
    x = layers.Activation('relu')(x)

    x = layers.Conv2D(
        filters3, (1, 1),
        use_bias=False,
        kernel_initializer='he_normal',
        kernel_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        name=conv_name_base + '2c')(x)
    x = layers.BatchNormalization(
        axis=bn_axis,
        momentum=batch_norm_decay,
        epsilon=batch_norm_epsilon,
        name=bn_name_base + '2c')(x)

    # Skip connection: the input is added back unchanged
    x = layers.add([x, input_tensor])
    x = layers.Activation('relu')(x)
    return x

[resnet50 function]

def resnet50(num_classes,
             batch_size=None,
             use_l2_regularizer=True,
             rescale_inputs=False,
             batch_norm_decay=0.9,
             batch_norm_epsilon=1e-5):
    # input_shape for CIFAR-100
    input_shape = (32, 32, 3)
    img_input = layers.Input(shape=input_shape, batch_size=batch_size)
    if rescale_inputs:
        # Hub image modules expect inputs in the range [0, 1]. This rescales these
        # inputs to the range expected by the trained model.
        # Per-channel ImageNet RGB means; assumed to match
        # imagenet_preprocessing.CHANNEL_MEANS in the TensorFlow Model Garden.
        channel_means = [123.68, 116.78, 103.94]
        x = layers.Lambda(
            lambda x: x * 255.0 - tf.constant(
                channel_means,
                shape=[1, 1, 3],
                dtype=x.dtype),
            name='rescale')(img_input)
    else:
        x = img_input

    if backend.image_data_format() == 'channels_first':
        x = layers.Permute((3, 1, 2))(x)
        bn_axis = 1
    else:  # channels_last
        bn_axis = 3

    block_config = dict(
        use_l2_regularizer=use_l2_regularizer,
        batch_norm_decay=batch_norm_decay,
        batch_norm_epsilon=batch_norm_epsilon)

    # Stem: 7x7 conv with stride 2, followed by 3x3 max pooling
    x = layers.ZeroPadding2D(padding=(3, 3), name='conv1_pad')(x)
    x = layers.Conv2D(
        64, (7, 7),
        strides=(2, 2),
        padding='valid',
        use_bias=False,
        kernel_initializer='he_normal',
        kernel_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        name='conv1')(x)
    x = layers.BatchNormalization(
        axis=bn_axis,
        momentum=batch_norm_decay,
        epsilon=batch_norm_epsilon,
        name='bn_conv1')(x)
    x = layers.Activation('relu')(x)
    x = layers.MaxPooling2D((3, 3), strides=(2, 2), padding='same')(x)

    # Stage 2
    x = conv_block(
        x, 3, [64, 64, 256], stage=2, block='a', strides=(1, 1), **block_config)
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='b', **block_config)
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='c', **block_config)

    # Stage 3
    x = conv_block(x, 3, [128, 128, 512], stage=3, block='a', **block_config)
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='b', **block_config)
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='c', **block_config)
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='d', **block_config)

    # Stage 4
    x = conv_block(x, 3, [256, 256, 1024], stage=4, block='a', **block_config)
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='b', **block_config)
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='c', **block_config)
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='d', **block_config)
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='e', **block_config)
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='f', **block_config)

    # Stage 5
    x = conv_block(x, 3, [512, 512, 2048], stage=5, block='a', **block_config)
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='b', **block_config)
    x = identity_block(x, 3, [512, 512, 2048], stage=5, block='c', **block_config)

    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dense(
        num_classes,
        kernel_initializer=initializers.RandomNormal(stddev=0.01),
        kernel_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        bias_regularizer=_gen_l2_regularizer(use_l2_regularizer),
        name='fc1000')(x)

    # A softmax that is followed by the model loss cannot be done
    # in float16 due to numeric issues. So we pass dtype=float32.
    x = layers.Activation('softmax', dtype='float32')(x)

    # Create model.
    return models.Model(img_input, x, name='resnet50')

Now that the resnet50 function is defined, it is time to build the model and train it.

model = resnet50(num_classes=100)
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
model.fit(x_train, y_train, epochs=1)
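As a sanity check on the CIFAR-100 input size, the sketch below traces the spatial size of the feature map through the resnet50 function above, using the standard output-size formulas for 'valid' and 'same' padding. The strides follow the code in this post (stem conv stride 2, max pooling stride 2, stage 2 with stride 1, stages 3 to 5 with stride 2). The helper names are hypothetical.

```python
import math


def padded_conv_size(size, kernel, stride, pad):
    """Output size of a 'valid' convolution applied after zero-padding
    `pad` pixels on each side."""
    return (size + 2 * pad - kernel) // stride + 1


def same_padding_size(size, stride):
    """Output size of a 'same'-padded convolution or pooling layer."""
    return math.ceil(size / stride)


def resnet50_spatial_sizes(input_size):
    """Feature-map width/height after the stem and after each stage."""
    sizes = {}
    size = padded_conv_size(input_size, kernel=7, stride=2, pad=3)
    sizes['conv1'] = size
    size = same_padding_size(size, stride=2)
    sizes['max_pool'] = size
    for stage, stride in ((2, 1), (3, 2), (4, 2), (5, 2)):
        size = same_padding_size(size, stride)
        sizes['stage%d' % stage] = size
    return sizes


print(resnet50_spatial_sizes(32))
# {'conv1': 16, 'max_pool': 8, 'stage2': 8, 'stage3': 4, 'stage4': 2, 'stage5': 1}
```

A 32x32 image is reduced to a single 1x1 position by stage 5, so the global average pooling at the end has nothing left to average over spatially; with a 224x224 input the same trace ends at 7x7.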