Part1 - ch1 AlexNet 구현

1) Library

import os

import time

import copy

import numpy as np

import matplotlib.pyplot as plt

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import datasets, models, transforms2) Hyperparameter 설정

batch_size = 64

num_workers = 4

ddir = 'hymenoptera_data'3) Data 준비

data_transformers = {

'train': transforms.Compose(

[

transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.49, 0.449, 0.411], [0.231, 0.221, 0.230])

]),

'val': transforms.Compose(

[

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.49, 0.449, 0.411], [0.231, 0.221, 0.230]),

]

),

}

# Image Folder 만들기. train과 val에 대해서.

img_data = {

# A generic data loader where the images are arranged in this way by default:

k: datasets.ImageFolder(os.path.join(ddir, k), data_transformers[k])

for k in ['train', 'val']

}img_data

{

'train': Dataset ImageFolder

Number of datapoints: 244

Root location: hymenoptera_data\train

StandardTransform

Transform: Compose(

RandomResizedCrop(size=(224, 224), scale=(0.08, 1.0), ratio=(0.75, 1.3333), interpolation=bilinear, antialias=warn)

RandomHorizontalFlip(p=0.5)

ToTensor()

Normalize(mean=[0.49, 0.449, 0.411], std=[0.231, 0.221, 0.23])

),

'val': Dataset ImageFolder

Number of datapoints: 153

Root location: hymenoptera_data\val

StandardTransform

Transform: Compose(

Resize(size=256, interpolation=bilinear, max_size=None, antialias=warn)

CenterCrop(size=(224, 224))

ToTensor()

Normalize(mean=[0.49, 0.449, 0.411], std=[0.231, 0.221, 0.23])

)

}# train, val data 각각에 batch_size로 묶고 랜덤으로 섞기

dloaders = {

k: torch.utils.data.DataLoader(

img_data[k], batch_size=batch_size, shuffle=True, num_workers=num_workers

) for k in ['train','val']

}

print(dloaders)

print(dloaders['train'])dset_sizes = {x:len(img_data[x]) for x in ['train','val']}

classes = img_data['train'].classes #['ants', 'bees']

print(dset_sizes, classes)CPU or GPU 설정

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")4) AlexNet Architecture

class AlexNet(nn.Module):

def __init__(self, num_classes):

super(AlexNet, self).__init__()

# 1. Extract Features

self.features = nn.Sequential(

nn.Conv2d(in_channels=3, out_channels=96, kernel_size=11, stride=4),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2),

nn.Conv2d(96,256, kernel_size=5, padding=2),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2),

nn.Conv2d(256, 384, kernel_size=3, padding=1),

nn.ReLU(),

nn.Conv2d(384, 384, kernel_size=3, padding=1),

nn.ReLU(),

nn.Conv2d(384, 256, kernel_size=3, padding=1),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2)

)

# 2. classify Layer

self.classifier = nn.Sequential(

# FC 4096

nn.Linear(256*5*5, 4096),

nn.ReLU(),

nn.Linear(4096, 4096),

nn.ReLU(),

nn.Linear(4096, num_classes)

)

def forward(self, input):

hidden = self.features(input)

# flatten

flatten = hidden.view(hidden.size(0),-1)

output = self.classifier(flatten)

return output

model = AlexNet(2) # num_classes=2

print(model)5) Train function

def train(model, loss_func, optimizer, epochs=10):

start = time.time()

accuracy = 0.0

for epoch in range(epochs):

print(f'Epoch number {epoch}/{epochs-1}')

print('=' * 20)

# dset: train or val

for dset in ['train','val']:

if dset == 'train':

model.train()

else:

model.eval()

loss = 0.0

correct = 0

# dloader에 batch 만큼 각각 extract

for imgs, labels in dloaders[dset]:

# batch수 만큼의 img

imgs = imgs.to(device)

# batch수 만큼의 label

labels = labels.to(device)

# 학습 시 gradient 초기화

optimizer.zero_grad()

# enable or disable grads based on its argument mode.

with torch.set_grad_enabled(dset == 'train'):

# 만든 모델의 img batch파일 넣기

output = model(imgs)

# output에서 최댓값의 index 뽑기 -> preds

_, preds = torch.max(output, 1)

# output class와 labels의 차는 loss_curr

loss_curr = loss_func(output, labels)

# 학습하는 경우

if dset == 'train':

# 역전파

loss_curr.backward()

# update

optimizer.step()

# 64 * loss 값 = 총 loss 값

loss += loss_curr.item() * imgs.size(0)

# prediction과 label수가 같은지 합계

correct += torch.sum(preds == labels.data)

# dset_sizes = {'train': 244, 'val': 153}

loss_epoch = loss / dset_sizes[dset] # epoch마다 loss 값

# 맞은 갯수 / 데이터 크기 -> 정확도

accuracy_epoch = correct.double() / dset_sizes[dset]

# 각각 train과 val에 대해 loss_Epoch와 accuracy_epoch 출력

print(f'{dset} loss in this epoch: {loss_epoch}, accuracy in this epoch: {accuracy_epoch}\n')

# val의 최대 accuarcy를 선정하기 위함

if dset == 'val' and accuracy_epoch > accuracy:

accuracy = accuracy_epoch

time_delta = time.time() - start

print(f'Training finished in {time_delta // 60}mins {time_delta % 60}secs')

print(f'Best validation set accuracy: {accuracy}')

return model

6) Optimizer & Loss

loss_func = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=0.0001)7) Train

pretrained_model = train(model, loss_func, optimizer, epochs=10)8) Test

def test(model=pretrained_model):

correct_pred = {classname: 0 for classname in classes} #['ants', 'bees']

total_pred = {classname:0 for classname in classes}

with torch.no_grad():

for images, labels in dloaders['val']:

images = images.to(device)

labels = labels.to(device)

outputs = model(images)

_, preds = torch.max(outputs, 1)

# [labels], [preds] -> [[labels원소, preds원소]] 로 zip하기

for label, pred in zip(labels, preds):

if label == pred:

correct_pred[classes[label]] +=1

total_pred[classes[label]] += 1

for classname, correct_count in correct_pred.items():

accuracy = 100 * float(correct_count) / total_pred[classname]

print(f'Accuracy for class: {classname:5s} is {accuracy:.1f}%')

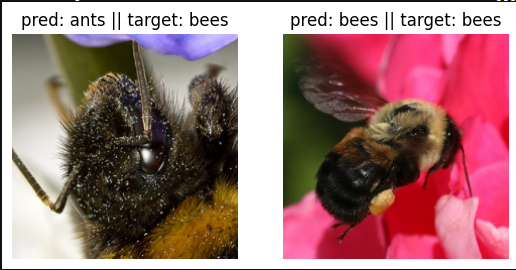

test(pretrained_model)9) Visualize

def imageshow(img, text=None):

# image는 (채널, 높이, 너비) = (C,H,W) -> (axis1, axis2, axis0) = (높이, 너비, 채널)

print(img) #

img = img.numpy().transpose((1,2,0))

avg = np.array([0.49, 0.449, 0.411])

std = np.array([0.231, 0.221, 0.230])

img = std * img + avg

# 0~1값으로 img array 자르기

img = np.clip(img, 0, 1)

# plt.imshow()는 이미지 데이터를 시각화하는 데 사용

plt.imshow(img)

if text is not None:

plt.title(text)def visualize_predctions(pretrained_model, max_num_imgs=4):

# Sets the seed for generating random numbers.

torch.manual_seed(10)

# train했냐?

if_model_training = pretrained_model.training

pretrained_model.eval()

imgs_counter = 0

# Create a figure

fig = plt.figure()

with torch.no_grad():

for images, labels in dloaders['val']:

images = images.to(device)

labels = labels.to(device)

outputs = model(images)

_, preds = torch.max(outputs, 1)

# batch_size=25, print(images.size()[0])

for j in range(images.size()[0]):

imgs_counter += 1

# 6//2 = 3행, 2열, 0,1,2,3,4,5

ax = plt.subplot(max_num_imgs//2, 2, imgs_counter)

# num_rows: 전체 그림을 row으로 나눌 때 행 수

# num_cols: 전체 그림을 column로 나눌 때 열 수

# plot_num: 현재 서브플롯의 위치를 지정합니다. 이 값은 왼쪽에서 오른쪽으로, 위에서 아래로 증가하며 1부터 시작합니다.

ax.axis('off') # suplot의 축을 끄기 기능

# classes[preds[j]]], labels[j]

ax.set_title(f'pred: {classes[preds[j]]} || target: {classes[labels[j]]}')

imageshow(images.cpu().data[j])

if imgs_counter == max_num_imgs:

pretrained_model.train(mode=if_model_training)

return

pretrained_model.train(mode=if_model_training)visualize_predctions(pretrained_model, 2)