Regression

- Models: LinearRegression, Ridge, Lasso, etc.

- Evaluation metrics:

- Cost function: MSE (Mean Squared Error)
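As a rough illustration of the MSE cost function named above, the sketch below computes the mean of the squared differences between targets and predictions, i.e. MSE = (1/n) Σ (y_i − ŷ_i)². The helper name `mean_squared_error` and the sample values are made up for this example; scikit-learn provides a function of the same name in `sklearn.metrics`, but this version uses plain Python so it runs without dependencies.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Illustrative values only (not taken from any dataset in these notes).
y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]

mse = mean_squared_error(y_true, y_pred)
print(mse)  # (0.25 + 0.25 + 0.0 + 1.0) / 4 = 0.375
```

Because the errors are squared, a single large miss (the last pair here) contributes more to the total than several small ones.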

Classification

- Models: LogisticRegression, SGDClassifier, etc.

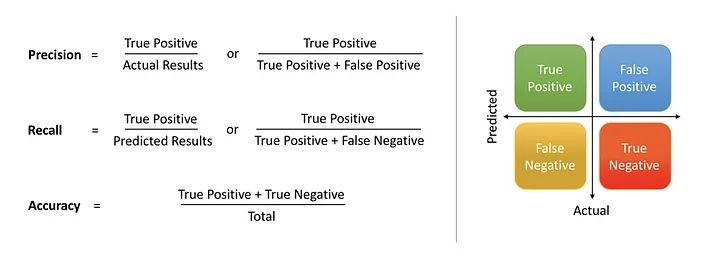

- Evaluation metrics: Accuracy, Precision, Recall, F1 score

https://medium.com/@shrutisaxena0617/precision-vs-recall-386cf9f89488

- Cost function: log_loss, hinge
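To make the four evaluation metrics above concrete, here is a minimal sketch that derives them from confusion-matrix counts for a binary problem. The function names, the `positive` parameter, and the sample labels are assumptions made for illustration; in practice `sklearn.metrics` offers `accuracy_score`, `precision_score`, `recall_score`, and `f1_score`.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 as a dict."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / len(y_true)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Illustrative labels only: 3 TP, 1 FP, 1 FN, 3 TN.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision asks "of the samples predicted positive, how many were right?", recall asks "of the actual positives, how many were found?", and F1 is their harmonic mean.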

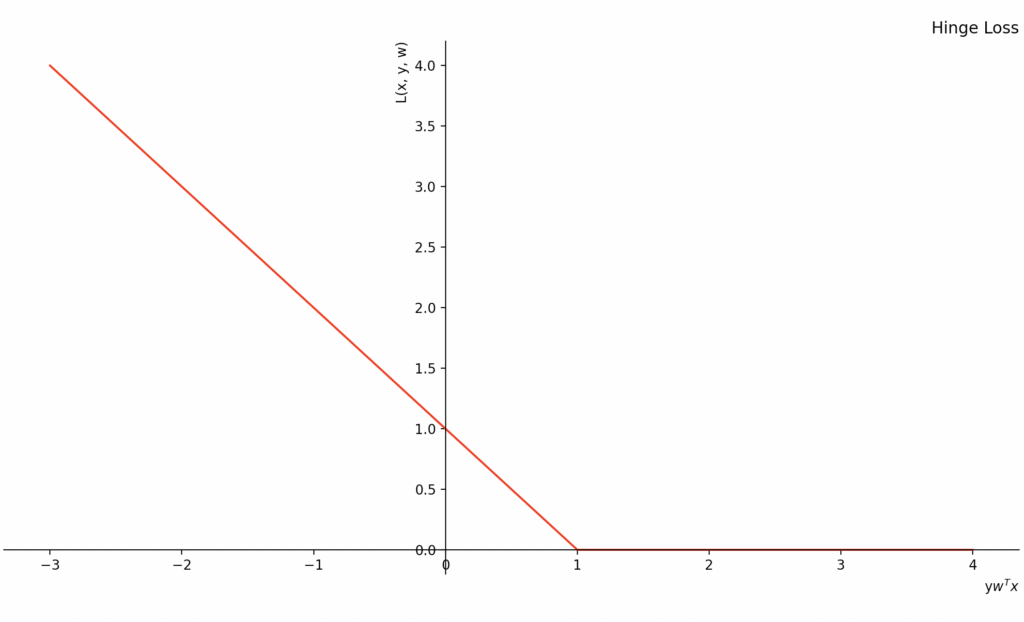

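- hinge

- For a label y in {-1, +1} and a model score f(x), the hinge loss is max(0, 1 - y·f(x)). The loss is 0 once the margin y·f(x) reaches 1 or more, and it grows linearly as the margin falls below 1.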

https://www.baeldung.com/cs/hinge-loss-vs-logistic-loss
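The two classification losses mentioned in these notes can be compared side by side with a small sketch. It assumes labels in {-1, +1} and a raw decision score, and writes log loss in its margin form log(1 + exp(-y·score)), which equals the usual negative log-likelihood when the predicted probability is sigmoid(score). The function names are chosen for this example and are not a library API.

```python
import math


def hinge_loss(y, score):
    """Hinge loss for a label y in {-1, +1} and a raw decision score."""
    return max(0.0, 1.0 - y * score)


def logistic_loss(y, score):
    """Log loss in margin form: log(1 + exp(-y * score))."""
    return math.log1p(math.exp(-y * score))


# Compare both losses for a positive example (y = +1) at several scores.
for score in (-2.0, 0.0, 0.5, 1.0, 3.0):
    print(f"score={score:+.1f}  "
          f"hinge={hinge_loss(1, score):.4f}  "
          f"log={logistic_loss(1, score):.4f}")
```

Hinge loss is exactly zero for any margin of 1 or more, while log loss keeps shrinking toward zero without ever reaching it, so confidently correct samples still contribute a small amount to the log loss.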