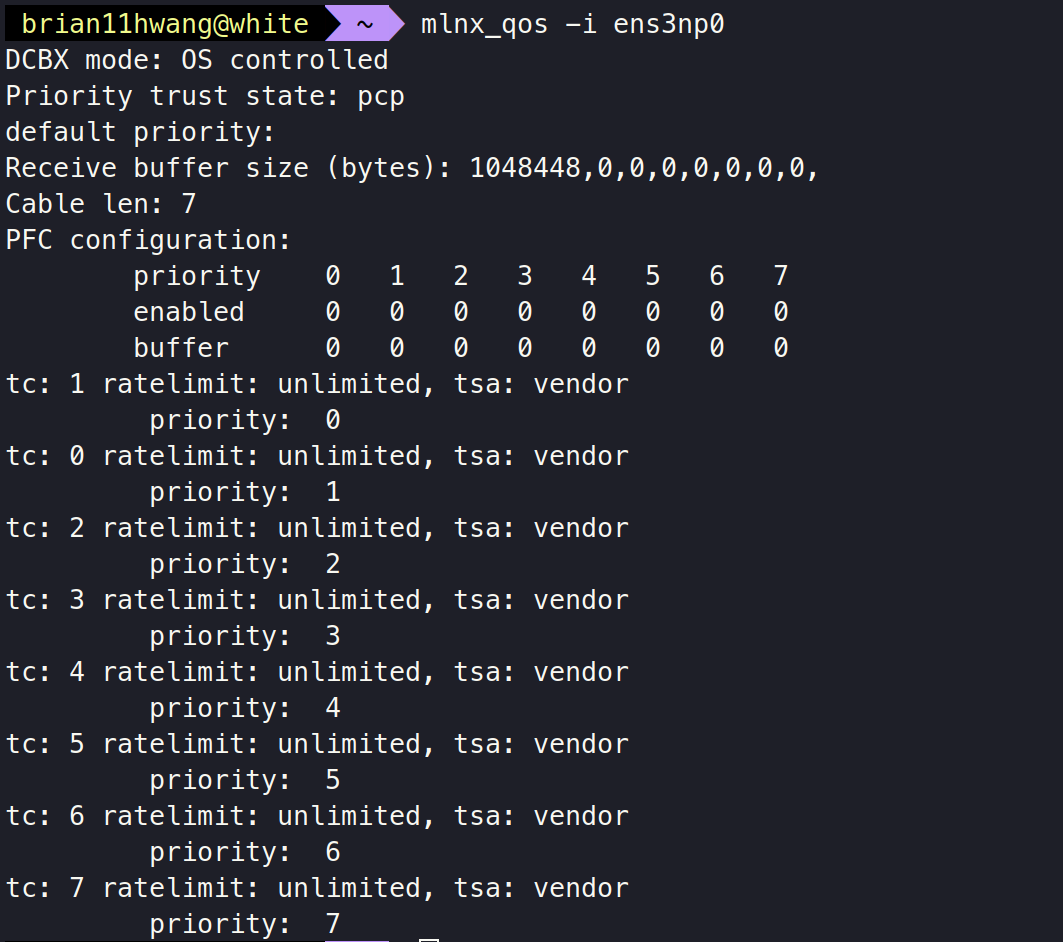

Now that I'm done with the settings, it's time to start using the mlnx_qos tool.

1. See Current QoS Setting

We can see the current QoS setting using:

mlnx_qos -i <interface>

DCBX Mode:

Priority Trust state:

1) Priority Code Point (PCP)

PCP is used as a means for classifying and managing network traffic, and providing QoS in Layer 2 Ethernet networks. It uses the 3-bit PCP field in the VLAN header for the purpose of packet classification.
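To make the bit layout concrete, here is a minimal sketch that splits a 16-bit 802.1Q Tag Control Information (TCI) value into its PCP, DEI and VLAN ID fields. The function name `decode_tci` is just an illustrative helper, not part of any tool used in this post.

```shell
#!/usr/bin/env bash
# Split a 16-bit 802.1Q TCI value into its fields.
# Layout (most significant bit first): PCP (3 bits) | DEI (1 bit) | VID (12 bits).
# decode_tci is a hypothetical helper name used only for illustration.
decode_tci() {
    local tci=$(( $1 ))                  # accepts decimal or 0x-prefixed hex
    local pcp=$(( (tci >> 13) & 0x7 ))   # top 3 bits: priority
    local dei=$(( (tci >> 12) & 0x1 ))   # next bit: drop eligible indicator
    local vid=$(( tci & 0xFFF ))         # low 12 bits: VLAN ID
    echo "pcp=${pcp} dei=${dei} vid=${vid}"
}

# VLAN 2 with priority 2: (2 << 13) | 2 = 0x4002
decode_tci 0x4002
```

With 3 bits, PCP can only express priorities 0 to 7, which matches the eight comma-separated slots that mlnx_qos takes for its per-priority options.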

2) Differentiated Service Code Point (DSCP)

Differentiated services or DiffServ is a computer networking architecture that specifies a simple and scalable mechanism for classifying and managing network traffic and providing quality of service (QoS) on IP networks.

DiffServ uses a 6-bit DSCP in the 8-bit DS field in the IP header for packet classification purposes. The DS field replaces the outdated IPv4 TOS field.
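As a small illustration of that layout, the sketch below splits the 8-bit DS field into the 6-bit DSCP and the remaining 2 bits (used for ECN). `decode_ds_field` is an illustrative helper name, not an existing command.

```shell
#!/usr/bin/env bash
# Split the 8-bit DS field (the former IPv4 TOS byte) into
# DSCP (upper 6 bits) and ECN (lower 2 bits).
# decode_ds_field is a hypothetical helper name used only for illustration.
decode_ds_field() {
    local ds=$(( $1 ))                   # accepts decimal or 0x-prefixed hex
    echo "dscp=$(( (ds >> 2) & 0x3F )) ecn=$(( ds & 0x3 ))"
}

# 0xB8 is the DS byte commonly used for Expedited Forwarding (DSCP 46)
decode_ds_field 0xB8
```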

Now, there are plenty of points that I would like to test:

1. How to use mlnx_qos with VFs in SR-IOV?

2. How to check if mlnx_qos is working well?

3. How much overhead will the VLAN header add?

4. How much performance increase will QoS bring?

5. Is the performance increase due to QoS, or is it offset by the VLAN header overhead?
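For question 3, a rough upper bound can be worked out before measuring anything. The sketch below assumes standard Ethernet framing (8 bytes preamble and SFD, 14 bytes MAC header, 4 bytes FCS, 12 bytes inter-frame gap) and a 4-byte 802.1Q tag, and it only covers the on-wire cost for payloads of at least 46 bytes, not any CPU cost of tagging. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Per-frame bytes on the wire for an untagged Ethernet frame, excluding payload:
# preamble+SFD (8) + MAC header (14) + FCS (4) + inter-frame gap (12) = 38.
ETH_OVERHEAD=38
VLAN_TAG=4   # size of one 802.1Q tag in bytes

# Print the share of wire bandwidth (in percent) taken by the VLAN tag
# for a given payload size. Assumes payload >= 46 bytes (no padding).
vlan_overhead_pct() {
    local payload=$1
    LC_ALL=C awk -v p="$payload" -v o="$ETH_OVERHEAD" -v t="$VLAN_TAG" \
        'BEGIN { printf "%.2f\n", 100 * t / (p + o + t) }'
}

vlan_overhead_pct 1500   # standard MTU
vlan_overhead_pct 9000   # jumbo frames
```

Under these assumptions the tag costs well under 1% of line rate for full-size frames, so a larger difference in a measurement would have to come from somewhere else.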

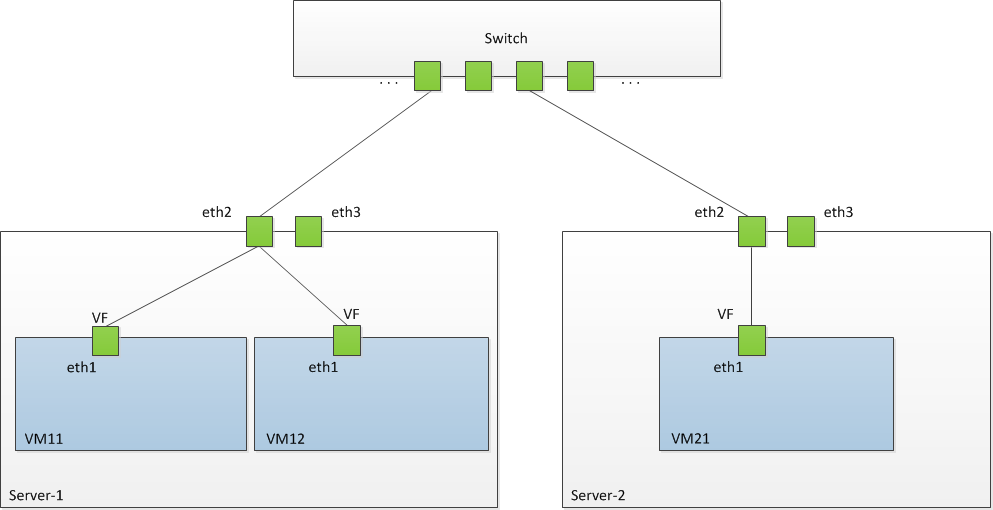

1. How to use mlnx_qos with VFs in SR-IOV?

Now, the base idea of using mlnx_qos was from:

https://enterprise-support.nvidia.com/s/article/howto-configure-qos-over-sr-iov

However, this setup requires a switch that handles PFC (Priority Flow Control) and ETS (Enhanced Transmission Selection).

In that setup:

1. VLAN tags are added in the VM.

2. PFC is handled in the switch, and the PFs do not intervene.

However, as our setup does not include a switch, we found a way to create the VLANs on the host and link the VFs to them:

This was originally done using the vconfig add command, but as it is outdated, we used:

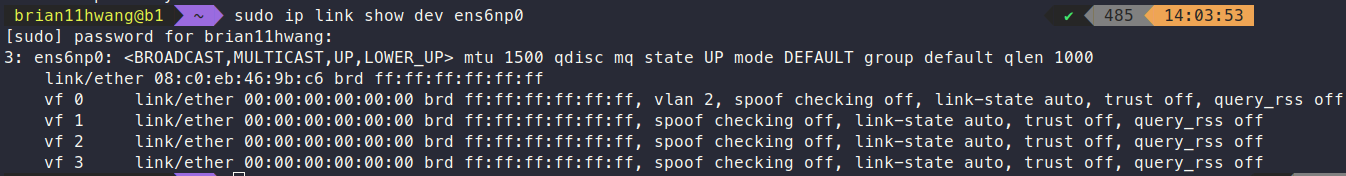

sudo ip link set <PF Interface> vf <VF Num> vlan <VLAN Num> qos <QOS level> mac <VF MAC>

# ex)

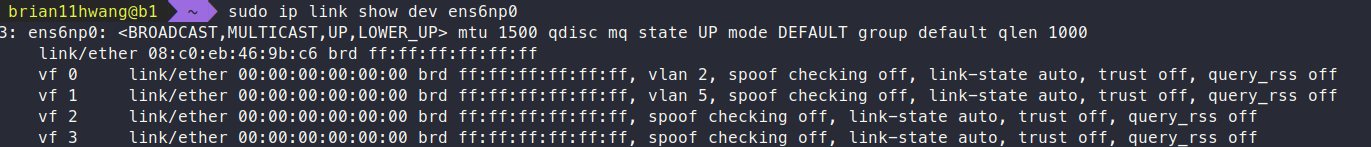

sudo ip link set ens6np0 vf 0 vlan 2 qos 2 mac 52:03:27:CC:BB:AA

Then, if we check via:

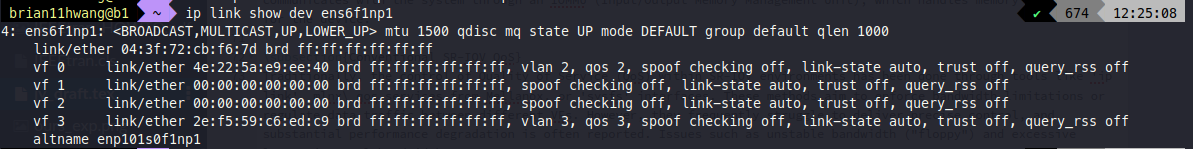

sudo ip link show dev <interface>

# ex)

sudo ip link show dev ens6np0

we can see the VFs have been linked (vf 0 <-> vlan 2)
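When several VFs need the same treatment, the `ip link set ... vf ... vlan ... qos ...` step can be wrapped in a small helper. The sketch below is only an illustration: `assign_vf_vlan` and the `DRY_RUN` variable are names I made up, the second VF mapping is a hypothetical example, and the `mac` argument is left out for brevity. By default it only prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Print (DRY_RUN=1, the default) or run the ip-link command that ties one VF
# to a VLAN ID and a VLAN priority. assign_vf_vlan and DRY_RUN are
# hypothetical names used only for this sketch.
DRY_RUN="${DRY_RUN:-1}"

assign_vf_vlan() {
    local pf=$1 vf=$2 vlan=$3 qos=$4
    # VLAN IDs are 12 bits (1-4094 usable); the qos value is the 3-bit PCP (0-7).
    if (( vlan < 1 || vlan > 4094 || qos < 0 || qos > 7 )); then
        echo "invalid vlan=${vlan} or qos=${qos}" >&2
        return 1
    fi
    local cmd=(ip link set "$pf" vf "$vf" vlan "$vlan" qos "$qos")
    if [[ "$DRY_RUN" == 1 ]]; then
        echo "${cmd[*]}"          # show what would be executed
    else
        sudo "${cmd[@]}"          # actually apply the setting
    fi
}

# VF 0 -> VLAN 2 / priority 2 (as above), VF 1 -> VLAN 5 / priority 5 (hypothetical)
assign_vf_vlan ens6np0 0 2 2
assign_vf_vlan ens6np0 1 5 5
```

Running it with `DRY_RUN=0` would apply the settings, after which `sudo ip link show dev ens6np0` can be used to confirm the result as described above.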

Lastly, we need to couple the qos value (the VLAN priority) with the mlnx_qos setting.

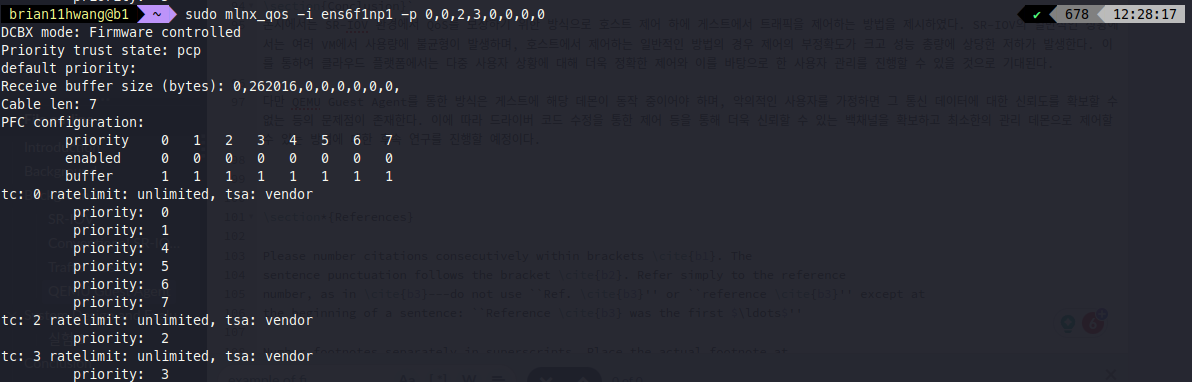

If this is the current ip link setting,

we can do:

sudo mlnx_qos -i ens6f1np1 -p 0,0,2,3,0,0,0,0

to make:

If we want to set a bandwidth limit, we need to 1) set the TSA setting to ets and 2) set the bandwidth share percentages:

sudo mlnx_qos -i ens6f1np1 -s strict,strict,ets,ets,strict,strict,strict,strict -t 0,0,50,50,0,0,0,0

2. How to check if mlnx_qos is working well?

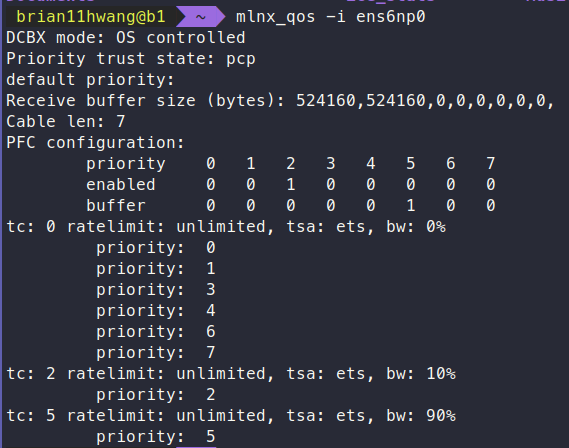

Since we have 2 VMs on each of the sender and the receiver, we have coupled them with priorities 2 and 5:

Now we have plenty of parameters to explore: performance differences with buffer size, priority, rate limit, TSA, and bandwidth share.
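When sweeping the TSA and bandwidth settings, it is easy to mistype one of the eight comma-separated slots. The sketch below builds the `-s` and `-t` arguments from `tc:share` pairs and refuses shares that do not add up to 100, which, as far as I understand, is what mlnx_qos expects for ETS classes. `ets_args` is a helper name invented for this sketch.

```shell
#!/usr/bin/env bash
# Build the "-s ... -t ..." arguments for mlnx_qos from "tc:share" pairs.
# Every listed traffic class becomes "ets" with the given share; all others
# stay "strict" with share 0. ets_args is a hypothetical helper name.
ets_args() {
    local tsa=(strict strict strict strict strict strict strict strict)
    local bw=(0 0 0 0 0 0 0 0)
    local pair tc share sum=0
    for pair in "$@"; do
        tc=${pair%%:*}
        share=${pair##*:}
        if (( tc < 0 || tc > 7 )); then
            echo "traffic class ${tc} out of range 0-7" >&2
            return 1
        fi
        tsa[tc]=ets
        bw[tc]=$share
        sum=$(( sum + share ))
    done
    # Assumption: the ETS shares are expected to add up to 100 percent.
    if (( sum != 100 )); then
        echo "ETS shares sum to ${sum}, expected 100" >&2
        return 1
    fi
    local IFS=,                    # join array elements with commas
    echo "-s ${tsa[*]} -t ${bw[*]}"
}

# Reproduces the arguments used earlier: TC2 and TC3 share the link 50/50
ets_args 2:50 3:50
```

The printed string can then be appended to `sudo mlnx_qos -i <interface>` for each configuration under test.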

Beforehand, we have to check whether mlnx_qos is really guaranteeing QoS.

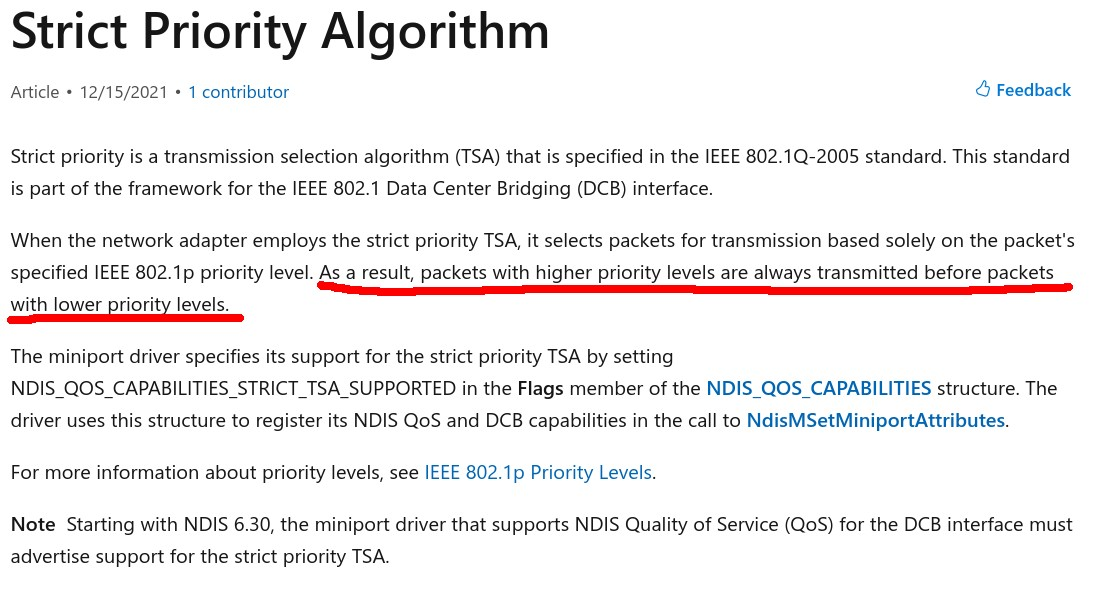

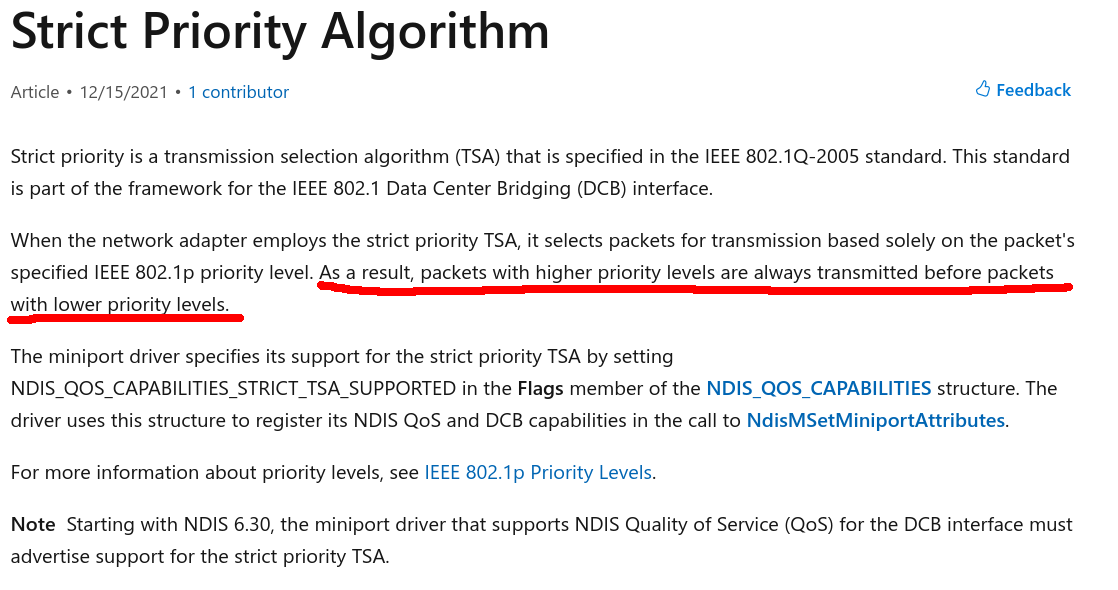

According to https://learn.microsoft.com/en-us/windows-hardware/drivers/network/strict-priority-algorithm

Strict Priority in TSA is:
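In rough terms, strict priority always serves the highest-priority traffic class that has something queued, and lower classes only get the link when the higher ones are idle. The toy sketch below illustrates that selection rule, assuming higher-numbered traffic classes have higher priority. It is a model of the idea, not of how the NIC implements it, and `next_strict_tc` is a made-up name.

```shell
#!/usr/bin/env bash
# Given per-TC queue depths (argument position = traffic class 0..N-1),
# print the traffic class that strict priority would serve next.
# Assumption: a higher TC number means higher priority.
# next_strict_tc is a hypothetical helper name.
next_strict_tc() {
    local depths=("$@") tc
    for (( tc=${#depths[@]}-1; tc>=0; tc-- )); do
        if (( depths[tc] > 0 )); then
            echo "$tc"             # highest non-empty class wins
            return 0
        fi
    done
    echo "none"                    # all queues are empty
}

# TC0, TC2 and TC5 have packets queued; TC5 is served first
next_strict_tc 4 0 7 0 0 3 0 0
```

If this model holds on the NIC, a VM mapped to the higher class should take almost all of the bandwidth whenever both VMs are sending at full rate.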

Therefore, I would like to test on 2 VMs:

1. iperf on both VMs without any setting

2. iperf on both VMs with VLANs at the same priority

3. iperf on both VMs with one VM having higher priority
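To compare the three cases, it can help to look at each VM's share of the combined throughput rather than the raw numbers. The sketch below takes two throughput readings in the same unit and prints both shares in percent. `throughput_share` is an illustrative name, and the input values are whatever iperf reports for the two VMs.

```shell
#!/usr/bin/env bash
# Print each VM's share (in percent) of the combined throughput.
# Both arguments must use the same unit (e.g. Gbps as reported by iperf).
# throughput_share is a hypothetical helper name.
throughput_share() {
    LC_ALL=C awk -v a="$1" -v b="$2" 'BEGIN {
        total = a + b
        if (total <= 0) { print "n/a"; exit }
        printf "%.1f %.1f\n", 100 * a / total, 100 * b / total
    }'
}

# Two VMs at the same rate split the link evenly
throughput_share 30 30
```

An even split is what cases 1 and 2 should show if the VLAN tag alone changes nothing, while case 3 should move clearly away from 50/50 if the priority setting takes effect.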

Although iperf shows lower performance than DPDK, it still reached > 90 Gbps.

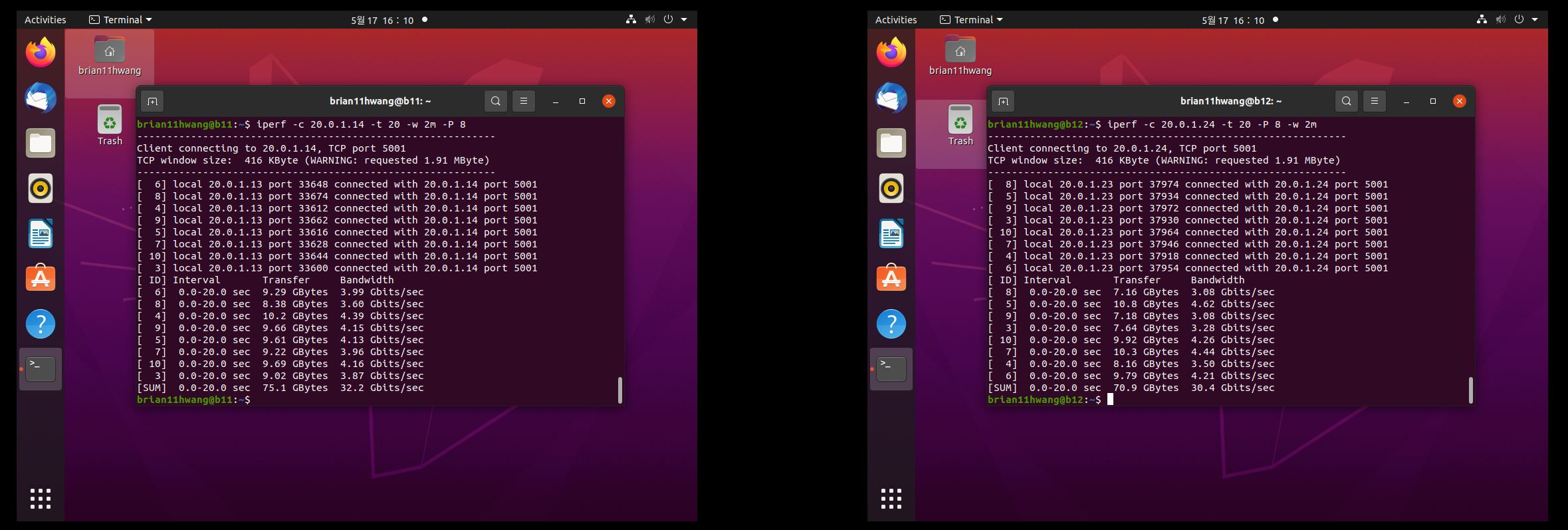

2.1 iperf on both VMs without any setting

When running iperf on both VMs:

It showed about 30 Gbps each.

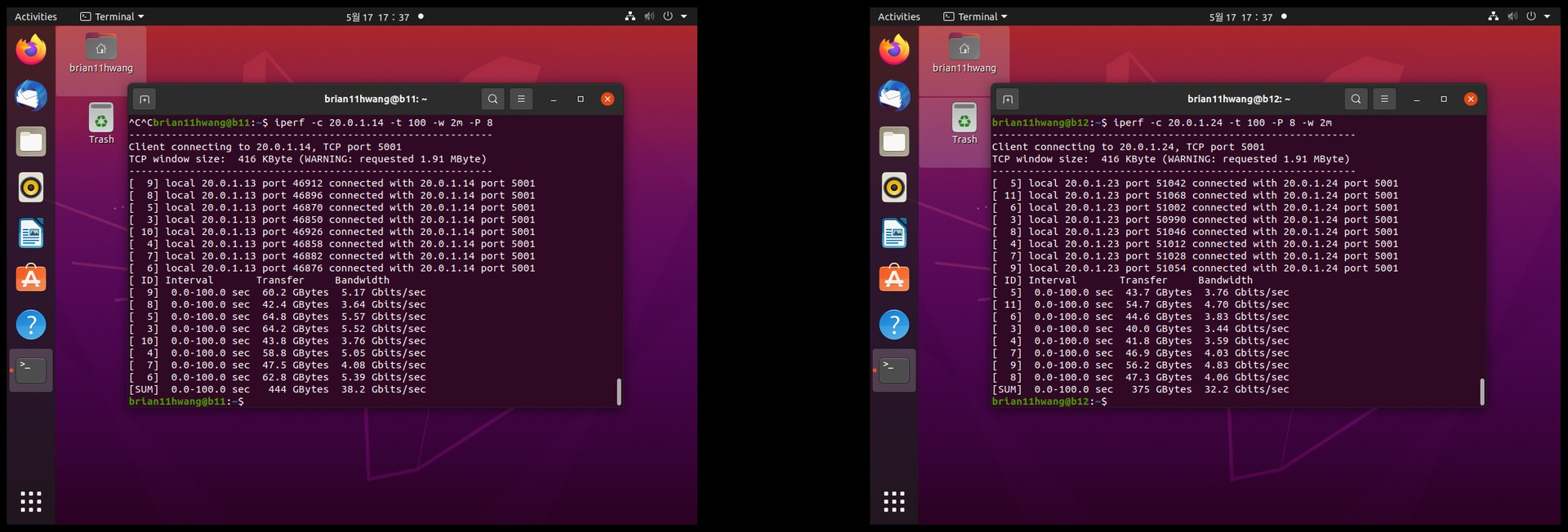

2.2 iperf on both VMs with VLANs at the same priority

2.3 iperf on both VMs with one VM having higher priority

Lastly, I linked each VM (VF) with VLAN tags 2 and 5:

Then,

--> Not much change was observed.