Hubble

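Hubble is an observability and monitoring platform built on Cilium's eBPF-based flow data. It lets you observe and analyze network security policies, service-to-service flows, and traffic from L3 through L7.

Hubble Components

The official Cilium documentation covers observability with Hubble in detail.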

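| Component | Description | Observation scope | Connection method | Where deployed/used | Key characteristics |
|---|---|---|---|---|---|
| Hubble API | gRPC API that serves the network traffic observed on the local node where the Cilium agent runs | Single Cilium node (local) | Unix domain socket (/var/run/cilium/hubble.sock) | Inside each Cilium agent Pod | Provides L3-L7 network events. Not directly accessible from outside |
| Hubble Relay | Aggregates the Hubble APIs of multiple Cilium nodes and serves unified traffic data for the whole cluster, or for several clusters in a ClusterMesh | Whole cluster or ClusterMesh | Internal: talks to the Hubble API. External: gRPC (CLI and UI connect here) | Runs as a separate Pod (Deployment, etc.) | Centralized data collector. Security and authentication can be configured. Main backend for the CLI and UI |
| Hubble UI | Web UI that automatically discovers and visualizes communication flows between services in the cluster | Whole cluster or ClusterMesh | Talks to Hubble Relay over gRPC or HTTP | Deployed as a Pod and accessed from a web browser | Service dependency map, filtering UI, L3/L4/L7 data visualization. Offers a Grafana-like UX |
| Hubble CLI | CLI tool that queries traffic flows by connecting to the Hubble API or Hubble Relay with the hubble command | Local node or whole cluster | (1) Unix domain socket (direct API connection) (2) Hubble Relay address (gRPC) | Inside a Cilium Pod or on an external client | Supports real-time flow queries, filtering, JSON output, and other commands |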

Installing Hubble

After installing Hubble, check its basic configuration.

Installing Hubble with helm deploys the hubble-relay and hubble-ui Deployments, along with Secrets and other resources, to the cluster.

(⎈|HomeLab:N/A) root@k8s-ctr:~# helm upgrade cilium cilium/cilium --namespace kube-system --reuse-values \
  --set hubble.enabled=true \
  --set hubble.relay.enabled=true \
  --set hubble.ui.enabled=true \
  --set hubble.ui.service.type=NodePort \
  --set hubble.ui.service.nodePort=31234 \
  --set hubble.export.static.enabled=true \
  --set hubble.export.static.filePath=/var/run/cilium/hubble/events.log \
  --set prometheus.enabled=true \
  --set operator.prometheus.enabled=true \
  --set hubble.metrics.enableOpenMetrics=true \
  --set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}"

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium status

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Envoy DaemonSet: OK

\__/¯¯\__/ Hubble Relay: OK

\__/ ClusterMesh: disabled

...

Deployment hubble-relay Desired: 1, Ready: 1/1, Available: 1/1

Deployment hubble-ui Desired: 1, Ready: 1/1, Available: 1/1

Containers: ...

hubble-relay Running: 1

hubble-ui Running: 1

...

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep -i hubble

enable-hubble true

enable-hubble-open-metrics true

hubble-disable-tls false

hubble-export-allowlist

hubble-export-denylist

hubble-export-fieldmask

hubble-export-file-max-backups 5

hubble-export-file-max-size-mb 10

hubble-export-file-path /var/run/cilium/hubble/events.log

hubble-listen-address :4244

hubble-metrics dns drop tcp flow port-distribution icmp httpV2:exemplars=true;labelsContext=source_ip,source_namespace,source_workload,destination_ip,destination_namespace,destination_workload,traffic_direction

hubble-metrics-server :9965

hubble-metrics-server-enable-tls false

hubble-socket-path /var/run/cilium/hubble.sock

hubble-tls-cert-file /var/lib/cilium/tls/hubble/server.crt

hubble-tls-client-ca-files /var/lib/cilium/tls/hubble/client-ca.crt

hubble-tls-key-file /var/lib/cilium/tls/hubble/server.key

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get secret -n kube-system | grep -iE 'cilium-ca|hubble'

cilium-ca Opaque 2 4d14h

hubble-relay-client-certs kubernetes.io/tls 3 4d14h

hubble-server-certs kubernetes.io/tls 3 4d14hHubble 구조

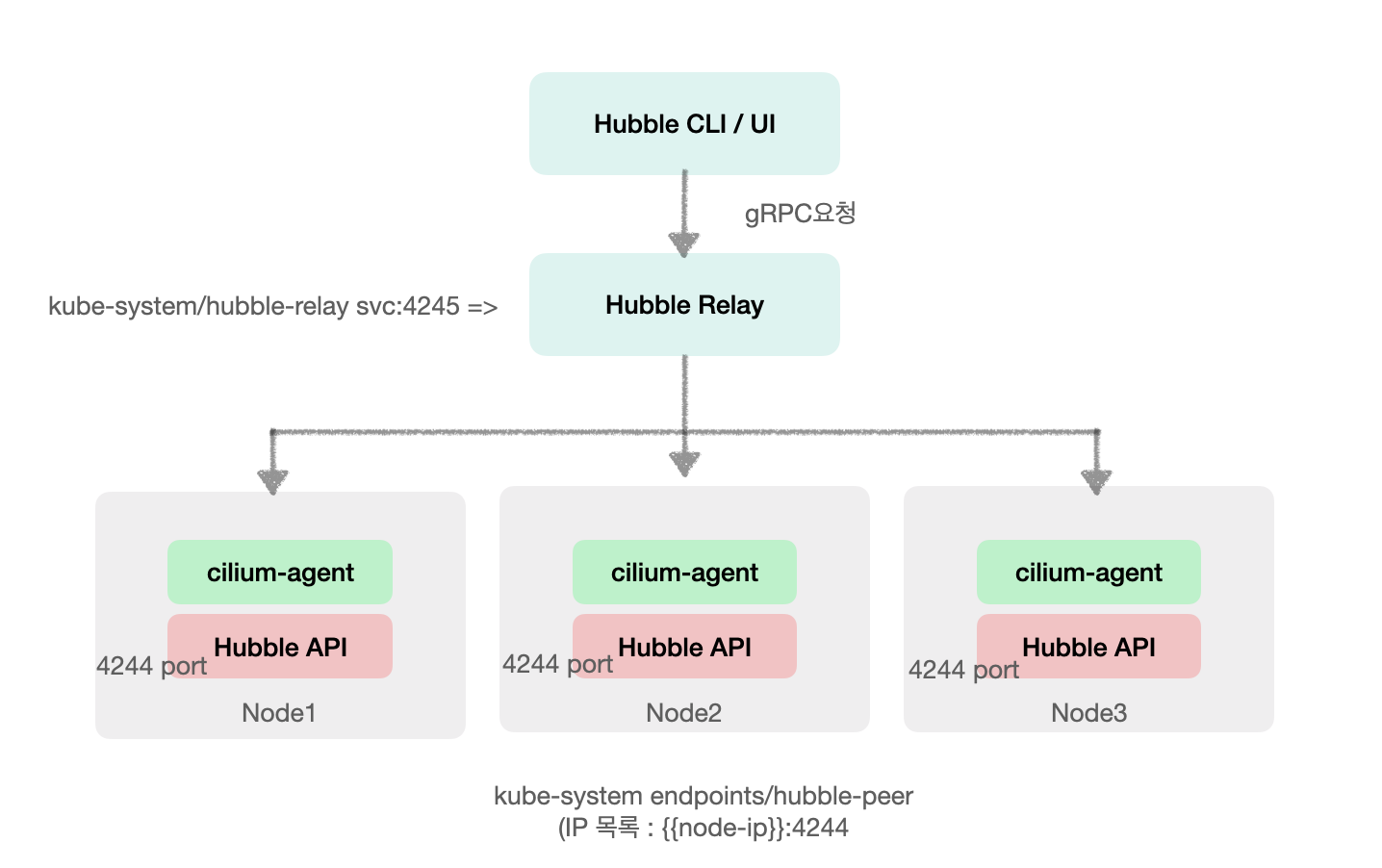

Hubble의 구조는 정리하면 다음과 같다.

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc,ep -n kube-system | grep -i hubble-relay

Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

service/hubble-relay ClusterIP 10.96.173.198 <none> 80/TCP 56s

endpoints/hubble-relay 172.20.1.6:4245 56s

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc,ep -n kube-system | grep -i hubble-peer

Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

service/hubble-peer ClusterIP 10.96.21.177 <none> 443/TCP 60s

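endpoints/hubble-peer 192.168.10.100:4244,192.168.10.101:4244,192.168.10.102:4244 60s

Hubble Relay uses the kube-system/hubble-peer Service, whose endpoints are the Hubble API (port 4244) exposed by the cilium-agent on each node, to discover its peers, and then connects to each of them over gRPC. This is how it collects the network flow data from every node and serves it from one central place.

Comparing a node before and after Hubble is deployed shows that port 4244, the Hubble API, is newly listening.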

(⎈|HomeLab:N/A) root@k8s-ctr:~# ss -tnlp | grep 4244

LISTEN 0 4096 *:4244 *:* users:(("cilium-agent",pid=6955,fd=52))

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl describe pod -n kube-system -l k8s-app=hubble-relay

Name: hubble-relay-5dcd46f5c-6pmq9

...

Containers:

hubble-relay:

...

Port: 4245/TCP

Host Port: 0/TCP

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc,ep -n kube-system hubble-relay

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/hubble-relay ClusterIP 10.96.180.56 <none> 80/TCP 4d14h

NAME ENDPOINTS AGE

endpoints/hubble-relay 172.20.2.58:4245 4d14h

Hubble Architecture - Code Walkthrough

Let's read the code to see how Hubble Relay discovers the Hubble APIs (its peers) and communicates with them over gRPC.

//cilium/pkg/hubble/relay/pool/manager.go

func (m *PeerManager) Start() {

m.wg.Add(3)

go func() {

defer m.wg.Done()

// Keep the peer list up to date in real time through Hubble Relay's gRPC connection to the Hubble agents

m.watchNotifications()

}()

go func() {

defer m.wg.Done()

// Establish the gRPC connections to the peers

m.manageConnections()

}()

go func() {

defer m.wg.Done()

// Report connection status

m.reportConnectionStatus()

}()

}

1. watchNotifications

Hubble Relay creates the Peer objects it will connect to over gRPC, using the default ServerPort defined in the code when a peer address does not specify a port.

//cilium/pkg/hubble/relay/pool/manager.go

func (m *PeerManager) watchNotifications() {

ctx, cancel := context.WithCancel(context.Background())

defer cancel()

go func() {

<-m.stop

cancel()

}()

connect:

for {

cl, err := m.opts.peerClientBuilder.Client(m.opts.peerServiceAddress)

...

client, err := cl.Notify(ctx, &peerpb.NotifyRequest{})

...

cn, err := client.Recv()

...

// Build a Peer from the change notification

p := peerTypes.FromChangeNotification(cn)

switch cn.GetType() {

case peerpb.ChangeNotificationType_PEER_ADDED:

m.upsert(p)

case peerpb.ChangeNotificationType_PEER_DELETED:

m.remove(p)

case peerpb.ChangeNotificationType_PEER_UPDATED:

m.upsert(p)

}

}

}

}

//cilium/pkg/hubble/peer/types/peer.go

// FromChangeNotification creates a new Peer from a ChangeNotification.

func FromChangeNotification(cn *peerpb.ChangeNotification) *Peer {

if cn == nil {

return (*Peer)(nil)

}

var err error

var addr net.Addr

switch a := cn.GetAddress(); {

...

default:

var host, port string

if host, port, err = net.SplitHostPort(a); err == nil {

...

// If the address is a bare IP rather than an explicit IP and port, the Peer's port is set to the default server port.

} else if ip := net.ParseIP(a); ip != nil {

err = nil

addr = &net.TCPAddr{

IP: ip,

Port: defaults.ServerPort,

}

}

}

...

return &Peer{

Name: cn.GetName(),

Address: addr,

TLSEnabled: tlsEnabled,

TLSServerName: tlsServerName,

}

}
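The port fallback in the excerpt above can be tried in isolation. The following is a minimal sketch, not the Cilium implementation: `peerTCPAddr` is a hypothetical helper that handles only IP literals and leaves out the Unix socket and TLS handling hidden behind the elided parts of `FromChangeNotification`. The value 4244 is the default `ServerPort` mentioned in this section.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// defaultServerPort mirrors the default ServerPort (4244) discussed in this section.
const defaultServerPort = 4244

// peerTCPAddr is a hypothetical helper (not the Cilium function). It accepts
// "ip:port" or a bare IP and applies the default port when none is given.
func peerTCPAddr(a string) (*net.TCPAddr, error) {
	if host, port, err := net.SplitHostPort(a); err == nil {
		ip := net.ParseIP(host)
		if ip == nil {
			return nil, fmt.Errorf("invalid IP in %q", a)
		}
		p, err := strconv.ParseUint(port, 10, 16)
		if err != nil {
			return nil, fmt.Errorf("invalid port in %q: %w", a, err)
		}
		return &net.TCPAddr{IP: ip, Port: int(p)}, nil
	}
	// No port present: fall back to the default server port.
	if ip := net.ParseIP(a); ip != nil {
		return &net.TCPAddr{IP: ip, Port: defaultServerPort}, nil
	}
	return nil, fmt.Errorf("unsupported address %q", a)
}

func main() {
	for _, a := range []string{"192.168.10.100", "192.168.10.101:4244", "not-an-ip"} {
		addr, err := peerTCPAddr(a)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(a, "->", addr)
	}
}
```

This matches what the hubble-peer endpoints show: every node address ends up paired with port 4244 unless a different port is supplied explicitly.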

//cilium/pkg/hubble/defaults

// The default server port is defined in the code as 4244.

const (

// ServerPort is the default port for hubble server when a provided

// listen address does not include one.

ServerPort = 4244

...

)

2. manageConnections

Whenever peer information is updated, and also at a regular interval, it checks the state of each peer and attempts to connect.
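The retry bookkeeping that `connect` performs with the `connAttempts` and `nextConnAttempt` fields can be illustrated with a small standalone sketch. Everything in it is an assumption made for illustration: `peerState`, `backoffDuration`, and the doubling-with-cap policy are hypothetical and are not taken from Cilium's backoff package, and the way `ignoreBackoff` is evaluated is guessed from its name because that part of `connect` is elided in the excerpt.

```go
package main

import (
	"fmt"
	"time"
)

// peerState holds the two retry fields that appear in the connect excerpt.
type peerState struct {
	connAttempts    int
	nextConnAttempt time.Time
}

// backoffDuration is a hypothetical policy: base doubled per failed attempt,
// capped at limit. Cilium's own backoff implementation may differ.
func backoffDuration(attempt int, base, limit time.Duration) time.Duration {
	d := base
	for i := 0; i < attempt && d < limit; i++ {
		d *= 2
	}
	if d > limit {
		d = limit
	}
	return d
}

// shouldAttempt reports whether a connection attempt is due at time now.
// ignoreBackoff models the "peer was just updated" path.
func (p *peerState) shouldAttempt(now time.Time, ignoreBackoff bool) bool {
	return ignoreBackoff || !now.Before(p.nextConnAttempt)
}

// recordFailure schedules the next attempt and increments the counter,
// in the same order as the excerpt.
func (p *peerState) recordFailure(now time.Time, base, limit time.Duration) time.Duration {
	d := backoffDuration(p.connAttempts, base, limit)
	p.nextConnAttempt = now.Add(d)
	p.connAttempts++
	return d
}

// recordSuccess resets the retry state once a connection is established.
func (p *peerState) recordSuccess() {
	p.nextConnAttempt = time.Time{}
	p.connAttempts = 0
}

func main() {
	p := &peerState{}
	now := time.Now()
	for i := 0; i < 4; i++ {
		d := p.recordFailure(now, time.Second, 5*time.Second)
		fmt.Printf("attempt %d failed, next try in %v\n", p.connAttempts, d)
	}
	fmt.Println("periodic check may connect now:", p.shouldAttempt(now, false))
	fmt.Println("updated peer may connect now:", p.shouldAttempt(now, true))
	p.recordSuccess()
	fmt.Println("attempts after success:", p.connAttempts)
}
```

The point of the two paths is that a peer whose address just changed is worth retrying immediately, while the periodic sweep should not hammer a peer that keeps failing.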

//cilium/pkg/hubble/relay/pool/manager.go

func (m *PeerManager) manageConnections() {

for {

select {

case <-m.stop:

return

// Attempt a connection when peer information is updated

case name := <-m.updated:

m.mu.RLock()

p := m.peers[name]

m.mu.RUnlock()

m.wg.Add(1)

go func(p *peer) {

defer m.wg.Done()

// a connection request has been made, make sure to attempt a connection

m.connect(p, true)

}(p)

// Periodically attempt to connect to every peer

case <-time.After(m.opts.connCheckInterval):

m.mu.RLock()

for _, p := range m.peers {

m.wg.Add(1)

go func(p *peer) {

defer m.wg.Done()

m.connect(p, false)

}(p)

}

m.mu.RUnlock()

}

}

}

...

func (m *PeerManager) connect(p *peer, ignoreBackoff bool) {

...

// Create the actual gRPC connection.

scopedLog.Info("Connecting")

conn, err := m.opts.clientConnBuilder.ClientConn(p.Address.String(), p.TLSServerName)

if err != nil {

duration := m.opts.backoff.Duration(p.connAttempts)

p.nextConnAttempt = now.Add(duration)

p.connAttempts++

scopedLog.Warn(

"Failed to create gRPC client",

logfields.Error, err,

logfields.NextTryIn, duration,

)

return

}

p.nextConnAttempt = time.Time{}

p.connAttempts = 0

p.conn = conn

scopedLog.Info("Connected")

}Hubble UI 접속

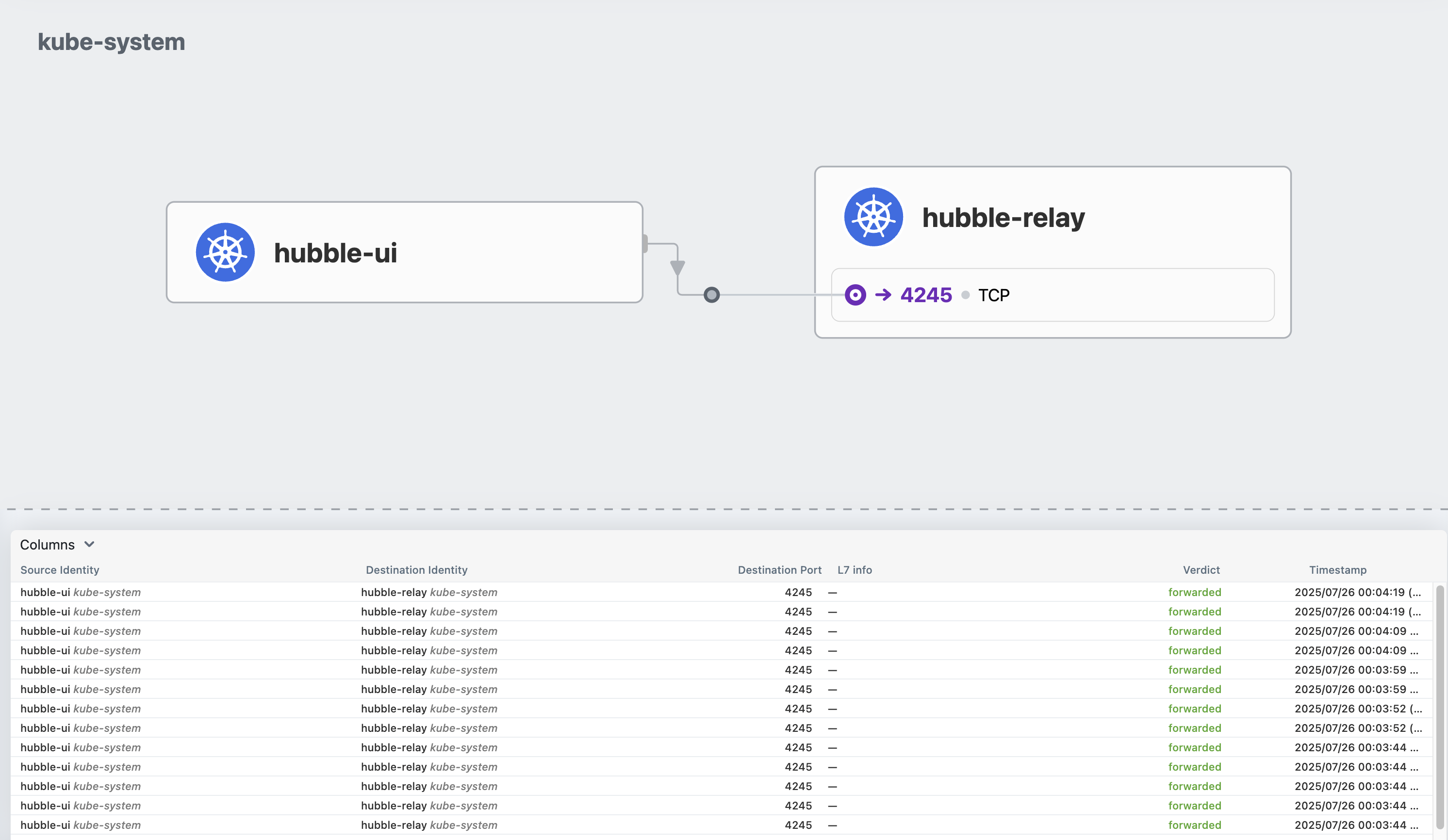

이를 Hubble UI 접속을 통해 알아보자

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get svc,ep -n kube-system hubble-ui

Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/hubble-ui NodePort 10.96.137.247 <none> 80:31234/TCP 3m43s

NAME ENDPOINTS AGE

endpoints/hubble-ui 172.20.2.101:8081 3m43s

Using the Hubble CLI

Use the Hubble CLI to monitor traffic in real time.

Installing the Hubble CLI

HUBBLE_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/hubble/master/stable.txt)

HUBBLE_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then HUBBLE_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/hubble/releases/download/$HUBBLE_VERSION/hubble-linux-${HUBBLE_ARCH}.tar.gz{,.sha256sum}

sudo tar xzvfC hubble-linux-${HUBBLE_ARCH}.tar.gz /usr/local/bin

which hubble

hubble status

Monitoring

To let the Hubble CLI on the local machine reach Hubble Relay, start port-forwarding in the background and then check that monitoring works.

cilium hubble port-forward&

Hubble Relay is available at 127.0.0.1:4245

# Now you can validate that you can access the Hubble API via the installed CLI

hubble status

Healthcheck (via localhost:4245): Ok

Current/Max Flows: 12,285/12,285 (100.00%)

Flows/s: 41.20

# Check the default address of the hubble (API) server

hubble config view

...

port-forward-port: "4245"

server: localhost:4245

The following are some of the hubble observe options. Use them to narrow monitoring down to the flows you care about.

| Option | Description | Example |
|---|---|---|
| --from-pod | Source Pod (in <namespace>/<pod-name> form) | --from-pod kube-system/cilium-abc |
| --to-pod | Destination Pod | --to-pod default/myapp |
| --from-ip | Source IP address | --from-ip 10.0.0.12 |
| --to-ip | Destination IP address | --to-ip 10.0.1.25 |
| --from-fqdn | Source Fully Qualified Domain Name (FQDN) | --from-fqdn api.example.com |
| --to-fqdn | Destination FQDN | --to-fqdn google.com |
| --label | Label filter (applies to both source and destination) | --label k8s:app=frontend |
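To make the effect of combining options concrete, here is a small sketch of how such filters could be evaluated against a flow. It is an illustration under stated assumptions, not Hubble's implementation: the `endpoint`, `flow`, and `filter` types are hypothetical, different options are assumed to be combined with a logical AND, a pod filter without a namespace is assumed to mean the `default` namespace, and the pod name is assumed to be matched as a prefix (which is consistent with `--pod deathstar` matching `default/deathstar-8c4c77fb7-8wdts` later in this post). The pod IPs are made up.

```go
package main

import (
	"fmt"
	"strings"
)

// endpoint and flow are simplified, hypothetical stand-ins for Hubble flow data.
type endpoint struct {
	Namespace, Pod, IP string
}

type flow struct {
	Source, Destination endpoint
}

// filter mirrors four of the options in the table; an empty field means "not set".
type filter struct {
	FromPod, ToPod, FromIP, ToIP string
}

// podMatches compares a "[namespace/]pod-name" filter against an endpoint.
// Assumptions: a missing namespace means "default", and the pod name is
// treated as a prefix.
func podMatches(want string, e endpoint) bool {
	ns, name := "default", want
	if i := strings.Index(want, "/"); i >= 0 {
		ns, name = want[:i], want[i+1:]
	}
	return e.Pod != "" && e.Namespace == ns && strings.HasPrefix(e.Pod, name)
}

// matches returns true only when every option that is set matches (logical AND).
func (f filter) matches(fl flow) bool {
	if f.FromPod != "" && !podMatches(f.FromPod, fl.Source) {
		return false
	}
	if f.ToPod != "" && !podMatches(f.ToPod, fl.Destination) {
		return false
	}
	if f.FromIP != "" && f.FromIP != fl.Source.IP {
		return false
	}
	if f.ToIP != "" && f.ToIP != fl.Destination.IP {
		return false
	}
	return true
}

func main() {
	flows := []flow{
		// Pod IPs below are illustrative only.
		{Source: endpoint{Namespace: "kube-system", Pod: "coredns-674b8bbfcf-pdpmn", IP: "172.20.0.10"},
			Destination: endpoint{IP: "192.168.10.100"}},
		{Source: endpoint{IP: "192.168.10.102"},
			Destination: endpoint{IP: "192.168.10.100"}},
	}
	f := filter{FromPod: "kube-system/coredns", ToIP: "192.168.10.100"}
	for _, fl := range flows {
		fmt.Printf("%s/%s -> %s matched=%v\n",
			fl.Source.Namespace, fl.Source.Pod, fl.Destination.IP, f.matches(fl))
	}
}
```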

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe -f

Jul 25 15:13:25.416: 192.168.10.100:49996 (kube-apiserver) <- kube-system/hubble-ui-76d4965bb6-vbjtc:8081 (ID:64472) to-network FORWARDED (TCP Flags: ACK, PSH)

Jul 25 15:13:26.683: 127.0.0.1:32926 (world) <> kube-system/coredns-674b8bbfcf-pdpmn (ID:3810) pre-xlate-rev TRACED (TCP)

Jul 25 15:13:26.683: 127.0.0.1:32926 (world) <> kube-system/coredns-674b8bbfcf-pdpmn (ID:3810) pre-xlate-rev TRACED (TCP)

Jul 25 15:13:27.434: 127.0.0.1:34640 (world) <> 192.168.10.102 (host) pre-xlate-rev TRACED (TCP)

Jul 25 15:13:27.484: 127.0.0.1:55200 (world) <> kube-system/hubble-relay-5dcd46f5c-78fnx (ID:29925) pre-xlate-rev TRACED (TCP)

Jul 25 15:13:27.484: 127.0.0.1:55200 (world) <> kube-system/hubble-relay-5dcd46f5c-78fnx (ID:29925) pre-xlate-rev TRACED (TCP)

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe --from-pod kube-system/coredns-674b8bbfcf-pdpmn -f

Jul 25 15:14:19.621: kube-system/coredns-674b8bbfcf-pdpmn:53128 (ID:3810) -> 192.168.10.100:6443 (host) to-stack FORWARDED (TCP Flags: ACK)

Jul 25 15:14:23.375: 10.0.2.15:49350 (host) <- kube-system/coredns-674b8bbfcf-pdpmn:8080 (ID:3810) to-stack FORWARDED (TCP Flags: SYN, ACK)

Jul 25 15:14:23.375: 10.0.2.15:49350 (host) <- kube-system/coredns-674b8bbfcf-pdpmn:8080 (ID:3810) to-stack FORWARDED (TCP Flags: ACK, PSH)

Jul 25 15:14:23.375: 10.0.2.15:49350 (host) <- kube-system/coredns-674b8bbfcf-pdpmn:8080 (ID:3810) to-stack FORWARDED (TCP Flags: ACK, FIN)

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe --to-ip 192.168.10.100 -f

Jul 25 15:15:11.670: 192.168.10.102:37974 (host) -> 192.168.10.100:6443 (kube-apiserver) to-network FORWARDED (TCP Flags: ACK)

Jul 25 15:15:13.414: 192.168.10.100:49953 (kube-apiserver) <- kube-system/hubble-ui-76d4965bb6-vbjtc:8081 (ID:64472) to-network FORWARDED (TCP Flags: ACK, PSH)

Jul 25 15:15:13.944: kube-system/hubble-ui-76d4965bb6-vbjtc:47176 (ID:64472) -> 192.168.10.100:6443 (kube-apiserver) to-network FORWARDED (TCP Flags: ACK, PSH)

Jul 25 15:15:14.882: 192.168.10.102:56384 (host) -> 192.168.10.100:6443 (kube-apiserver) to-network FORWARDED (TCP Flags: ACK)

Jul 25 15:15:18.256: 192.168.10.101:49758 (host) -> 192.168.10.100:6443 (kube-apiserver) to-network FORWARDED (TCP Flags: ACK, PSH)

Jul 25 15:15:19.404: 192.168.10.100:49953 (kube-apiserver) <- kube-system/hubble-ui-76d4965bb6-vbjtc:8081 (ID:64472) to-network FORWARDED (TCP Flags: ACK, PSH)

Jul 25 15:15:19.405: kube-system/hubble-relay-5dcd46f5c-78fnx:51508 (ID:29925) -> 192.168.10.100:4244 (kube-apiserver) to-network FORWARDED (TCP Flags: ACK, PSH)Starwars Demo

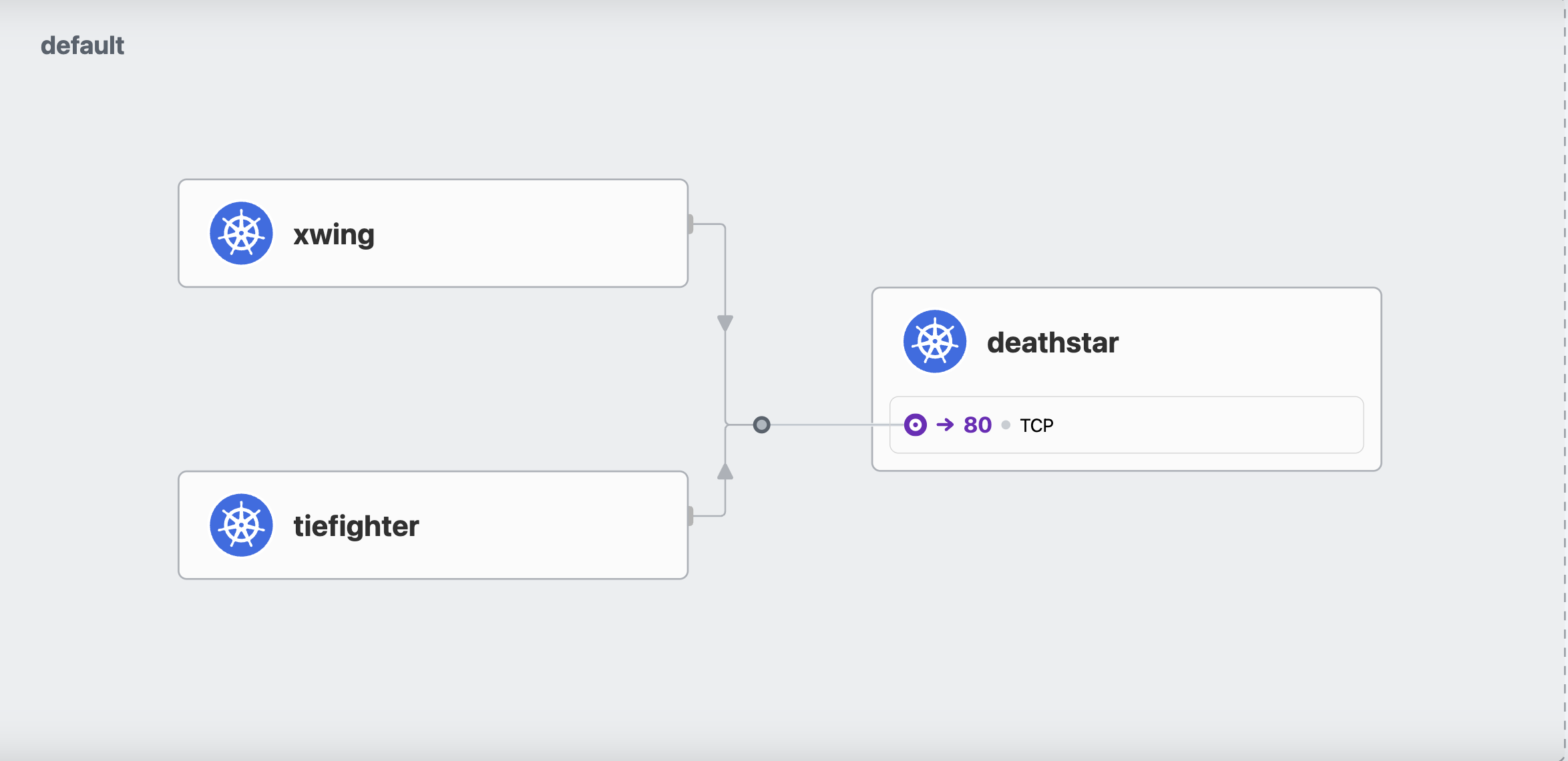

Cilium에서 제공하는 Demo를 통해 접근 제어를 위한 다양한 보안 정책을 테스트 해본다.

Demo 구성

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

deathstar-8c4c77fb7-7rqzr 1/1 Running 0 22m app.kubernetes.io/name=deathstar,class=deathstar,org=empire,pod-template-hash=8c4c77fb7

deathstar-8c4c77fb7-8wdts 1/1 Running 0 22m app.kubernetes.io/name=deathstar,class=deathstar,org=empire,pod-template-hash=8c4c77fb7

tiefighter 1/1 Running 0 22m app.kubernetes.io/name=tiefighter,class=tiefighter,org=empire

xwing 1/1 Running 0 22m app.kubernetes.io/name=xwing,class=xwing,org=alliance

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get deploy,svc,ep deathstar

Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deathstar 2/2 2 2 22m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/deathstar ClusterIP 10.96.60.53 <none> 80/TCP 22m

NAME ENDPOINTS AGE

endpoints/deathstar 172.20.1.85:80,172.20.2.33:80 22m

Scenario 1) No policy applied

1-1) tiefighter -> deathstar : request-landing

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

Ship landed

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe -f --protocol tcp --from-identity $TIEFIGHTERID

Jul 25 15:56:06.052: default/tiefighter:51000 (ID:62396) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: SYN)

Jul 25 15:56:06.052: default/tiefighter:51000 (ID:62396) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: ACK)

Jul 25 15:56:06.053: default/tiefighter:51000 (ID:62396) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

Jul 25 15:56:06.053: default/tiefighter:51000 (ID:62396) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

Jul 25 15:56:06.053: default/tiefighter:51000 (ID:62396) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

Jul 25 15:56:06.053: default/tiefighter:51000 (ID:62396) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 25 15:56:06.053: default/tiefighter:51000 (ID:62396) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

Jul 25 15:56:06.053: default/tiefighter:51000 (ID:62396) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

Jul 25 15:56:06.054: default/tiefighter:51000 (ID:62396) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

Jul 25 15:56:06.098: default/tiefighter (ID:62396) <> 10.96.60.53:80 (world) pre-xlate-fwd TRACED (TCP)

Jul 25 15:56:06.098: default/tiefighter (ID:62396) <> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) post-xlate-fwd TRANSLATED (TCP)

Jul 25 15:56:06.098: default/tiefighter:51000 (ID:62396) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-network FORWARDED (TCP Flags: SYN)

Jul 25 15:56:06.099: default/tiefighter:51000 (ID:62396) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-network FORWARDED (TCP Flags: ACK)

Jul 25 15:56:06.099: default/tiefighter:51000 (ID:62396) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-network FORWARDED (TCP Flags: ACK, PSH)

Jul 25 15:56:06.100: default/tiefighter:51000 (ID:62396) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-network FORWARDED (TCP Flags: ACK, FIN)

1-2) xwing -> deathstar : request-landing

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

Ship landed

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe -f --protocol tcp --from-identity $XWINGID

Jul 25 15:53:42.883: default/xwing (ID:6305) <> 10.96.60.53:80 (world) pre-xlate-fwd TRACED (TCP)

Jul 25 15:53:42.883: default/xwing (ID:6305) <> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) post-xlate-fwd TRANSLATED (TCP)

Jul 25 15:53:42.883: default/xwing:37840 (ID:6305) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: SYN)

Jul 25 15:53:42.883: default/xwing:37840 (ID:6305) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: ACK)

Jul 25 15:53:42.883: default/xwing:37840 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

Jul 25 15:53:42.883: default/xwing:37840 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

Jul 25 15:53:42.883: default/xwing:37840 (ID:6305) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 25 15:53:42.883: default/xwing:37840 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

Jul 25 15:53:42.883: default/xwing:37840 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

Jul 25 15:53:42.883: default/xwing:37840 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts (ID:50122) pre-xlate-rev TRACED (TCP)

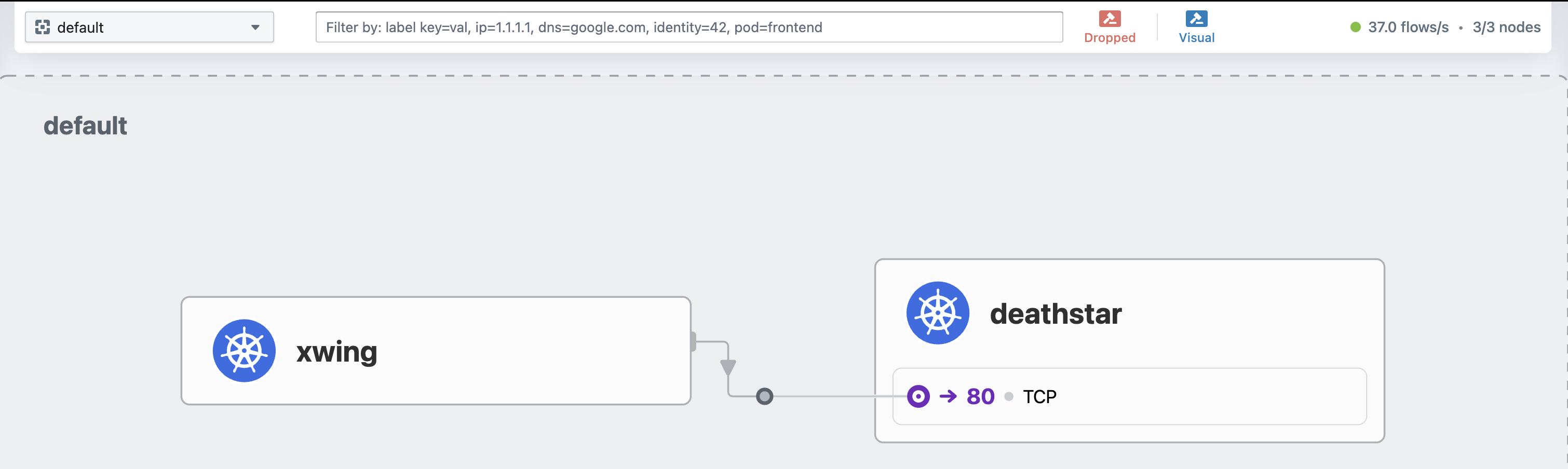

Jul 25 15:53:42.884: default/xwing:37840 (ID:6305) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: ACK, FIN)시나리오 2) empire에 속한 그룹에 한해 deathstar 호출 가능

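Applying an L3/L4 Policy

Cilium defines security policies in terms of Pod labels rather than IP addresses.

For example, you can create an access restriction policy so that only the group carrying the org=empire label can reach the deathstar service.

This is an L3/L4 network security policy, operating at the IP and TCP level.

With Cilium, explicitly allowing only the request traffic is enough: the corresponding reply traffic is allowed automatically.

This works because Cilium tracks TCP/UDP connection state in its own connection tracking (conntrack) table, kept in eBPF maps rather than in the kernel's netfilter conntrack, and the eBPF program checks that state to decide whether a packet belongs to a connection that was already allowed.

In other words, if the policy allows only the traffic in the direction of the client's request to the server, the reply to that request is allowed automatically by conntrack.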
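The idea can be shown with a toy model. The sketch below is only a conceptual illustration and not Cilium's datapath: `tuple`, `datapath`, and `handle` are hypothetical names, the policy is a plain Go callback, the IP addresses are made up, and one policy function is applied to every packet for simplicity. It shows that a policy lookup is needed only for the first packet of a connection, while replies are recognized by looking up the reversed tuple in the connection tracking table.

```go
package main

import "fmt"

// tuple identifies one direction of a connection.
type tuple struct {
	SrcIP, DstIP     string
	SrcPort, DstPort uint16
	Proto            string
}

// reverse returns the tuple that a reply packet would carry.
func (t tuple) reverse() tuple {
	return tuple{SrcIP: t.DstIP, DstIP: t.SrcIP, SrcPort: t.DstPort, DstPort: t.SrcPort, Proto: t.Proto}
}

// datapath is a toy model: a policy callback plus a connection tracking table.
type datapath struct {
	allowed func(tuple) bool
	ct      map[tuple]bool
}

// handle returns a verdict for one packet.
func (d *datapath) handle(t tuple) string {
	if d.ct[t] {
		return "FORWARDED (established)"
	}
	if d.ct[t.reverse()] {
		// Reply to a tracked connection: no policy lookup needed.
		return "FORWARDED (reply)"
	}
	if d.allowed(t) {
		d.ct[t] = true // first packet of an allowed connection creates the entry
		return "FORWARDED (new)"
	}
	return "DROPPED"
}

func main() {
	dp := &datapath{
		// Policy: only TCP traffic to port 80 is allowed.
		allowed: func(t tuple) bool { return t.Proto == "TCP" && t.DstPort == 80 },
		ct:      map[tuple]bool{},
	}
	req := tuple{SrcIP: "10.0.0.1", DstIP: "10.0.0.2", SrcPort: 50142, DstPort: 80, Proto: "TCP"}
	fmt.Println("request:    ", dp.handle(req))
	fmt.Println("reply:      ", dp.handle(req.reverse()))
	unsolicited := tuple{SrcIP: "10.0.0.2", DstIP: "10.0.0.1", SrcPort: 80, DstPort: 40000, Proto: "TCP"}
	fmt.Println("unsolicited:", dp.handle(unsolicited))
}
```

The reply is forwarded even though no rule mentions the client's ephemeral port, while a packet that does not belong to any tracked connection still has to pass the policy.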

Let's follow the overall process through the Cilium code.

Code: conntrack-Based Connection State Tracking

1) Building the L4Policy struct from the CiliumNetworkPolicy

// cilium/pkg/policy/repository.go

func (p *Repository) resolvePolicyLocked(securityIdentity *identity.Identity) (*selectorPolicy, error) {

...

// Determine whether policy is enforced and return the list of matching rules.

matchingRules := p.computePolicyEnforcementAndRules(securityIdentity)

...

// Analyze the ingress and egress rules and record the user-defined policy as an L4Policy (builds the L4Policy struct).

if ingressEnabled {

newL4IngressPolicy, err := matchingRules.resolveL4IngressPolicy(&policyCtx)

if err != nil {

return nil, err

}

calculatedPolicy.L4Policy.Ingress.PortRules = newL4IngressPolicy

}

if egressEnabled {

newL4EgressPolicy, err := matchingRules.resolveL4EgressPolicy(&policyCtx)

if err != nil {

return nil, err

}

calculatedPolicy.L4Policy.Egress.PortRules = newL4EgressPolicy

}

2) Applying the generated policy to the actual PolicyMap

// cilium/pkg/endpoint/bpf.go

func (e *Endpoint) runPreCompilationSteps(regenContext *regenerationContext) (preCompilationError error) {

...

// Evaluate the stored policy and generate the endpointPolicy.

err := e.regeneratePolicy(stats, datapathRegenCtxt)

...

// Apply the endpointPolicy to the PolicyMap (eBPF map)

err = e.applyPolicyMapChangesLocked(regenContext, e.desiredPolicy != e.realizedPolicy)

...

}

// cilium/pkg/endpoint/policy.go

// Evaluate the stored policy and generate the endpointPolicy.

func (e *Endpoint) regeneratePolicy(stats *regenerationStatistics, datapathRegenCtxt *datapathRegenerationContext) error {

...

//1. selectorPolicy = the policy resolved from the policy repository

selectorPolicy, result.policyRevision, err = e.policyRepo.GetSelectorPolicy(securityIdentity, skipPolicyRevision, stats, e.GetID())

...

//2. Distill selectorPolicy into an endpointPolicy, a form that can be handed to BPF

result.endpointPolicy = selectorPolicy.DistillPolicy(e.getLogger(), e, desiredRedirects)

3) On packet receipt, the eBPF program looks up conntrack and checks the policy against the PolicyMap

//cilium/bpf/bpf_lxc.c

static __always_inline int handle_ipv4_from_lxc(struct __ctx_buff *ctx, __u32 *dst_sec_identity,

__s8 *ext_err)

...

switch (ct_status) {

case CT_NEW:

case CT_ESTABLISHED:

// Look up the policy verdict for this flow in the PolicyMap (cilium_policy_v2).

verdict = policy_can_egress4(ctx, &cilium_policy_v2, tuple, l4_off, SECLABEL_IPV4,

*dst_sec_identity, &policy_match_type, &audited,

ext_err, &proxy_port);

switch (ct_status) {

// For a new connection, create a new conntrack entry.

case CT_NEW:

ct_recreate4:

...

break;

// If a conntrack entry already exists, the traffic is allowed (the entry is recreated if needed).

case CT_ESTABLISHED:

...

break;

Creating the CiliumNetworkPolicy

# sw_l3_l4_policy.yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "rule1"
spec:
  description: "L3-L4 policy to restrict deathstar access to empire ships only"
  endpointSelector:
    matchLabels:
      org: empire
      class: deathstar
  ingress:
  - fromEndpoints:
    - matchLabels:
        org: empire
    toPorts:
    - ports:
      - port: "80"
        protocol: TCP

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.17.6/examples/minikube/sw_l3_l4_policy.yaml

ciliumnetworkpolicy.cilium.io/rule1 created
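How this rule separates tiefighter from xwing can be worked through by hand with a small sketch. It is a simplified model and not Cilium's policy engine: the `labels` and `rule` types and the `ingressVerdict` function are hypothetical, and only exact `matchLabels` matching on a single port is modeled. The label values are the ones shown by `kubectl get pod --show-labels` earlier.

```go
package main

import "fmt"

type labels map[string]string

// selects reports whether every key/value in the selector is present on the pod.
func selects(selector, pod labels) bool {
	for k, v := range selector {
		if got, ok := pod[k]; !ok || got != v {
			return false
		}
	}
	return true
}

// rule is a simplified, hypothetical model of the L3/L4 ingress rule above.
type rule struct {
	endpointSelector labels
	fromEndpoints    labels
	port             uint16
	protocol         string
}

// ingressVerdict evaluates one connection attempt against the rule.
func (r rule) ingressVerdict(src, dst labels, port uint16, proto string) string {
	if !selects(r.endpointSelector, dst) {
		// The rule does not select this destination, so it imposes no restriction.
		return "FORWARDED"
	}
	if selects(r.fromEndpoints, src) && port == r.port && proto == r.protocol {
		return "FORWARDED"
	}
	return "DROPPED"
}

func main() {
	rule1 := rule{
		endpointSelector: labels{"org": "empire", "class": "deathstar"},
		fromEndpoints:    labels{"org": "empire"},
		port:             80,
		protocol:         "TCP",
	}
	deathstar := labels{"org": "empire", "class": "deathstar"}
	tiefighter := labels{"org": "empire", "class": "tiefighter"}
	xwing := labels{"org": "alliance", "class": "xwing"}

	fmt.Println("tiefighter -> deathstar:80:", rule1.ingressVerdict(tiefighter, deathstar, 80, "TCP"))
	fmt.Println("xwing      -> deathstar:80:", rule1.ingressVerdict(xwing, deathstar, 80, "TCP"))
}
```

Because the decision depends only on labels, the two deathstar replicas are covered by the same rule regardless of which Pod IP the Service picks.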

# Confirm that policy enforcement is enabled for ingress

(⎈|HomeLab:N/A) root@k8s-ctr:~# c1 endpoint list

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

... ready

1224 Enabled Disabled 50122 k8s:app.kubernetes.io/name=deathstar 172.20.1.85 ready

k8s:class=deathstar

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:org=empire

2-1) tiefighter -> deathstar : request-landing

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

Ship landed

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe -f --protocol tcp --from-identity $DEATHSTARID

Jul 25 16:07:58.104: default/tiefighter:50142 (ID:62396) <- default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-network FORWARDED (TCP Flags: SYN, ACK)

Jul 25 16:07:58.106: default/tiefighter:50142 (ID:62396) <- default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-network FORWARDED (TCP Flags: ACK, PSH)

Jul 25 16:07:58.107: default/tiefighter:50142 (ID:62396) <- default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-network FORWARDED (TCP Flags: ACK, FIN)

Jul 25 16:07:58.151: default/tiefighter:50142 (ID:62396) <- default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: SYN, ACK)

Jul 25 16:07:58.151: default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) <> default/tiefighter (ID:62396) pre-xlate-rev TRACED (TCP)

Jul 25 16:07:58.151: default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) <> default/tiefighter (ID:62396) pre-xlate-rev TRACED (TCP)

Jul 25 16:07:58.153: default/tiefighter:50142 (ID:62396) <- default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: ACK, PSH)

Jul 25 16:07:58.154: default/tiefighter:50142 (ID:62396) <- default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) to-endpoint FORWARDED (TCP Flags: ACK, FIN)

2-2) xwing -> deathstar : request-landing

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe -f --type drop

Jul 25 16:05:58.942: default/xwing:38870 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) Policy denied DROPPED (TCP Flags: SYN)

Jul 25 16:05:59.962: default/xwing:38870 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) Policy denied DROPPED (TCP Flags: SYN)

Jul 25 16:06:00.987: default/xwing:38870 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) Policy denied DROPPED (TCP Flags: SYN)

Jul 25 16:06:02.011: default/xwing:38870 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) Policy denied DROPPED (TCP Flags: SYN)

Jul 25 16:06:03.034: default/xwing:38870 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) Policy denied DROPPED (TCP Flags: SYN)

Jul 25 16:06:04.059: default/xwing:38870 (ID:6305) <> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) Policy denied DROPPED (TCP Flags: SYN)시나리오 3) empire에 속한 그룹에 한해 명확한 요청으로(request-landing) deathstar 호출 가능

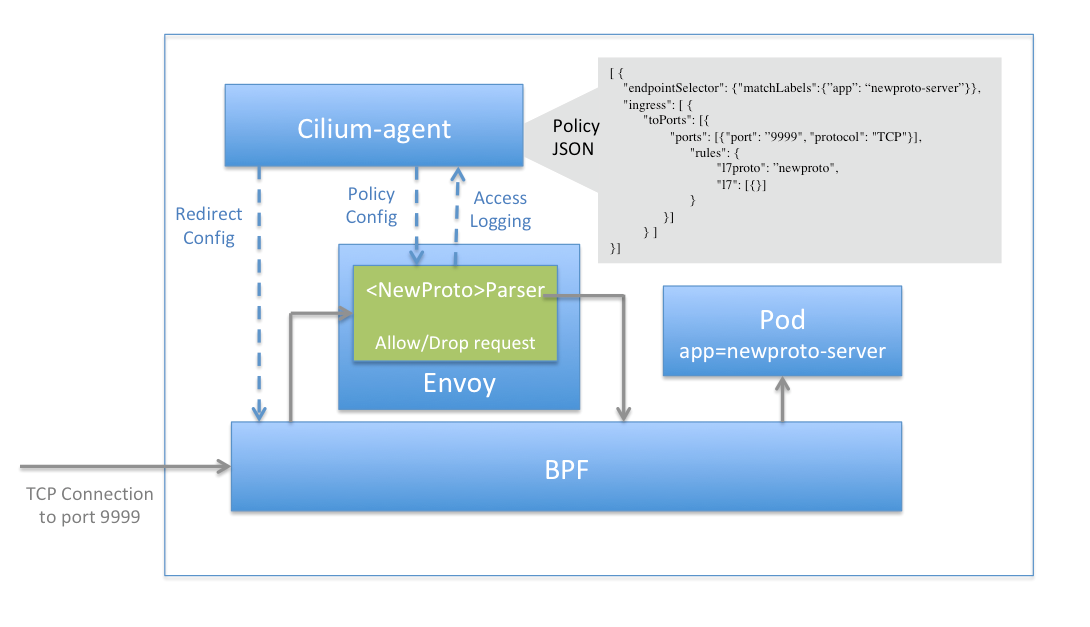

L7 정책 적용

L7 동작 처리는 cilium-envoy 데몬셋이 담당한다.

공식 문서 참조1

공식 문서 참조2

L7 정책은 L3/L4와는 다르게 단순한 eBPF map 기반 정책으로는 처리할 수 없기 때문에, Cilium에서는 Envoy Proxy를 연동하여 L7 처리를 수행하도록 설계되어있다.

사용자가 CiliumNetworkPolicy에 HTTP method나 path 등 L7 룰을 정의하면, Cilium은 해당 정책을 분석해 해당 트래픽을 Envoy Proxy로 보낸다.

proxy_port는 eBPF 코드에서 트래픽을 리디렉션할 포트를 의미하며, L7 정책이 있을 때에만 할당되는 port이다.

proxy_port가 0보다 크면 아래 코드와 같이 해당 트래픽을 proxy redirection 체크를 한 후, envoy proxy로 redirect시킨다.

//cilium/bpf/bpf_lxc.c

ct_state_new.proxy_redirect = *proxy_port > 0;

/* ext_err may contain a value from __policy_can_access, and

* ct_create6 overwrites it only if it returns an error itself.

* As the error from __policy_can_access is dropped in that

* case, it's OK to return ext_err from ct_create6 along with

* its error code.

*/

ret = ct_create6(get_ct_map6(tuple), &cilium_ct_any6_global, tuple, ctx, CT_INGRESS,

&ct_state_new, ext_err);

if (IS_ERR(ret))

return ret;

}

if (*proxy_port > 0)

goto redirect_to_proxy;

...

redirect_to_proxy:

send_trace_notify4(ctx, TRACE_TO_PROXY, src_label, SECLABEL_IPV4, orig_sip,

bpf_ntohs(*proxy_port), ifindex, trace.reason,

trace.monitor);

if (tuple_out)

*tuple_out = *tuple;

return POLICY_ACT_PROXY_REDIRECT;

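}

Envoy then inspects the traffic it receives against the policy and, if the traffic is allowed, passes it on to the original destination (Pod) through the eBPF datapath.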

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ds -n kube-system cilium-envoy

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

cilium-envoy 3 3 3 3 3 kubernetes.io/os=linux 23h

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl describe ds -n kube-system cilium-envoy | grep -i mount -A4

Mounts:

/sys/fs/bpf from bpf-maps (rw)

/var/run/cilium/envoy/ from envoy-config (ro)

/var/run/cilium/envoy/artifacts from envoy-artifacts (ro)

/var/run/cilium/envoy/sockets from envoy-sockets (rw)

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -c cilium-agent -- ss -xnp | grep -i envoy

u_str ESTAB 0 0 /var/run/cilium/envoy/sockets/admin.sock 28795 * 29729

u_str ESTAB 0 0 /var/run/cilium/envoy/sockets/admin.sock 28789 * 29726

u_str ESTAB 0 0 /var/run/cilium/envoy/sockets/xds.sock 35039 * 35038 users:(("cilium-agent",pid=1,fd=72))

Updating the CiliumNetworkPolicy

# sw_l3_l4_l7_policy.yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "rule1"
spec:
  description: "L7 policy to restrict access to specific HTTP call"
  endpointSelector:
    matchLabels:
      org: empire
      class: deathstar
  ingress:
  - fromEndpoints:
    - matchLabels:
        org: empire
    toPorts:
    - ports:
      - port: "80"
        protocol: TCP
      rules:
        http:
        - method: "POST"
          path: "/v1/request-landing"

kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.17.6/examples/minikube/sw_l3_l4_l7_policy.yaml
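The HTTP rule in this policy can be reasoned about with a short sketch of the check the proxy has to perform on each request. This is an illustration only, not Envoy's or Cilium's code: `httpRule`, `newHTTPRule`, and `allowed` are hypothetical names, and the sketch assumes that `method` and `path` are regular expressions that must match the whole value, which should be verified against the documentation for your Cilium version.

```go
package main

import (
	"fmt"
	"regexp"
)

// httpRule is a hypothetical model of one entry under rules.http.
type httpRule struct {
	method *regexp.Regexp
	path   *regexp.Regexp
}

// newHTTPRule anchors both patterns so that they must match the whole value
// (an assumption of this sketch).
func newHTTPRule(method, path string) httpRule {
	return httpRule{
		method: regexp.MustCompile("^(?:" + method + ")$"),
		path:   regexp.MustCompile("^(?:" + path + ")$"),
	}
}

// allowed reports whether at least one rule matches the request.
func allowed(rules []httpRule, method, path string) bool {
	for _, r := range rules {
		if r.method.MatchString(method) && r.path.MatchString(path) {
			return true
		}
	}
	return false
}

func main() {
	rules := []httpRule{newHTTPRule("POST", "/v1/request-landing")}
	requests := [][2]string{
		{"POST", "/v1/request-landing"},
		{"PUT", "/v1/exhaust-port"},
	}
	for _, rq := range requests {
		if allowed(rules, rq[0], rq[1]) {
			fmt.Println(rq[0], rq[1], "-> forwarded to the backend")
		} else {
			fmt.Println(rq[0], rq[1], "-> rejected by the proxy with 403")
		}
	}
}
```

Unlike the L3/L4 case, a rejected request here still completes its TCP handshake. The denial happens at the HTTP layer, which is why the client sees an "Access denied" response instead of a timeout.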

(⎈|HomeLab:N/A) root@k8s-ctr:~# c0 policy get

[

{

"endpointSelector": {

"matchLabels": {

"any:class": "deathstar",

"any:org": "empire",

"k8s:io.kubernetes.pod.namespace": "default"

}

},

"ingress": [

{

"fromEndpoints": [

{

"matchLabels": {

"any:org": "empire",

"k8s:io.kubernetes.pod.namespace": "default"

}

}

],

"toPorts": [

{

"ports": [

{

"port": "80",

"protocol": "TCP"

}

],

"rules": {

"http": [

{

"path": "/v1/request-landing",

"method": "POST"

}

]

}

}

]

}

],

"labels": [

{

"key": "io.cilium.k8s.policy.derived-from",

"value": "CiliumNetworkPolicy",

"source": "k8s"

},

{

"key": "io.cilium.k8s.policy.name",

"value": "rule1",

"source": "k8s"

},

{

"key": "io.cilium.k8s.policy.namespace",

"value": "default",

"source": "k8s"

},

{

"key": "io.cilium.k8s.policy.uid",

"value": "c07db93d-ea58-448b-aee1-3a4701800f13",

"source": "k8s"

}

],

"enableDefaultDeny": {

"ingress": true,

"egress": false

},

"description": "L7 policy to restrict access to specific HTTP call"

}

]

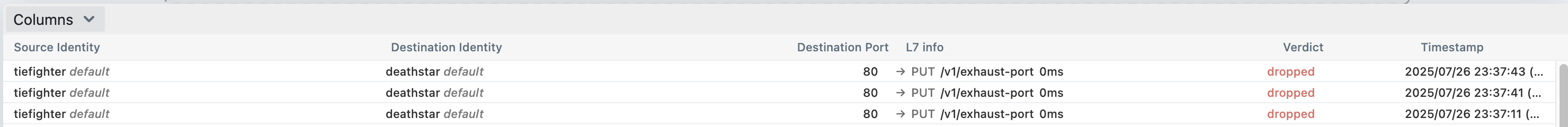

Revision: 33-1) tiefighter -> deathstar : exhaust-port

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec tiefighter -- curl -s -XPUT deathstar.default.svc.cluster.local/v1/exhaust-port

Access denied

(⎈|HomeLab:N/A) root@k8s-ctr:~# hubble observe -f --pod deathstar --verdict DROPPED

Jul 26 14:37:11.689: default/tiefighter:49534 (ID:62396) -> default/deathstar-8c4c77fb7-8wdts:80 (ID:50122) http-request DROPPED (HTTP/1.1 PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port)

(⎈|HomeLab:N/A) root@k8s-ctr:~# c1 monitor -v --type l7

CPU 01: [pre-xlate-rev] cgroup_id: 7275 sock_cookie: 9612, dst [172.20.1.39]:56024 tcp

<- Request http from 1899 ([k8s:app.kubernetes.io/name=tiefighter k8s:class=tiefighter k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default k8s:io.cilium.k8s.policy.cluster=default k8s:io.cilium.k8s.policy.serviceaccount=default k8s:io.kubernetes.pod.namespace=default k8s:org=empire]) to 1224 ([k8s:app.kubernetes.io/name=deathstar k8s:class=deathstar k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default k8s:io.cilium.k8s.policy.cluster=default k8s:io.cilium.k8s.policy.serviceaccount=default k8s:io.kubernetes.pod.namespace=default k8s:org=empire]), identity 62396->50122, verdict Denied PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port => 0

<- Response http to 1899 ([k8s:app.kubernetes.io/name=tiefighter k8s:class=tiefighter k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default k8s:io.cilium.k8s.policy.cluster=default k8s:io.cilium.k8s.policy.serviceaccount=default k8s:io.kubernetes.pod.namespace=default k8s:org=empire]) from 1224 ([k8s:app.kubernetes.io/name=deathstar k8s:class=deathstar k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default k8s:io.cilium.k8s.policy.cluster=default k8s:io.cilium.k8s.policy.serviceaccount=default k8s:io.kubernetes.pod.namespace=default k8s:org=empire]), identity 50122->62396, verdict Forwarded PUT http://deathstar.default.svc.cluster.local/v1/exhaust-port => 403